Musings is an informal newsletter mainly highlighting recent science. It is intended as both fun and instructive. Items are posted a few times each week. See the Introduction, listed below, for more information.

If you got here from a search engine... Do a simple text search of this page to find your topic. Searches for a single word (or root) are most likely to work.

Introduction (separate page).

This page:

2016 (January-April)

April 30

April 27

April 20

April 13

April 6

March 30

March 23

March 16

March 9

March 2

February 24

February 17

February 10

February 3

January 27

January 20

January 13

January 6

Also see the complete listing of Musings pages, immediately below.

All pages:

Most recent posts

2026

2025

2024

2023:

January-April

May-December

2022:

January-April

May-August

September-December

2021:

January-April

May-August

September-December

2020:

January-April

May-August

September-December

2019:

January-April

May-August

September-December

2018:

January-April

May-August

September-December

2017:

January-April

May-August

September-December

2016:

January-April: this page, see detail above

May-August

September-December

2015:

January-April

May-August

September-December

2014:

January-April

May-August

September-December

2013:

January-April

May-August

September-December

2012:

January-April

May-August

September-December

2011:

January-April

May-August

September-December

2010:

January-June

July-December

2009

2008

Links to external sites will open in a new window.

Archive items may be edited, to condense them a bit or to update links. Some links may require a subscription for full access, but I try to provide at least one useful open source for most items.

Please let me know of any broken links you find -- on my Musings pages or any of my regular web pages. Personal reports are often the first way I find out about such a problem.

April 30, 2016

Xenotransplantation: using an organ from a different species as a replacement. In particular, pigs are considered as potential organ donors for humans. Musings has discussed the topic [links at the end].

A new article reports progress in a model system; we note it briefly as an example of work being done.

The model system involves addition of a pig heart to a baboon. By "addition" we mean that the transplanted heart is not a replacement heart, but rather an additional heart; the main purpose is to examine the survival of the pig heart. Variables include genetic modifications to the pig donor, and the immunosuppression regimen used in the recipient.

The short summary is that four pig hearts were transplanted into baboons in the new work. Median survival was 298 days; the longest was 945 days. (A fifth transplant was omitted from the analysis; the baboon died from an infection of unknown origin.) Those numbers are better than in their previous work, in which the median and longest survival were 180 and 500 days, respectively.

What is the basis of the improved results? As the title of the article might suggest, the details are quite technical, but they represent progress in understanding the immune response. The donor pigs have been genetically modified to eliminate certain known problems. Then the scientists experiment with how to maintain appropriate immunosuppression in the recipients.

News story: Much longer survival for heart transplants across species -- Study involved transplanting pig hearts into baboons. (Science Daily, April 6, 2016.)

The article, which is freely available: Chimeric 2C10R4 anti-CD40 antibody therapy is critical for long-term survival of GTKO.hCD46.hTBM pig-to-primate cardiac xenograft. (M M Mohiuddin et al, Nature Communications 7:11138, April 5, 2016.)

Background posts include...

* How to do 62 things at once -- and take a step towards making a pig that is better suited as an organ donor for humans (January 17, 2016). This addresses another problem with pigs: their endogenous retroviruses.

* Organ transplantation: from pig to human -- a status report (November 23, 2015). Links to more.

More: Laika, the first de-PERVed pig (October 22, 2017).

And one that is a direct follow-up to the current post: Pig hearts can sustain life in baboons for six months (January 7, 2019).

An alternative: Human heart tissue grown in spinach (September 5, 2017).

There is more about replacement body parts on my page Biotechnology in the News (BITN) for Cloning and stem cells. It includes an extensive list of related Musings posts.

More about baboons... Can French baboons learn to read English? (May 13, 2012).

April 29, 2016

Another story of a leak of natural gas (methane). A big event, a disaster, one that was headline news in California for several months. (And one that was only about ten miles up the road from a place I lived long long ago.)

The leak in this case was in a storage container for natural gas. That storage container was an old oil field. Yes, the gas company stored gas underground, in the Earth. It's a common practice, and it usually works ok. But this gas field sprang a leak, associated with a particular well. The leak spewed natural gas into the air in northern Los Angeles (LA) for several months.

Here are some numbers...

|

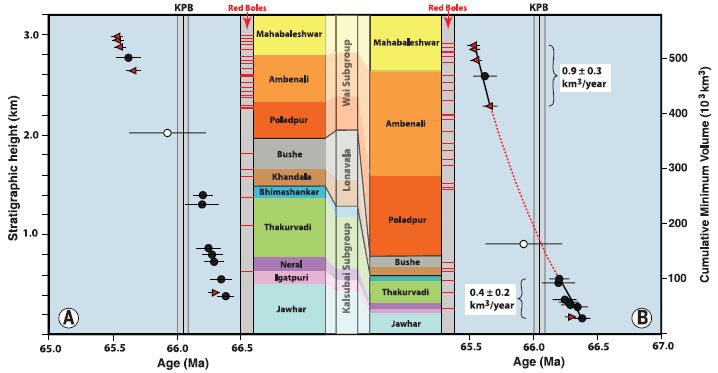

Part B (top) shows the rate of leakage of two hydrocarbons, in tonnes (metric tons) per hour. The data points are from actual measurements, taken from airplane monitors. The open symbols are for methane, CH4.

(The closed symbols, near the bottom, are for ethane, C2H6. Ethane is about 5% of natural gas. We won't deal with it further.)

For methane, the graph includes a red line; that is the scientists' best estimate what the overall curve looked like.

Part C (bottom) shows the cumulative amount of methane released, in thousands of tonnes. Part C also provides the detail for the x-axis time scale. To help you connect the two parts... A loss rate of 40 tonnes per hour is about a thousand tonnes per day. In round numbers, the field released about 40 tonnes of methane per hour, or a thousand tonnes per day, for a hundred days. That would be 100,000 tonnes. That's close to their best estimate: 97,100 tonnes, shown on the graph for part C. The decline in leakage rate starting about December 1 was due to reduced pressure in the field. The company moved much of the gas out of the leaking field. This is Figure 2 parts B & C from the article. |
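The caption's round-number check can be written out explicitly. This is a minimal sketch that uses only the caption's own round figures, assuming a constant 40 tonnes per hour for 100 days; the real leak rate varied (as the red curve shows), so this is a plausibility check, not a reconstruction of the authors' estimate.

```python
# Back-of-envelope check of the round numbers in the Figure 2 caption.
# Inputs are the caption's round figures, not the measured time series.
RATE_T_PER_HOUR = 40      # approximate leak rate of methane, tonnes per hour
DAYS = 100                # approximate duration at that rate, days
BEST_ESTIMATE_T = 97_100  # article's best estimate of total methane released, tonnes

def daily_release(rate_per_hour: float) -> float:
    """Convert an hourly release rate to tonnes per day."""
    return rate_per_hour * 24

def total_release(rate_per_hour: float, days: float) -> float:
    """Total release, assuming the same rate for the whole period."""
    return daily_release(rate_per_hour) * days

per_day = daily_release(RATE_T_PER_HOUR)       # "about a thousand" tonnes per day
total = total_release(RATE_T_PER_HOUR, DAYS)
print(f"{per_day:.0f} t/day; {total:,.0f} t total; "
      f"{total / BEST_ESTIMATE_T:.1%} of the best estimate")
```

Using 24 × 40 = 960 tonnes per day, rather than the rounded thousand, gives 96,000 tonnes; either way, the round numbers land within a few percent of the 97,100-tonne best estimate.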

Numbers. What do they mean?

The event is the second largest known accidental release of methane in the US. During the time of the event, it approximately doubled the methane release rate in the LA area.

On the other hand, such large releases are uncommon. Although the release doubled the LA area methane emission over that time period, it would be only a blip when looked at over a long time period.

Will such releases continue to be uncommon? If a similar event occurred, would we do a better job of containing it? Have we learned something from this event and others that would help reduce the risk in the future? Those are the types of questions we should be asking. Certainly, scientists are asking the questions. But implementing the answers is a political matter, too.

In addition to being a report on the Los Angeles leak, the work shows the value of using small airplanes carrying instrumentation for monitoring. The results from airborne monitoring were quickly made available to relevant parties, and guided what needed to be done on the ground.

News stories:

* California gas well blowout caused nation's largest methane release, study finds. (Phys.org, February 25, 2016.)

* Study: California's Aliso Canyon blowout led to largest U.S. methane leak ever. (NOAA, February 25, 2016.) From one of the government agencies involved in the work.

The article: Methane emissions from the 2015 Aliso Canyon blowout in Los Angeles, CA. (S Conley et al, Science 351:1317, March 18, 2016.) Check Google Scholar for a freely available preprint. (In the news media, the event was commonly described as being near Porter Ranch, the nearby community.)

Among previous leak reports: Boston is leaking (February 13, 2015).

A previous post about understanding and dealing with the leak problem: Methane leaks -- relevance to use of natural gas as a fuel (April 7, 2014).

Also see... Emissions from gas stoves, even when 'off' -- air pollution implications (February 21, 2023).

There is more about energy issues on my page Internet Resources for Organic and Biochemistry under Energy resources. It includes a list of related Musings posts.

An interesting story about a natural leak: Underwater "lost city" explained (July 25, 2016).

By the way, not all leak reports are about methane... Europa is leaking (February 10, 2014).

More from Los Angeles...

* Earthquakes induced by human activity: oil drilling in Los Angeles (February 12, 2019).

* DNA evidence in restaurants: is the fish properly labeled? (June 5, 2017).

April 27, 2016

I don't know about your yard, but a new article provides evidence for supernova debris on Earth.

In classical astronomy, a supernova is an extremely bright object that appears suddenly in the sky. We now understand that a supernova is an exploding star. A supernova event sends debris through the interstellar medium.

Would supernova debris hit Earth? Sure, why not?

How often? And would it do harm? Astronomers estimate that supernovae occur in the Milky Way galaxy about twice per century, on average. Perhaps there are supernovae in our neighborhood (within, say, a few hundred light-years) every few million years. What hits Earth would depend on the size of the event and its distance from us, but one might imagine this could be bad for Earth.

How would we recognize supernova debris? That debris includes unusual atomic nuclei that are made under the extreme conditions of the stellar explosion. Perhaps we could detect such unusual nuclei. The new work uses Fe-60, an isotope of iron. There is minimal "natural" Fe-60 on Earth. It is a radioactive isotope, with a half-life of about 3 million years. Any Fe-60 dating back to the formation of the Earth would have long since decayed, and there is no source for it on Earth. There is a substantial amount of Fe-60 in supernovae; finding Fe-60 on Earth might be taken as an indicator of supernova debris.

That's the idea. It's not entirely new; Fe-60 has been found before, and a supernova source suggested. In article #1 listed below, the scientists show that they find Fe-60 at about the same depth in sedimentary rocks under three oceans. By "same depth" we mean sedimentary material that dates to about the same age. Finding Fe-60 of about the same age in various places suggests it got deposited from some external source; a supernova would do nicely. Specifically, the scientists report finding Fe-60 in rocks about 2-3 million years old and about 7-9 million years old.

If we take the Fe-60 as evidence for supernova debris hitting Earth, then it means we have been subjected to such debris attacks at about those times. Did they do any harm? That's not obvious; the question remains open.

Since the half-life of Fe-60 is about 3 million years, the method is not likely to detect debris attacks that are much older than those found here. The new work provides evidence for two attacks within the last ten million years; it says nothing about whether there were older ones.
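The decay argument can be made concrete. The fraction of Fe-60 left after a time t is (1/2)^(t/T), where T is the half-life. The measured half-life of Fe-60 is about 2.6 million years (rounded above to "about 3"); the sketch below assumes that value. The ages are the approximate deposit ages mentioned above, an older age for comparison, and the age of the Earth (about 4.5 billion years).

```python
HALF_LIFE_MYR = 2.6  # assumed half-life of Fe-60, in millions of years

def fraction_remaining(age_myr: float, half_life_myr: float = HALF_LIFE_MYR) -> float:
    """Fraction of an initial amount of Fe-60 still present after age_myr million years."""
    return 0.5 ** (age_myr / half_life_myr)

for age in (2.5, 8, 20, 4500):
    print(f"{age:>6} Myr: {fraction_remaining(age):.3g}")
```

After 20 million years, less than 1% of the original Fe-60 is left, which is why much older debris would be hard to detect. For the age of the Earth, the result underflows to 0 in ordinary floating point: "long since decayed" in numerical form.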

There are actually two articles, published together. Article #1 provides the evidence for Fe-60, dating it to certain times. Article #2 does a theoretical analysis of the debris attacks, and tries to put them in the context of the history of our neighborhood. That's beyond our scope here, but some of the news stories note both articles.

Bottom line... These articles develop an interesting story of detecting supernova debris on Earth. There are plenty of questions remaining.

News stories:

* Nearby Supernovae Showered Earth with Radioactive Debris 2 to 8 Million Years Ago. (E de Lazaro, Sci.News, April 8, 2016.)

* Supernovae showered Earth with radioactive debris. (Science Daily, April 6, 2016.) For article #1.

News story accompanying the articles: Stellar astrophysics: Supernovae in the neighbourhood -- Detailed measurements of radioisotopes in deep-sea deposits, plus modelling of how they reached Earth, indicate that many supernovae have occurred near enough to have potentially influenced evolution. (A L Melott et al, Nature 532:40, April 7, 2016.)

The articles:

1) Recent near-Earth supernovae probed by global deposition of interstellar radioactive 60Fe. (A Wallner et al, Nature 532:69, April 7, 2016.)

2) The locations of recent supernovae near the Sun from modelling 60Fe transport. (D Breitschwerdt et al, Nature 532:73, April 7, 2016.)

More supernovae:

* How long does a supernova event last? (January 14, 2018).

* The major source of positrons (antimatter) in our galaxy? (August 13, 2017).

A post that might be (but probably isn't) about a supernova: Tree rings, carbon-14, cosmic rays, and a red crucifix (July 16, 2012).

More about the Milky Way: Dung beetles follow the Milky Way (February 24, 2013).

My page of Introductory Chemistry Internet resources includes a section on Nucleosynthesis; astrochemistry; nuclear energy; radioactivity. That section lists Musings posts on related topics.

April 25, 2016

A person gets hit in the head. Is there a brain injury, such as a concussion? It's a question that is getting much attention, particularly in the context of athletes. And it's not easy to tell.

What about a blood test for brain injury? Perhaps the level of certain proteins in the blood would indicate the status of brain injury. These might be proteins released as a result of injury, or proteins induced by the injury as part of a repair response.

A new article reports some encouraging results; the following figure is an example. It is a real-world test, monitoring a couple of candidate proteins that had been identified previously.

The figure shows results of testing the blood for two proteins, shown by two different colored curves. The curves show the blood level of each protein (y-axis) vs time after injury (x-axis).

All of the people being tested have had a trauma; some have evidence for a concussion. The concussion is more formally called mild to moderate traumatic brain injury (MMTBI). (Sometimes we just say traumatic brain injury, or TBI.) Results for those without MMTBI are in the top frame; results for those with MMTBI are in the bottom frame. (That is labeled at the right-hand end.) Therefore, what you want to do is compare the results between the top and bottom frames.

A quick glance shows that the protein levels are higher for those with MMTBI (bottom frame) at the beginning, say for a couple of days. That's encouraging. Let's look more closely.

The yellow curve (protein UCH-L1) rises very quickly following a brain injury. This could be very useful in distinguishing trauma cases with and without brain injury soon after the event.

The blue curve (protein GFAP) rises more slowly following a brain injury. It becomes dramatically higher after a day or so. Importantly, the blue curve remains higher for those with MMTBI out to the end of the study (180 hours, 7 1/2 days). To see this, you need to look carefully at the y-axis scales. In the top frame, y=0 is offset a little from the bottom; the results for the blue curve are essentially zero for all times past 48 hr. In the bottom frame (people with MMTBI), they are significantly above zero for the entire time span.

The x-axis is labeled "time after injury". I suspect what the authors meant was time after first examination. The article says that this was within 4 hours of the injury.

This is Figure 2B from the article. I have added the labels to identify what proteins the yellow and blue curves are for. However, the nature of the proteins is not important for now; they are just "markers".

The results are encouraging. It may be that a simple blood test immediately following a trauma could provide quick information about whether the person has suffered a brain injury. Further, the test can still be very useful even if the person is not examined for a few days after the trauma. That's also important, because it is common for brain symptoms to be delayed, and for people to not seek immediate treatment after seemingly minor trauma.

News stories:

* Concussions In Sports: Simple Blood Test Could Diagnose Traumatic Brain Injury In 7 Days, Help Prevent CTE. (J Caba, Medical Daily, March 29, 2016.)

* Simple blood test can detect evidence of concussions up to a week after injury -- Biomarker released by the brain during injury found to stay in the bloodstream for 7 days. (Science Daily, March 28, 2016.)

The article: Time Course and Diagnostic Accuracy of Glial and Neuronal Blood Biomarkers GFAP and UCH-L1 in a Large Cohort of Trauma Patients With and Without Mild Traumatic Brain Injury. (L Papa et al, JAMA Neurology 73:551, May 2016.)

A post on the problem of evaluating brain injury... Early detection of brain damage in football players? A breakthrough, or not? (September 14, 2015). This and the current post address different parts of the problem. The new post is about immediate evaluation of possible brain injury following a trauma. The earlier post is looking for accumulated damage in the brain.

... and on doing something about it ... Role of a receptor for HIV in stroke recovery (March 23, 2019).

More about trauma: Studying concussions in egg yolks (February 28, 2021).

My page for Biotechnology in the News (BITN) -- Other topics includes a section on Brain. It includes a list of brain-related posts.

April 23, 2016

Traveling along a curve is different from traveling in a straight line. That's true whether you are driving or running.

Track events, such as the 200 meter race, are commonly run with a counterclockwise (CCW) curve. Does that matter? Well, it shouldn't matter much. After all, the human body is bilaterally symmetric, so even if we slow for curves when running, the effect should be about the same regardless of the direction of the curve.

A new article addresses an interesting variation of the question. What about runners who have one natural leg and one prosthetic leg? They are not bilaterally symmetric. Do they run curves the same regardless of curve direction? Is the common CCW track fair for both right- and left-leg amputees?

The Paralympics provides professional competition for athletes with disabilities. The authors of the new work wanted to investigate their question for the best of the athletes, so they got together a group of Paralympic runners and compared right- and left-leg amputees on tracks with both clockwise (CW) and CCW curves. The authors used the usual technologies of analyzing athletes, in particular high-speed videos.

The result? Most runners slow on curves. But asymmetric amputees slow more when their prosthetic leg is on the inside of the curve. The effect was about 4%. That could affect race time by 0.2 seconds, which might be important.
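To see how a 4% slowdown can turn into a couple of tenths of a second, here is a small sketch. The curve length and running speed below are hypothetical round numbers chosen for illustration; they are not values from the article.

```python
def time_penalty(distance_m: float, speed_m_s: float, slowdown: float) -> float:
    """Extra seconds needed to cover distance_m when speed drops by the fraction slowdown."""
    t_normal = distance_m / speed_m_s
    t_slow = distance_m / (speed_m_s * (1 - slowdown))
    return t_slow - t_normal

# Hypothetical inputs, for illustration only (not from the article):
CURVE_M = 40.0  # stretch of curve run with the prosthesis on the inside, meters
SPEED = 9.0     # sprinter's speed on that stretch, m/s

print(f"{time_penalty(CURVE_M, SPEED, 0.04):.2f} s")
```

With these inputs the penalty is about 0.19 seconds. Algebraically, the penalty is (d/v) × s/(1 − s), so it grows in proportion to the time spent on the affected part of the curve.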

What should happen now, besides collecting further data? This is a scientific article, which provides information. It will be interesting to see whether there is any response to the finding. A simple possibility is to have those who might be at a disadvantage run in the outer lanes, with a larger radius of curvature. (The scientists have not shown that the effect depends on the radius of curvature, but it is likely, and can be tested.) It's also an interesting question whether the new knowledge might help guide the development of better prosthetics.

A caution... The work here uses a small number of athletes, and the data sets are limited. The athletes vary, of course. For example, the results for the right- and left-leg amputees are quite different. Some of the effects reported are small. As noted above, a small effect, if real, could be important in a real race. So the caution is that this story may or may not hold up. It's an interesting project, which at least raises questions. I encourage those who may find this of serious interest to examine the data carefully; some of it is in the "Supplementary information". (And the article itself has detailed data on the nature of the strides.)

News stories:

* Why Paralympic sprinters have trouble with curves. (H Thompson, Student Science (Now Science Explorers, Society for Science & the Public), April 11, 2016.)

* Paralympic track sprinters are slowed by curves -- Left-leg amputee sprinters on inside lane of indoor track lose valuable time. (Science Daily, March 16, 2016.)

* News story accompanying the article: Paralympic sprinters' prostheses impair curve-running performance. (K Knight et al, Journal of Experimental Biology 219:769, March 15, 2016.) (In the pdf file, this is the first item.)

* The article: Maximum-speed curve-running biomechanics of sprinters with and without unilateral leg amputations. (P Taboga et al, Journal of Experimental Biology 219:851, March 15, 2016.)

More about running... Should you run barefoot? (February 22, 2010).

There is now an extensive list of sports-related Musings posts on my page Internet resources: Miscellaneous under Sports.

My Biotechnology in the News (BITN) page for Cloning and stem cells lists Musings posts not only in the title area, but more broadly posts about replacement body parts including prosthetic devices.

April 22, 2016

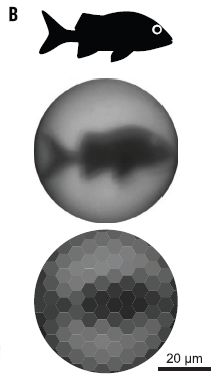

Look...

The figures show samples that have been stained using antibodies against the muscle protein myosin. Start with the "control", frame K (right). The sample here is blood vessels from an ostrich. The green color shows where the anti-myosin antibody bound; the green aligns with the vessel material. The sample in frame I (left) is from a dinosaur fossil. Looks pretty good. These are parts of Figure 5 from the article. The scale bars are 20 µm.

That's the heart of the story from a recent article. Samples from a dinosaur fossil have identifiable proteins, consistent with them being from dinosaur blood vessels. The figures above show the evidence for myosin; the full figure includes similar evidence, using antibodies, for three other proteins. The article also includes analysis of the proteins by mass spectrometry.

It is a startling claim, because scientists have been skeptical that proteins can survive that long -- on the order of a hundred million years.

This is not a completely new story. It is part of an ongoing effort to identify proteins in dinosaur fossils. Musings has noted earlier work [link at the end].

Work with ancient DNA went through a time of serious skepticism. In fact, some of the early work was wrong. The problem is not the measurements per se. It's clear that the dinosaur sample above stains for myosin. The concern is sample integrity. Is the sample really what is claimed, or has it -- somehow -- been contaminated with other materials, either in the field or in the lab? Over time, the ancient DNA scientists have worked out procedures and standards for such work; the ancient protein scientists are trying to follow. Much of the current article discusses the integrity issue.

The current work is framed around a rather modest issue. Some have suggested that the protein material in the dinosaur fossils is from bacterial biofilms. The work in the article provides good evidence that these are animal proteins, not bacterial proteins. But there is much more to wonder about.

What's the take home lesson? I suggest that we simply note it. A team of scientists reports that structures and proteins in dinosaur fossils appear to be from blood vessels. Others will work on this, addressing every possible concern. Perhaps we will see independent confirmation. Perhaps someone will identify fatal flaws in the work. Perhaps finding flaws will lead to improved procedures. For now, all we can do is report what has been claimed, and await further developments. Science in progress.

News stories:

* Paleontologists Find Mineralized Blood Vessels in 80-Million-Year-Old Hadrosaur Fossil. (E de Lazaro, Sci.News, December 1, 2015.)

* Dinosaur Blood Vessels Survived 80 Million Years Without Fossilizing. (L Geggel, Live Science, December 9, 2015.)

The article: Mass Spectrometry and Antibody-Based Characterization of Blood Vessels from Brachylophosaurus canadensis. (T P Cleland et al, Journal of Proteome Research 14:5252, December 4, 2015.)

Background post: Dinosaur proteins (July 6, 2009). Links to more.

Most recent post about dinosaurs... What caused the extinction of the dinosaurs: Another new twist? (January 26, 2016).

Posts about blood vessels include... Peripartum cardiomyopathy -- a heart condition associated with pregnancy (June 30, 2012).

Some posts about the ancient DNA field...

* Ancient DNA: an overview (August 22, 2015).

* Chromosomes -- 180 million years old? (April 18, 2014). The claim here is based on visible features; there is no claim of old DNA.

* The oldest DNA: the genome sequence from a 700,000-year-old horse (August 4, 2013). I think this still stands as the oldest DNA that has been sequenced.

More mass spec: Hydride-in-a-cage: the H₂₅⁻ ion (January 22, 2017).

April 19, 2016

Briefly noted...

A team of scientists has recently reported identification and dating of three fossil forests in Svalbard, in northern Norway.

They are tropical forests of primitive trees, as one might expect for 380 million years ago -- back when Norway was on the equator.

That was a time of extremely high CO2 in the atmosphere, several times present levels. It is thought that an era of vigorous growth by a new group of organisms known as trees played a key role in reducing CO2 to modern levels. Perhaps tropical Norway played a key role in reducing the CO2 level to something more acceptable to the modern animal life, which did not yet exist.

It's a fun little story, a reminder of how things change -- and move.

News stories:

* Paleontologists Unearth Tropical Fossil Forests in Norway. (N Anderson, Sci.News, November 19, 2015.)

* Ancient fossil forest unearthed in Arctic Norway. (Science Daily, November 19, 2015.)

The article, which is freely available: Lycopsid forests in the early Late Devonian paleoequatorial zone of Svalbard. (C M Berry & J E A Marshall, Geology 43:1043, December 2015.)

More Norwegian trees... The spruce genome: it's big (July 1, 2013).

More from Svalbard... Svalbard is leaking (March 7, 2014).

More forests: The downside of nitrogen fixation? (November 4, 2017).

April 18, 2016

Huntington's disease (HD) is a progressive neurodegenerative disease. It is caused by mutations in the gene for a protein called huntingtin (HTT). Interestingly, the mutations involve expansions of a three-base (triplet) repeat, leading to repeats of the amino acid glutamine in the protein. How the mutant protein causes disease is not understood.
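The link between the triplet repeat and the glutamine repeat can be illustrated with a short sketch. CAG is one of the two codons for glutamine (the other is CAA), so a run of CAG codons read in frame becomes a run of glutamines in the protein. The function name and test sequences are hypothetical; no real HTT sequence is used here.

```python
GLN_CODONS = {"CAG", "CAA"}  # the two codons for glutamine (one-letter code Q)

def longest_polyq(coding_dna: str) -> int:
    """Longest run of consecutive glutamine codons, reading in frame from position 0."""
    seq = coding_dna.upper()
    best = current = 0
    for i in range(0, len(seq) - 2, 3):    # step through the sequence codon by codon
        if seq[i:i + 3] in GLN_CODONS:
            current += 1
            best = max(best, current)
        else:
            current = 0                    # any other codon breaks the run
    return best

print(longest_polyq("ATG" + "CAG" * 23 + "CCG"))   # hypothetical 23-repeat fragment
```

The example returns 23, the kind of number behind a label such as 23Q. Counting in frame matters: the same letters read starting one base later would not encode glutamine at all.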

What if we put the mutant huntingtin gene into a songbird?

Let's look at what was reported in a recent article...

In this experiment, zebra finches with various forms of the huntingtin protein were studied. The key variable is the number of consecutive glutamines in the protein. This is shown by a number such as 4Q, meaning a repeat of 4 glutamines. (Q is the code for glutamine.)

In the test here, the birds learned their song by three months of age. They were then followed to see (hear?) how well they remembered it later. This is shown as a similarity score (y-axis), plotted against time (x-axis). You can see that the 4Q and 23Q birds remembered their songs rather well, but the 145Q birds performed poorly over time. This is Figure 3C from the article.

The results show that birds with mutant HTT protein, with a large Q repeat, have song defects. Much of the article elaborates on this story.

Let's fill in some details... First, the birds naturally have an HTT gene, which is quite similar to that in humans. In fact, the birds labeled 4Q are normal, wild-type (WT) birds, with that normal bird HTT gene. (That is, 4Q is the normal repeat for these birds.) The birds with 23Q have the human wild-type HTT gene added. (23Q is about normal for humans, but it varies.) The birds with 145Q have an added HTT gene from a human with the disease.

HD is a dominant disorder. Thus, adding the human HTT gene to the birds (not replacing their natural copy) is appropriate.

The experiment described above shows that adding the wild-type human HTT has little effect. (Some of the experiments reported do show some effect of adding 23Q.) Adding the form of the HTT gene that causes disease in humans disrupts song in the birds.

Interestingly, the songbirds with the 145Q HTT protein are generally normal, except for their problems with song. In fact, the authors note that their birds are "the first experimentally created, functional mutant songbirds" (abstract).

What does one do with this finding? That's not clear yet. Might it be useful in studying the nature of Huntington's disease? Might it be useful in studying bird song? For now, it is an intriguing new finding.

Why study HD in songbirds? The birds, like humans but unlike other common lab animals, make vocalizations that are learned.

News stories:

* Songbirds Could Be Used as Valuable Tool to Study Brain Neurodegeneration, Huntington's Disease. (M Ammam, Huntington's Disease News, October 7, 2015.)

* Finches offer researchers a new tool to study Huntington's disease. (Rockefeller University, October 5, 2015. Now archived.) From the lead institution.

The article: Human mutant huntingtin disrupts vocal learning in transgenic songbirds. (W Liu et al, Nature Neuroscience 18:1617, November 2015.)

Previous post about HD: Huntington's disease: Is it an amino acid deficiency? (October 4, 2014).

and more...

* An "antidote" for Huntington's disease? (February 29, 2020).

* Triplet-repeats: Do they act through the RNA? (September 24, 2017).

More about songbirds...

* The oldest known syrinx (December 4, 2016).

* Are urban dwellers smarter than rural dwellers? (August 2, 2016).

* Bird brains -- better than mammalian brains? (June 24, 2016).

My page for Biotechnology in the News (BITN) -- Other topics includes a section on Brain (autism, schizophrenia). It includes a list of brain-related posts.

April 17, 2016

The heart of the CRISPR system is a nuclease, Cas9, which cuts DNA at a target site. The targeting is achieved by a guide RNA. The use of an RNA for targeting is what makes CRISPR so easy to use; it is easy to make an RNA complementary to any DNA region of interest. What happens after the cut depends on how the system is being used.
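Here is a sketch of why RNA targeting is easy, assuming the commonly used Streptococcus pyogenes Cas9. That enzyme needs a short "PAM" motif, NGG, right after a 20-base target (the protospacer). The guide RNA carries the same sequence as the protospacer (with U in place of T), so it pairs with the opposite DNA strand, and the cut falls about 3 bases upstream of the PAM. The function name and demo sequence are hypothetical; a real design tool would also scan the other strand and check for off-target matches.

```python
from typing import Iterator, Tuple

def find_target_sites(dna: str, spacer_len: int = 20) -> Iterator[Tuple[int, str, str, int]]:
    """Scan one strand for NGG PAMs and report the target just upstream of each.

    Yields (start, protospacer, guide_spacer_rna, cut_index), where cut_index is
    the position of the first base after the expected blunt cut.
    """
    seq = dna.upper()
    for pam_start in range(spacer_len, len(seq) - 2):
        if seq[pam_start + 1:pam_start + 3] == "GG":      # PAM = any base, then G, G
            start = pam_start - spacer_len
            protospacer = seq[start:pam_start]
            guide = protospacer.replace("T", "U")         # RNA has U where DNA has T
            yield start, protospacer, guide, pam_start - 3  # cut ~3 bp upstream of PAM

demo = "AAAAA" + "ACGTACGTACGTACGTACGT" + "TGG" + "AAAAA"  # hypothetical sequence
for site in find_target_sites(demo):
    print(site)
```

For the demo sequence there is one site: the target starts at position 5, and the guide is the same 20 bases written as RNA.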

A simple use of CRISPR is to restrict the growth of a virus, such as HIV. In this case, the guide RNA targets a critical region of the viral genome; Cas9 cuts at the targeted site. It is left to normal cellular processes to repair the cut; the expectation is that such repair will make the virus unable to grow.

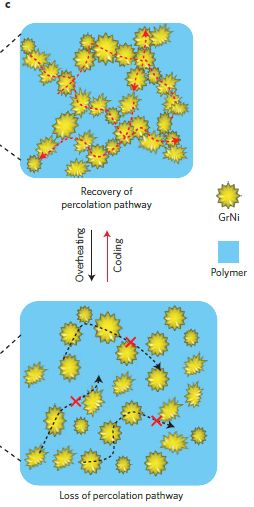

Maybe. Here is an example of what happens, as reported in a new article...

The graph shows HIV production vs time after infection, for three conditions. (Actually, the scientists measured the amount of one viral enzyme, the reverse transcriptase (RT).)

The cellular host contained the Cas9 nuclease; the three conditions differed in what the targeting guide RNA was.

In the control ("Ctrl"), there was no guide RNA. In effect, this is a normal virus infection. There is a peak of virus production at 10-12 days. For the other two conditions, there was a guide RNA targeted to a critical region of the virus. You can see that normal virus production was indeed stopped. Testing two different guides, targeted to different critical sites, helps to show that the result is of some generality. However, keep looking, and something else happens. Ten days later, there is a burst of virus. It is as if the HIV has overcome the CRISPR, and finally made virus. This is Figure 2A from the article.

That's the basic observation. Given some time, HIV seems to overcome CRISPR. The rest of the article explores why this happened.

The short version of the story is that, while most events repairing the Cas9 cut inactivated the virus, a few made a virus that could replicate. Those viruses, by the way, were no longer susceptible to the original guide RNAs.
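Why would a few rare escape events matter so much? A toy calculation shows how a tiny fraction of viable, resistant repair products can produce a delayed burst. This Python sketch is not the model from the article; the growth factor, cutting efficiency, and fraction of viable repairs are made-up numbers chosen only to show the shape of the effect.

```python
def simulate(generations, growth=4.0, cut_efficiency=0.9, viable_repair=0.001, start=1000.0):
    """Toy model: return (susceptible, escaped) virus amounts after each generation.

    All parameters are hypothetical. Each generation, every genome makes
    `growth` progeny; Cas9 cuts a fraction `cut_efficiency` of susceptible
    progeny; most repairs inactivate the virus, but a fraction
    `viable_repair` yields a viable virus the guide no longer recognizes.
    """
    susceptible, escaped = start, 0.0
    history = []
    for _ in range(generations):
        progeny = susceptible * growth
        cut = progeny * cut_efficiency
        susceptible = progeny - cut  # uncut genomes remain susceptible
        escaped = escaped * growth + cut * viable_repair  # escapes grow unchecked
        history.append((susceptible, escaped))
    return history


if __name__ == "__main__":
    for generation, (s, e) in enumerate(simulate(6), start=1):
        print(f"gen {generation}: total {s + e:8.1f} (escape variants {e:8.1f})")
```

The total falls at first, because almost every cut kills the virus, then rebounds as the rare escapes take over: the same qualitative shape as the late burst in the figure.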

What's the big message? CRISPR is a new tool, and we are still learning about it. For some uses of CRISPR, we carefully test what the result is before putting the resulting cells into a live organism. That helps protect against undesired events such as seen here. For direct use of CRISPR in organisms, we will need to think carefully about what unexpected consequences might happen.

News stories:

* HIV overcomes CRISPR gene-editing attack -- Virus can quickly develop mutations that resist attack by DNA-shearing enzymes. (E Callaway, Nature News, April 7, 2016.)

* How HIV Can Escape an Experimental CRISPR Therapy. (T Lewis, The Scientist, April 7, 2016. Now archived.)

The article, which is freely available: CRISPR/Cas9-Derived Mutations Both Inhibit HIV-1 Replication and Accelerate Viral Escape. (Z Wang et al, Cell Reports 15:481, April 19, 2016.)

A CRISPR post, which includes a complete list of all Musings CRISPR posts... CRISPR: an overview (February 15, 2015).

Next HIV post: Should we make antibodies to HIV in cows? (November 14, 2017).

My page for Biotechnology in the News (BITN) -- Other topics has a section on HIV. It includes a list of related posts.

April 15, 2016

You've probably heard the terms. Someone says they are a "morning person"; another is a "night person". Are these real characteristics? Are they determined, even in part, by our genes?

A new article reports finding some genes that may be associated with being a morning person. It's an interesting story. As usual, it is very preliminary.

The article is from a company that does "personal genomics", namely 23andMe. You send the company some cells and some money; they test your DNA, and send you back a report. Companies such as 23andMe collect vast amounts of human genome information. They also ask their customers to provide some information, so they are in a position to see if there is any correlation between certain genome sequences and certain characteristics.

Analysis of the company database suggests there is a correlation between certain sequences and being a morning person.

Here is how the results are presented...

The x-axis is the human genome, laid out on one line. It is labeled by chromosome number.

The y-axis is -log10(p), where p is the probability value from the statistical test; the higher the number, the more likely there is an association, according to the statistics. There is a horizontal line at 8; that corresponds to a p-value of 10^-8. Values above that line (with lower p) are suggested to be significant; the cutoff is somewhat arbitrary, but based on experience. Points (genome sequences) with those higher values are shown in red. The type of analysis here is called a GWAS, or genome-wide association study; it is a common type of study in this age of vast genome libraries. The type of graph above is called a Manhattan plot. Why? Because it reminds some people of the Manhattan skyline. This is Figure 1 from the article.

That's it. There are several red points, above the cutoff line. There appears to be a correlation between having these genome sequences and being a morning person.
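The y-axis transformation behind a Manhattan plot is simple, and a short Python sketch makes the threshold arithmetic concrete. The variant names and p-values below are invented for illustration; the default cutoff follows the 10^-8 line described above, though many GWAS use 5 × 10^-8 instead.

```python
import math


def manhattan_height(p_value):
    """Height of a point on a Manhattan plot: -log10(p). Smaller p -> taller point."""
    if not 0 < p_value <= 1:
        raise ValueError("p-value must be in (0, 1]")
    return -math.log10(p_value)


def genome_wide_hits(results, threshold_p=1e-8):
    """Return (variant, height) for variants whose p-value beats the threshold.

    'Above the line' on the plot is the same as p < threshold_p.
    """
    return [(name, manhattan_height(p)) for name, p in results if p < threshold_p]


if __name__ == "__main__":
    # Hypothetical variants and p-values, not data from the article.
    results = [("variant_a", 3e-12), ("variant_b", 0.02), ("variant_c", 5e-9)]
    print("line height:", manhattan_height(1e-8))
    for name, height in genome_wide_hits(results):
        print(name, round(height, 2))
```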

Now what? Each of those candidates needs to be tested, to see if the association is real. If so, what is the mechanism? Some of them will prove to be false positives, but some may prove interesting. That's the nature of GWASes; they can only offer hints, but they are very good at doing that.

There is a little more information than that, but be careful about reading too much into it at this point. The scientists can look at the regions of the genome where those candidate sequences occur. Sometimes we know a little about the genetic region, sometimes not. For some of these candidates, there is already some reason to suspect they have some relationship to sleep or to circadian rhythms.

News stories:

* Genetic links to being a 'morning person', says 23andMe study. (B Czub, BioNews, February 8, 2016.) (This story says that morning people are more likely to sleep soundly; they got that backwards.)

* Can Your DNA Determine If You're a Morning Person or Night Owl? (GEN, February 3, 2016. Now archived.)

The article, which is freely available: GWAS of 89,283 individuals identifies genetic variants associated with self-reporting of being a morning person. (Y Hu et al, Nature Communications 7:10448, February 2, 2016.)

The principle behind what companies such as 23andMe do is sound. There is some controversy about the information they send back to the customers, and the company has been challenged by the FDA. The issue is not the hard facts, but how much interpretation they do, and how much "medical advice" they offer. At least the better of the companies do good science, and are trying to learn how to build an acceptable business based on personal genomics.

The work here is a research activity of the company, not something they are reporting to the customers.

* * * * *

A recent post on sleep and circadian rhythms: How caffeine interferes with sleep (December 11, 2015). It mentions the idea of morning people.

Also...

* Daylight savings time: night-owls have more difficulty adapting (August 1, 2021).

* Evening light: how it affects our sleep (July 30, 2019).

* Circadian rhythms and class time? (June 10, 2018).

* What if a lion came into your hotel room while you slept? (July 20, 2016).

* Sleepy teenagers (July 23, 2010).

More circadian rhythms: Why growing sunflowers face the east each morning (November 8, 2016).

A post about personalized medicine... Personalized medicine: Getting your genes checked (October 27, 2009). This includes an extensive list of related posts.

Previous GWAS post... A gene that reduces the chance of successful pregnancy: is it advantageous? (May 18, 2015).

More from 23andMe: Ear lobe genetics: more complicated than you thought (March 23, 2018).

There is more about genomes and sequencing on my page Biotechnology in the News (BITN) - DNA and the genome. It includes an extensive list of related Musings posts.

April 12, 2016

A short note about a story that can largely speak for itself.

A new report provides data on the percentage of women members in national academies of science around the world. In addition to providing the basic numbers, there is discussion of what is behind the numbers. There are comparisons with the percentage of women in science, and discussions of what is being done. It's all interesting, but there are many questions you might raise.

News story: Women under-represented in world's science academies -- Fewer than half of academies have policies in place to boost gender equality in membership. (E Gibney, Nature News, February 29, 2016.)

The announcement of the report, which links to the report itself: Women underrepresented in world science report finds. (Academy of Science of South Africa, February 29, 2016. Now archived.) The report is a 100+ page pdf file, very slick as one might expect. There are data tables, but there is more than just the numbers. If you are willing to browse the report, perhaps starting with the table of contents, you can get a lot of information from it.

The report is actually a composite, based on two surveys. The main one was carried out by the South African Academy, named above as the author of the report. The other was carried out in the Americas, by the Inter-American Network of Academies of Sciences (IANAS). IANAS notes how the gender balance in the US National Academy of Sciences has changed. In 1990, it was 4% women; in 2014, 13%. Why? Because the Academy made a conscious effort to admit more women members. In the intervening years, the percentage of women admitted each year has varied between 8% and 31%; that has led to the gradual but generally consistent increase. The IANAS report is included (in summary form, I presume) in the main report, starting on page 75 of the pdf file. The numbers I quoted here are from data on pages 79-80.

* * * * *

Also see: The man who established the (US) National Academy of Sciences (February 12, 2016).

My page for Biotechnology in the News (BITN) -- Other topics includes a section on Ethical and social issues; the nature of science. It includes a list of related Musings posts.

April 11, 2016

Making ethanol from biomass is a well-known process. However, hydrocarbons are generally considered a better transportation fuel than ethanol.

A recent article addresses how to make hydrocarbon fuels from ethanol. It raises some interesting issues.

The following figure shows a key result...

|

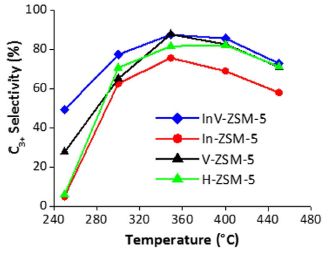

The general nature of the experiment is that ethanol is treated under conditions to convert it to hydrocarbons.

For convenience, the scientists divide the hydrocarbon products, a complex mixture, into two types, depending on the number of carbon atoms per molecule: C2 and C3+. C2 includes ethylene, a well-known product of simply dehydrating the ethanol molecule. C3+ refers to all larger hydrocarbons (with three or more C atoms). It is a simplification, but for the current context... the more C3+, the better. |

|

The graph shows the percentage of the C3+ products (y-axis) vs the temperature of treatment (x-axis). The various curves are for various catalysts. The first observation is that all the curves have the same general shape: the percentage of C3+ products increases with temperature (T), up to around 360 °C. It then declines some with higher T. Next, we see that one curve shows the best results. The curve with blue diamonds is highest, or very nearly so, at all T. In fact, one key finding from the work is that the catalyst used for the blue-curve test is the best catalyst so far. Some chemistry detail... All the catalysts have ZSM-5 in the name. That refers to a class of zeolites. The front part of the name identifies the added elements. The best catalyst is the InV one -- which is better than In or V alone. The InV catalyst contains both indium and vanadium; it is a heterobimetallic catalyst, as identified in the article title. This is the right-hand side of Figure 2 from the article. | |

The results above show that a new catalyst helps in making a larger hydrocarbon product, which is useful for transportation fuels.
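The C2 vs C3+ bookkeeping used for the y-axis can be written out in a few lines of Python. This is a sketch under assumptions: the product amounts are hypothetical, and it assumes they are already on a common basis (the post does not say whether the article's percentages are by carbon, mass, or moles).

```python
from typing import Iterable, Tuple


def percent_c3_plus(products: Iterable[Tuple[str, int, float]]) -> float:
    """Percent of the hydrocarbon product with three or more carbons.

    Each product is (name, carbon_count, amount); amounts must share a basis.
    """
    products = list(products)
    total = sum(amount for _, _, amount in products)
    c3_plus = sum(amount for _, carbons, amount in products if carbons >= 3)
    return 100.0 * c3_plus / total


if __name__ == "__main__":
    # Hypothetical product mixture, not data from the article.
    mixture = [("ethylene", 2, 20.0), ("propylene", 3, 30.0), ("toluene", 7, 50.0)]
    print(percent_c3_plus(mixture))
```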

The scientists also address the mechanism of the reaction. It is well known that ethanol can be dehydrated to make ethylene. In chemical terms... CH3CH2OH --> H2C=CH2 + H2O. In that process, the H of the alcohol group goes to the water, and does not end up in the hydrocarbon product.

In the current work, the scientists test this mechanism, by using alcohol with the isotope deuterium (D) replacing ordinary hydrogen in the alcohol group. To their surprise, the D got incorporated into the hydrocarbon products. This suggests that dehydration is not the first step in their process. The result has implications for how development proceeds, but for now, it is rather murky what is going on.
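Why is the deuterium result informative? Both possible fates of the D balance the atoms equally well; only the location of the label distinguishes them. The Python sketch below, using hypothetical helper functions that handle only simple formulas without parentheses, checks atom balance for CH3CH2OD under the simple dehydration route (D ends up in the water) and under an alternative in which D ends up in the hydrocarbon.

```python
import re
from collections import Counter


def atoms(formula):
    """Count atoms in a simple formula such as 'CH3CH2OD' (no parentheses)."""
    counts = Counter()
    for element, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[element] += int(number) if number else 1
    return counts


def balanced(reactants, products):
    """True if both sides contain the same atoms, counting D separately from H."""
    left = sum((atoms(f) for f in reactants), Counter())
    right = sum((atoms(f) for f in products), Counter())
    return left == right


def label_in_hydrocarbon(products, label="D"):
    """True if any carbon-containing product carries the isotopic label."""
    return any(a["C"] > 0 and a[label] > 0 for a in map(atoms, products))


if __name__ == "__main__":
    ethanol_d = "CH3CH2OD"  # ethanol with D in the alcohol group
    simple_dehydration = ["C2H4", "HDO"]  # D leaves with the water
    alternative = ["C2H3D", "H2O"]  # D retained in the hydrocarbon
    for products in (simple_dehydration, alternative):
        print(products, balanced([ethanol_d], products), label_in_hydrocarbon(products))
```

Both cases pass the balance check; only the second puts D in the hydrocarbon, which is the kind of outcome the article reports.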

News stories:

* Energy-efficient reaction drives biofuel conversion technology. (Science Daily, November 3, 2015.)

* ORNL team discovers mechanism behind direct ethanol-to-hydrocarbon conversion; implications for energy efficiency and cost of upgrading. (Green Car Congress, November 4, 2015.)

The article, which is freely available: Heterobimetallic Zeolite, InV-ZSM-5, Enables Efficient Conversion of Biomass Derived Ethanol to Renewable Hydrocarbons. (C K Narula et al, Scientific Reports 5:16039, November 3, 2015.)

Some of the authors are involved in a start-up company that is working to develop the process described here.

A post about catalyst development, in the context of biomass: Turning lignin into a useful product (April 11, 2015).

Another post about zeolites: Upsalite: a novel porous material (September 6, 2013). Zeolite is a term for a broad group of chemicals; there is no connection between the functions in the two zeolite posts.

More: A better catalytic sponge: degrading plastics, and more (August 11, 2020).

More vanadium: A better membrane for vanadium-based flow batteries (March 11, 2022).

There is more about energy issues on my page Internet Resources for Organic and Biochemistry under Energy resources. It includes a list of some related Musings posts.

April 9, 2016

Watch... video (23 seconds; no sound). Pay attention to the water droplet on the left; it is about one millimeter across. (This video is also included with the news story listed below.)

What's happening? A water droplet is jumping up and down. Why? and what is powering it? Ah, that's what makes this interesting. It is spontaneous; there is no external power. The conditions are adjusted so that the interaction between the water droplet and the surface is very weak; then, the thermal energy of the water is enough to allow the droplet to escape. It rises, only to fall due to gravity -- and so forth. Exactly why the droplet trampolines -- jumps higher and higher -- was a puzzle for the scientists to work out.

This work was reported in a recent article. How did the scientists achieve the conditions that allow this trampolining behavior? The first key step is that the surface is extremely hydrophobic; it is called superhydrophobic. You may recall that hydrophobic things avoid water. The surfaces used here do so to an extreme degree. That is the basis of making the interaction very weak. The scientists then lower the pressure, thus making it easier for the water droplet to escape.

You may wonder... Is the phenomenon here related to evaporation? Yes, indeed, but there is an important difference. Evaporation is about individual molecules; the trampolining reported here is about water droplets -- big enough to see by eye. Evaporation is one part of the story.

Why is this of interest? Well, it's a new finding; who knows what people will make of it. One goal that the scientists have in mind is de-icing of airplane wings. Using superhydrophobic wing coatings to reduce the tendency of water to stick could be a good step. Now, can they get this to work without having to reduce the pressure?

News story: Trampolining water droplets. (Nanowerk News, November 4, 2015.) Includes two movies, one of which is noted at the top of this post.

* News story accompanying the article: Materials science: Droplets leap into action. (D Vollmer & H-J Butt, Nature 527:41, November 5, 2015.)

* The article: Spontaneous droplet trampolining on rigid superhydrophobic surfaces. (T M Schutzius et al, Nature 527:82, November 5, 2015.) There are additional movie files posted with the article as Supplementary information; they should be freely available, regardless of your access to the article. The first one is quite good, but you need to work through it slowly.

More about hydrophobic materials...

* Disease transmission by sneezing -- in wheat (July 29, 2019).

* A superhydrophobic fly -- that can survive in highly alkaline water (February 25, 2018).

* A biodegradable agent for herding oil slicks (September 18, 2015).

* Electronic devices that can work under water (November 7, 2011).

More droplets: What determines the size of liquid droplets from a sprayer? (September 21, 2018).

April 8, 2016

Here is the armadillo:

A fossil glyptodont.

This is reduced from a figure on a web page from the American Museum of Natural History: Glyptodonts.

If you don't know how big a beetle is, go check the Volkswagen web site.

Glyptodonts were armored animals that lived over many millions of years in South America; they probably became extinct about 10,000 years ago. The largest ones fit in with the age of megafauna. The fossils have been known for nearly two centuries; Charles Darwin may have been one of the first to note them. It has long been suspected that the glyptodonts were closely related to armadillos, but classifying fossils is always hard.

A new article reports recovery of DNA from a glyptodont fossil about 12,000 years old. Sequencing showed that glyptodonts were indeed armadillos.

News stories, which will let you see the tail:

* Extinct glyptodonts really were gigantic armadillos, ancient DNA shows. (Phys.org, February 22, 2016.)

* Researchers Sequence Mitochondrial Genome of Glyptodont. (N Anderson, Sci.News, February 22, 2016.)

The article: The phylogenetic affinities of the extinct glyptodonts. (F Delsuc et al, Current Biology 26:R155, February 22, 2016.) The article itself, only two pages, is largely sequencing and its analysis; as is typical of genome papers, it is not easy reading. You can look at the family tree, if you want.

Other posts about armadillos...

* An armadillo's distress call (October 15, 2019).

* Leprosy: the armadillo connection (May 14, 2011).

* Twins (April 30, 2009).

Among many posts on beetles... How to preserve dead mice so they stay fresh and edible (January 18, 2019).

There is more about genomes and sequencing on my page Biotechnology in the News (BITN) - DNA and the genome. It includes an extensive list of related Musings posts.

April 6, 2016

A recent article offers some intriguing science about a topic with two layers of politics. We note it briefly.

The rare earth elements (REE) are a group of about 17 metals, mostly in the lanthanoid row of the periodic table. They are hard to separate, and used to be considered rather obscure -- until people began to find uses for them. Now, our modern high-tech society depends on the REE -- and they are in short supply.

The new article shows that byproducts of coal mining contain significant amounts of REE. Further, it shows that a simple extraction method is quite effective at recovering them. (The geology of coal varies. The work here focuses on coal fields in the eastern United States.)

The extraction method is the heart of the new article. The method is called ion exchange. Briefly, the REE ore is washed with a solution containing lots of ions; inexpensive and non-toxic ammonium sulfate, (NH4)2SO4, works fine. The ions in the solution exchange with the desired metal ions. This works because the REE ions are apparently simply bound to the surface of the ore material, so they are easily exchanged. A benefit of the coal byproduct material is that it is already finely ground; the process occurs mainly at the surface, and the smaller the particles the better.

Ion exchange is well known, and has in fact been used for REE. What's new here is the application to coal byproducts.
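The exchange itself is charge bookkeeping: each trivalent REE ion released from the surface is replaced by three ammonium ions, and each formula unit of ammonium sulfate supplies two. The Python sketch below computes the stoichiometric minimum under that assumption (most REE are trivalent, though cerium can also be +4). It is an idealization, not the article's procedure; in practice an excess of salt is typically used to push the exchange equilibrium.

```python
AMMONIUM_SULFATE_G_PER_MOL = 132.14  # (NH4)2SO4
NH4_PER_FORMULA_UNIT = 2


def min_ammonium_sulfate(ree_mol, ree_charge=3):
    """Minimum (mol, g) of (NH4)2SO4 to replace ree_mol of REE ions by NH4+.

    One NH4+ (charge +1) is needed per unit of REE charge.
    """
    nh4_needed = ree_mol * ree_charge
    salt_mol = nh4_needed / NH4_PER_FORMULA_UNIT
    return salt_mol, salt_mol * AMMONIUM_SULFATE_G_PER_MOL


if __name__ == "__main__":
    mol, grams = min_ammonium_sulfate(1.0)
    print(f"{mol} mol, about {grams:.0f} g of ammonium sulfate per mol of REE3+")
```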

So, with some optimism, perhaps the work opens up a new supply of REE. The scientists don't claim to have a practical economic process at this point, but they think it can be done. New supplies of REE would, in general, be welcomed.

The politics? Well, first there is the issue of using coal. Then, there is the issue of the world supply of REE currently being dominated by one country. We won't go into those political issues here, but they will inevitably be part of the conversation if the new REE process begins to be considered seriously.

News story: Extracting rare-earth elements from coal could soon be economical in US. (Science Daily, February 2, 2016.)

The article: A Study on Removal of Rare Earth Elements from U.S. Coal Byproducts by Ion Exchange. (P L Rozelle et al, Metallurgical and Materials Transactions E 3E:6, March 2016.)

Another approach: Briefly noted... Extracting rare earth elements from coal fly ash: use of various supercritical solvents (April 1, 2023).

Previous post on a REE: Penidiella and dysprosium (September 11, 2015).

A post that notes issues in the use of coal... Electric cars and pollution (April 5, 2011).

More mining: Role of biological processing in the formation of a uranium ore (June 30, 2017).

This post is listed on my page Introductory Chemistry Internet resources in the section Lanthanoids and actinoids.

April 5, 2016

Is that a good idea? It might be if you don't need the implant anymore. It might be better than having a second surgery to remove it.

A new article reports such a device -- an implant that will disappear. It follows on much work learning what happens to materials in the body.

Here is a test...

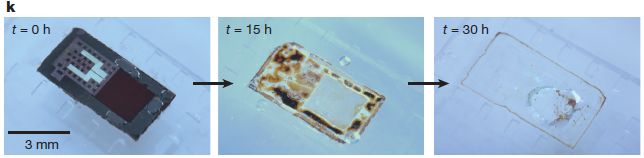

In this test, the device was put into a buffer solution at pH 12, and observed over 30 hours.

You can see what the device looks like initially in the left-hand frame (t = 0 h). You can see that it has substantially disappeared by 30 hours (right-hand frame). This is Figure 1k from the article. The device is a pressure sensor, intended to monitor pressure within the brain following an injury.

The test shown above establishes the principle of a device that can disappear, but it is quite artificial: an accelerated test, chosen because it is fast. The lab may be able to relate such results to natural conditions.

Here is some testing under more relevant conditions...

In this test, individual materials used to make devices were incubated in artificial cerebrospinal fluid (ACSF) at 37 °C.

The y-axis reports h/h0, the relative thickness of the material, over time (x-axis). The ratio starts at 1, by definition, and declines to zero when the material is gone. You can see that each material tested "disappears" over the time course of the observations. That's the main point.

The materials are: Si NMs: silicon nanomembranes; np-Si: nanoporous Si; Mg foil: magnesium foil; SiO2: silicon dioxide. This is Figure S16 from the "Supplementary Information" accompanying the article.

Most of the article is about the performance of the devices, in rats. Not surprisingly, they perform well. The more interesting part of the work, perhaps, is the ability of the material to disappear, in a safe manner. The materials used have been tested before. The two graphs shown above are examples of data on device stability from the new article.
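One simple way to summarize a curve like the h/h0 plot is to fit a straight line and extrapolate to zero thickness. The Python sketch below does an ordinary least-squares fit; the measurements are hypothetical, and real dissolution need not be linear (the rate can change as the material thins), so treat the estimate as rough.

```python
def linear_fit(times, values):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, mean_v - slope * mean_t


def time_to_zero(times, ratios):
    """Extrapolate h/h0 measurements to the time at which h/h0 reaches 0."""
    slope, intercept = linear_fit(times, ratios)
    if slope >= 0:
        raise ValueError("thickness is not decreasing; cannot extrapolate")
    return -intercept / slope


if __name__ == "__main__":
    # Hypothetical measurements (time in days, h/h0), not data from the article.
    days = [0, 2, 4, 6]
    ratios = [1.0, 0.75, 0.5, 0.25]
    print(f"estimated time to disappear: {time_to_zero(days, ratios):.1f} days")
```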

In the title I used the term biodegradable. That term is questionable, if you think about how we commonly use it; I use it loosely to mean "degrades in the body". The authors use the term bioresorption, which may be fine if you understand it -- and if that is what happens. In fact, it's not entirely clear what happens to all the material, except that it "disappears" in the body. Don't worry much about the details of how for now.

The story is that we are closer to having devices that can be implanted in the body, even in the brain, to take measurements, and which will somehow disappear without ill effect when no longer needed.

News story: Tiny electronic implants monitor brain injury, then melt away -- Eliminate the need for additional surgery to remove monitors and reduce risk of infection and hemorrhage. (Kurzweil, January 19, 2016.)

The article: Bioresorbable silicon electronic sensors for the brain. (S-K Kang et al, Nature 530:71, February 4, 2016.)

More on electronics that can disappear when no longer needed...

* Using wood-based material for making biodegradable computers (July 21, 2015).

* Silk-clothed electronic devices that disappear when you are done with them (October 19, 2012). Earlier work from the same lab.

My page for Biotechnology in the News (BITN) -- Other topics includes a section on Brain (autism, schizophrenia). It includes a list of brain-related posts.

April 3, 2016

A recent Musings post was about the biodegradation of polystyrene plastic [link at the end].

A new article reports the isolation of bacteria that can degrade another plastic: a common polyester, poly(ethylene terephthalate) (PET).

How did the scientists find such bacteria? They isolated bacteria from PET debris, and screened them to find those that could "live on" the plastic -- use it as their main carbon and energy source. It's actually a rather traditional approach to finding bacteria with a specific metabolic ability.

Degradation of PET isn't entirely new; others have found fungi that can degrade it. However, the newly isolated bacteria do it much better; under lab conditions they can actually completely degrade the plastic over several weeks.

In one sense, PET shouldn't be hard to degrade. After all, the main linkages between its subunits are ester bonds, a type of bond that is common and easy to hydrolyze. However, the physical form of PET is about as uninviting as you can get. Bacteria are not good at using knives and forks, or even chewing things. Usually.

An interesting question will be how the bacterial enzymes gain hold, so they can effectively digest the plastic. There is precedent for such special chewing processes in bacteria, for example, in some that degrade cellulose. Maybe something like that happens here.

Another interesting question is on the "pure science" side. The bacteria use two enzymes to degrade PET. What is their origin? Analysis of these enzymes suggests they are quite specialized for using PET, and quite distinct from general esterases. It seems likely that these enzymes have evolved as a response to the presence of PET, which first appeared about 70 years ago. What might we be able to produce in the lab using artificial selection -- now knowing the general form of the solution?

There is another interesting possibility for how this story could play out. The basic finding is that the new bacteria can degrade PET. To what? To its monomers, ethylene glycol and terephthalic acid. What then? The bacteria eat the monomers as an energy source (just as common bacteria eat sugar). But what if we could block the process so that the bacteria simply break down the plastic and release the monomers? Those monomers could then be collected, and used to make new plastic. That could move the plastic toward being a sustainable product. There are questions we would need to think about, but modifying the degradation so that the monomers accumulate is probably easy in this case. It's an intriguing possibility.

Caution... All this work on biodegradation of plastics is at the earliest stages of research. As research, it is interesting. It may or may not be possible to develop practical processes from any of them. These discoveries should not be used to justify release of plastic waste into the environment. For now, for the most part, there is effectively no natural biodegradation.
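The idea of recovering monomers rests on simple stoichiometry: hydrolyzing the two ester bonds of each PET repeat unit, C10H8O4, with two water molecules gives one terephthalic acid (C8H6O4) and one ethylene glycol (C2H6O2). The Python sketch below checks the mass balance and estimates the ideal yield from 1 kg of PET; it assumes pure PET and complete hydrolysis, and ignores chain ends.

```python
import re

ATOMIC_MASS = {"C": 12.011, "H": 1.008, "O": 15.999}


def molar_mass(formula):
    """Molar mass (g/mol) of a simple formula like 'C8H6O4' (no parentheses)."""
    total = 0.0
    for element, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_MASS[element] * (int(number) if number else 1)
    return total


PET_UNIT, WATER = "C10H8O4", "H2O"
TPA, EG = "C8H6O4", "C2H6O2"  # terephthalic acid, ethylene glycol


def monomers_from_pet(pet_grams):
    """Ideal grams of (terephthalic acid, ethylene glycol) from complete hydrolysis."""
    units = pet_grams / molar_mass(PET_UNIT)
    return units * molar_mass(TPA), units * molar_mass(EG)


if __name__ == "__main__":
    left = molar_mass(PET_UNIT) + 2 * molar_mass(WATER)
    right = molar_mass(TPA) + molar_mass(EG)
    print(f"mass balance: {left:.3f} vs {right:.3f} g/mol")
    tpa, eg = monomers_from_pet(1000.0)
    print(f"from 1 kg PET: {tpa:.0f} g terephthalic acid, {eg:.0f} g ethylene glycol")
```

The ideal yield is about 865 g of terephthalic acid and 323 g of ethylene glycol; the total exceeds 1 kg because the water used in hydrolysis is incorporated into the monomers.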

News story: Ideonella sakaiensis: Newly-Discovered Bacterium Can Break Down, Metabolize Plastic. (S Prostak, Sci.News, March 11, 2016.)

* News story accompanying the article: Microbiology: Feeding on plastic. (U T Bornscheuer, Science 351:1154, March 11, 2016.)

* The article: A bacterium that degrades and assimilates poly(ethylene terephthalate). (S Yoshida et al, Science 351:1196, March 11, 2016.)

Background post on biodegradation of plastic: Polystyrene foam for dinner? (October 19, 2015). Links to more. You might wonder if this process could be one arm of a sustainable process. Good question. So far we have no idea what the actual biochemical process is. However, it is quite likely that it damages the monomer units. The prediction -- and it is just that -- is based on the nature of the specific plastic.

Follow-up on current post: Follow-up: bacterial degradation of PET plastic (April 25, 2018).

There is a section on my page Internet Resources for Organic and Biochemistry for Carboxylic acids, etc. Esters are an example of the carboxylic acid derivatives. The section lists a good resource for polymers. It includes a list of some related Musings posts.

This post is noted on my page Unusual microbes.

A broad view of plastics: History of plastic -- by the numbers (October 23, 2017).

April 2, 2016

42 meters per second.

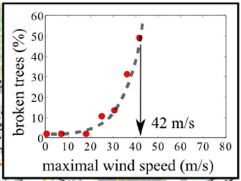

Here are some data, reported in a new article. The data are based on a major storm, called Klaus, in France in 2009.

The figure shows the percentage of trees that were broken (y-axis) as a function of wind speed (x-axis).

You can see that the percentage of broken trees is low at low wind speed. It then rises -- with increasing steepness -- as the wind speed increases. The midpoint of the curve is at about 42 m/s (about 150 km/h, or 94 miles per hour). This is the inset from Figure 2b in the article.

The scientists were intrigued by the seemingly simple curve. After all, trees vary in both diameter and height, and there are different kinds of wood structures.
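To put 42 m/s in familiar units, and to show what a curve with a midpoint there looks like, here is a short Python sketch. The logistic (S-shaped) function and its width parameter are assumptions for illustration only; they are not the function the article fitted to the storm data.

```python
import math

MS_TO_KMH = 3.6
MS_TO_MPH = 3600 / 1609.344  # one mile is 1609.344 m


def broken_fraction(wind_ms, midpoint_ms=42.0, width_ms=4.0):
    """Hypothetical logistic curve: fraction of trees broken at a given wind speed."""
    return 1.0 / (1.0 + math.exp(-(wind_ms - midpoint_ms) / width_ms))


if __name__ == "__main__":
    print(f"42 m/s = {42 * MS_TO_KMH:.0f} km/h = {42 * MS_TO_MPH:.0f} mph")
    for speed in (30, 36, 42, 48, 54):
        print(speed, "m/s:", round(broken_fraction(speed), 3))
```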

They then did some experimental work in the lab, measuring the breaking point of logs; this let them measure the effects of diameter and height. Most real trees, it seems, are in the range where these effects tend to cancel out. That is, most real trees have similar sensitivity to breaking.

What does this mean? I wonder... Perhaps it means that trees have adapted to the environment over the ages. They survive common winds well, but not extreme winds. If this is so, then it might be that similar work in other places would yield different critical values. That is testable.

Don't go away from this with just "the answer". (Admittedly, there is some appeal to "The answer is 42.") It's a nice story of how some good observations in the field can lead to interesting exploratory lab science. And maybe to more.

Why does this matter, beyond being fun? Wood strength matters. Climate change will probably lead to more severe storms. Can we understand storm damage better? Further, mankind uses wood, in part for its strength.

This is not a new field of inquiry. The reference list goes back over 500 years, to a work by L da Vinci [ref 13]. In fact, the reference list includes several items dating back to the 18th century or earlier, some from familiar names. Remember Hooke's Law?

News stories:

* Trees break at fixed wind speed, irrespective of size or species. (I Randall, Physics World, February 10, 2016.)

* Oddly enough, all trees regardless of size break at the same wind speed. (T Puiu, ZME Science, February 9, 2016.)

The article: Critical wind speed at which trees break. (E Virot et al, Physical Review E 93:023001, February 2, 2016.) Check Google Scholar for a copy.

Posts about wind include...

* Atmospheric rivers and wind (May 9, 2017).

* What is the proper length for eyelashes -- and why? (March 16, 2015).

* How rocks travel (November 14, 2014).

Posts about trees include...

* The quality of citizen science: the SOD Blitz (September 28, 2015).

* More from the artificial forest with artificial trees (August 31, 2015).

* Why do koalas hug trees? (June 13, 2014).

More about wood and such:

* The strongest bio-material? (May 30, 2018).

* Making wood stronger (March 19, 2018).

March 30, 2016

Evidence from Brazil suggests that Zika virus can lead to microcephaly in children born to mothers who had Zika infections during pregnancy. Oddly, there is little evidence for an association between Zika and microcephaly except for the current Brazil outbreak.

Why might we have such a discrepancy? One type of explanation suggests that the main reason is simply lack of data. The other type of explanation suggests that there is something special about Brazil.

We now have an article on a recent outbreak of Zika in French Polynesia (e.g. Tahiti). Scientists have gone through the health records carefully; they now provide a thorough analysis.

The outbreak lasted seven months; about 2/3 of the population became infected.

The incidence of microcephaly was best explained with a model with the following features:

* Background rate of microcephaly: 2 per 10,000 babies.

* Rate of microcephaly when the mother was infected during the first trimester: 95 per 10,000 women. That is about 1%. (Note that this is expressed a little differently than the first one; it shouldn't make much difference.)

* For infection during other trimesters, the data is inconclusive.
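The two rates above can be compared in two ways: as a ratio (relative risk) and as a difference (excess risk). This Python sketch does the arithmetic with the model's numbers, treating both rates as per 10,000 births; as the text notes, the second is actually expressed per 10,000 women, so the comparison is approximate.

```python
BACKGROUND_PER_10K = 2.0  # background rate of microcephaly
FIRST_TRIMESTER_PER_10K = 95.0  # rate after first-trimester infection


def per_10k_to_percent(rate_per_10k):
    """Convert a rate per 10,000 to a percentage."""
    return rate_per_10k / 100.0


relative_risk = FIRST_TRIMESTER_PER_10K / BACKGROUND_PER_10K
excess_per_10k = FIRST_TRIMESTER_PER_10K - BACKGROUND_PER_10K

if __name__ == "__main__":
    print(f"relative risk: about {relative_risk:.1f}x")
    print(f"excess risk: {excess_per_10k:.0f} per 10,000 "
          f"({per_10k_to_percent(excess_per_10k):.2f}%)")
```

The relative risk is nearly 48-fold, while the absolute risk stays below 1%; both framings are correct and answer different questions.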

That's all interesting. It is the kind of analysis we have wanted. It would seem to provide good support for a connection between the virus and microcephaly, outside Brazil.

The main problem with the analysis is that it is based on a total of eight cases. It is a reminder that we still have only limited data about Zika.

Many estimates of the frequency of microcephaly from Brazil are far higher than that given here. Much of the data from Brazil should be taken as preliminary; there are reasons it may be an over-count. Thus we cannot claim to have completely solved the problem presented at the start -- only to have made a useful start with a nice piece of work.

News story: French Polynesia study gauges Zika microcephaly risk in early pregnancy. (L Schnirring, CIDRAP, March 15, 2016.) Good overview.

* Commentary accompanying the article: Microcephaly and Zika virus infection. (L C Rodrigues, Lancet 387:2070, May 21, 2016.)

* The article: Association between Zika virus and microcephaly in French Polynesia, 2013-15: a retrospective study. (S Cauchemez et al, Lancet 387:2125, May 21, 2016.)

Previous post on Zika: Zika virus can infect and inhibit neural progenitor cells (March 14, 2016).

Zika is mentioned in the post How long is a yawn? (December 16, 2016).

There is a section on my page Biotechnology in the News (BITN) -- Other topics on Zika. It includes a list of Musings posts on Zika. (This is the second.)

March 28, 2016

A brain-computer interface (BCI) allows a person to control mechanical operations using their thoughts. During training, the computer learns what brain signals mean. It can then act on the user's thoughts.

The principle of the BCI is now well-established. Musings has noted an example of a basic BCI [link at the end], as well as other examples of using the information content of brain waves.

However, BCI devices do not work very well -- yet. The dream of a disabled person being able to carry out activities by thinking about them is not yet fulfilled. One of the problems is that the device is not very robust, and requires frequent re-calibration.

A new article takes a useful step: re-calibration now occurs continually, during normal use of the device.

The following figure shows some results.


The general plan here is that quadriplegic typists, using a BCI to control a virtual (on-screen) keyboard with their thoughts, typed at their own pace. The graphs show their typing speed vs time.

The key variable is whether or not the new "self-calibration" software is used. We'll come back to that in a moment.

Data is shown for two participants. Data in the left and right sides is for persons T6 and T7, respectively, as labeled at the top. The y-axis is a measure of typing speed. It is labeled CSPM, which stands for correct selections per minute. You can think of it as correct keystrokes per minute. (With the computer system used here, a single keystroke may result in various numbers of characters.)

Let's look at some results for T6 (left side). In frame A (top), there are some green bars across the top. These are for parts of a single session. You can see that typing speed is maintained more or less steady during the "green" session. The other two sets of colored bars are for two other sessions. Again, the key observation is that typing speed is substantially constant during each session. (It is not clear what the different shadings mean in the figure. But I am rather sure the main idea noted here is ok.)

Now, look at frame C (bottom). It's also for T6, shown with black bars. In this case, typing speed decreases over time. Why? Because the software "innovations" were turned off. That is, comparison of the results in frame A, with new software doing continual re-calibration, and frame C, without it, shows the effect of the calibration software.

The right side (frames B and D) shows similar results with person T7. However, there is one interesting twist. In the lower frame (black bars), the typing speed quickly dropped to zero with the calibration software turned off. The scientists turned it back on, and the typing speed soon returned to a normal range, as shown by the later bars. (Those bars are colored to show that the software was on.)

This is Figure 5 from the article.

The best typing speeds seen here are 2-3 times the best previously reported. Importantly, they can be sustained, without outside intervention (from lab staff).

The nature of the continual re-calibration is not particularly novel. It's an extension of the original calibration, just based on data collected while the person is doing real typing. What's important is that they have implemented it, and that it helps.
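The article's re-calibration is described here only in general terms: the original calibration, extended with data collected during real typing. Here is a toy Python sketch of that general idea; it is not the authors' method. Everything in it is a hypothetical stand-in: a one-dimensional signal, a straight-line decoder, a slow drift added to the recorded signal, and labels taken directly from the true intent (a real system would presumably have to infer what the user meant from their actual typing).

```python
from collections import deque


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = w*x + b; returns (w, b)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    w = sxy / sxx
    return w, my - w * mx


class SelfCalibratingDecoder:
    """Hypothetical 1-D linear decoder: recorded signal x -> intended output y.

    It starts from an initial calibration and, whenever observe() is called,
    refits on a sliding window of the most recent samples.
    """

    def __init__(self, calib_x, calib_y, window=50):
        self.xs = deque(calib_x, maxlen=window)
        self.ys = deque(calib_y, maxlen=window)
        self.w, self.b = fit_line(self.xs, self.ys)

    def decode(self, x):
        return self.w * x + self.b

    def observe(self, x, inferred_intent):
        """Add one sample from real use and refit (the continual re-calibration)."""
        self.xs.append(x)
        self.ys.append(inferred_intent)
        self.w, self.b = fit_line(self.xs, self.ys)


def simulate(adaptive, steps=300, drift_per_step=0.01):
    """Mean decoding error over the last 30 steps, with a slowly drifting signal."""
    targets = [(-1.0, 0.0, 1.0)[i % 3] for i in range(steps)]
    calib = [(-1.0, 0.0, 1.0)[i % 3] for i in range(30)]
    decoder = SelfCalibratingDecoder(calib, calib)  # no drift during calibration
    errors = []
    for t, intent in enumerate(targets):
        x = intent + drift_per_step * t  # recorded signal drifts away from calibration
        errors.append(abs(decoder.decode(x) - intent))
        if adaptive:
            # Stand-in: the true intent is used as the label; a real system must infer it.
            decoder.observe(x, intent)
    return sum(errors[-30:]) / 30


print(f"fixed decoder error:    {simulate(adaptive=False):.2f}")
print(f"adaptive decoder error: {simulate(adaptive=True):.2f}")
```

The design choice is the sliding window: refitting on recent samples lets the decoder follow slow changes in the signal, at the cost of forgetting older calibration data. In this toy, the fixed decoder's error grows with the drift while the adaptive one stays small, loosely like the contrast between frames A and C in the figure.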

News story: Self-calibration enhances BrainGate ease, reliability. (Science Daily, November 11, 2015.)

The article: Virtual typing by people with tetraplegia using a self-calibrating intracortical brain-computer interface. (B Jarosiewicz et al, Science Translational Medicine 7:313ra179, November 11, 2015.) Check Google Scholar for a copy available from the authors. Caution... it is not easy reading.

More on BCI:

* Brain-computer interface -- without invasive electrodes (December 28, 2016).

* Brain-computer interface: Paralyzed patients control robotic arm by their thoughts (June 16, 2012). From the same lab as the current post.

My page for Biotechnology in the News (BITN) -- Other topics includes a section on Brain (autism, schizophrenia). It includes a list of brain-related posts.

March 26, 2016

A familiar example of a hydrogen bond is that between two molecules in liquid water; such bonds are responsible for some of the remarkable properties of water. (And very similar hydrogen bonds are responsible for how information is stored in DNA and for some aspects of the structures of proteins.)

Here is how we might draw such a hydrogen bond, in simple text:

H-O-Hδ+ --- δ-OH2

We have two water molecules there; they are shown differently to facilitate lining them up for a hydrogen bond. The dashed line is the hydrogen bond between the two molecules (intermolecular). We've also shown the basis for the bond: the two atoms have small opposite charges, and thus attract. (The δ, the lower-case Greek letter "delta", is usually interpreted as meaning "slightly".) Why do those atoms have slight charges? O atoms hold electrons more tightly than H atoms do; we say that the O atom is more electronegative.

In general, hydrogen bonds occur when an H is between two atoms that are highly electronegative (and quite small).
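The polarity argument above comes down to comparing electronegativities. Here is a minimal Python sketch of that comparison, using standard Pauling electronegativity values (these numbers are textbook values, not from the article). It looks at only one pair of atoms at a time, so it says nothing about an H shared between two atoms.

```python
# Pauling electronegativities (standard textbook values).
PAULING = {"H": 2.20, "B": 2.04, "C": 2.55, "O": 3.44}


def bond_polarity(a: str, b: str) -> tuple[str, str]:
    """Partial charges for a simple two-atom bond a-b.

    The more electronegative atom pulls the shared electrons toward itself
    and becomes slightly negative (δ-); its partner becomes δ+.
    """
    ea, eb = PAULING[a], PAULING[b]
    if ea == eb:
        return ("0", "0")
    return ("δ-", "δ+") if ea > eb else ("δ+", "δ-")


print("O-H:", bond_polarity("O", "H"))  # O is δ-, H is δ+: H can be a hydrogen-bond donor
print("B-H:", bond_polarity("B", "H"))  # the simple pairwise rule makes H δ-, not δ+
```

For O-H it puts δ+ on the H, as in the water example. For an ordinary B-H bond the same pairwise rule predicts the opposite, with H slightly negative.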

Here is another hydrogen bond, as reported in a new article.


The dashed line in the figure shows a hydrogen bond between an H atom in diborane, B2H6, and the side of a benzene ring.

That's different. Let's deal with the benzene ring first. That aspect is actually not new. The side of a benzene ring has a slight negative charge; think about those π electrons. It's the other end of this hydrogen bond that is quite novel. It is an H attached to a B; in fact it is an H attached to 2 B atoms. B? 2 B? B is not a highly electronegative atom. In fact, it is slightly more electropositive (less electronegative) than H. One might expect a B-H bond to be polarized Bδ+-δ-H.


Why, then, is this H δ+? Because there are two B atoms pulling electrons away from the H. That in itself makes compounds such as this unusual; the whole field of B-H chemistry is full of things that came as quite a surprise given our basic understanding of bonding.

This is from the "graphical abstract" accompanying the article at the journal web site.

That's the idea. What do the authors do? They do theoretical calculations and experimental measurements. Together they support the story above: an H bonded to two B atoms can be δ+, and capable of serving as a hydrogen bond donor. The resulting bond is similar in strength and length to a common H bond between water molecules.

News story: New type of hydrogen bond discovered. (D Bradley, Chemistry World (RSC), March 9, 2016.)

The article: B-H...π Interaction: A New Type of Nonclassical Hydrogen Bonding. (X Zhang et al, Journal of the American Chemical Society 138:4334, April 6, 2016.)

As noted briefly above, boron chemistry gets quite fascinating. Those who think that every chemical bond contains two shared electrons will not be able to make sense of the diborane structure shown above. Hint... The five bonds in the middle, between the two B atoms, contain a total of four electrons.
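The hint can be checked by counting electrons. A short Python sketch, assuming the usual picture of diborane: four ordinary two-electron B-H bonds on the outside, with everything else in the middle.

```python
# Valence electrons contributed by each atom.
VALENCE = {"B": 3, "H": 1}


def valence_electrons(counts: dict[str, int]) -> int:
    """Total valence electrons for a molecule given as {element: count}."""
    return sum(VALENCE[el] * n for el, n in counts.items())


total = valence_electrons({"B": 2, "H": 6})     # diborane, B2H6
terminal_bonds = 4                              # outer B-H bonds, two electrons each
electrons_left_for_middle = total - 2 * terminal_bonds

print(total, electrons_left_for_middle)
```

Twelve valence electrons in all; eight go to the four outer bonds, leaving four for the middle. That is far too few for five ordinary two-electron bonds.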

A post about hydrogen bonding: Life's newest DNA base pair: 5SICS-NaM (June 4, 2014).

More about boron... Paleobioboron (January 26, 2011).

More unusual bonding: How many atoms can one carbon atom bond to? (January 14, 2017).

March 23, 2016

Man has a large brain, compared to other animals (taking body size into account). However, our overall energy expenditure is about the same. It follows that we must spend less energy on some other activity in order to fuel the energy-intensive brain. A good candidate is digestion. Man has a smaller digestive system than other animals. That includes a smaller mouth and teeth.

How can we get by with less of a digestive system? By eating food that is more easily digested. It has been proposed that the invention of fire was important in this regard. Cooked meat is more easily digested than raw meat. Moving toward cooked meat would have allowed ancient hominids to divert energy resources away from gut toward brain.

A new article offers another possibility.

To get an idea of the main results from the new work, we look at what is in the mouths of the participants.

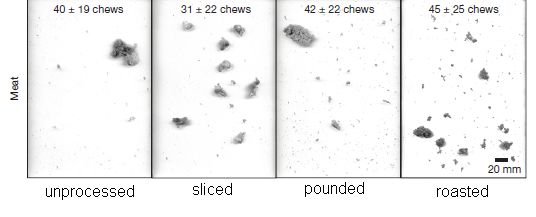

|

In this experiment, the (human) participants were given a piece of meat to chew on. Goat meat. (Meat from modern domestic meat animals is much more tender than wild meat. Apparently, raw goat meat is quite difficult to chew.) The meat was processed various ways before giving it to the participants. They chewed the meat until they were ready to swallow; when to swallow was each participant's own choice.

At the time chosen for swallowing, a sample of the mouth contents was laid out -- and photographed. The four photos above are examples of the results, one for each meat treatment condition, as labeled at the bottom. Qualitatively... The photo at the left shows that the basic raw meat sample -- unprocessed -- is still largely one big chunk, even after an average of 40 chews (see top). In contrast, chewed samples of both sliced meat and roasted meat were substantially broken down to smaller pieces. (That happened faster with sliced meat than with roasted meat.) There is also a fourth sample, in which the meat was pounded. As judged by the photos above, this didn't have much effect. Pounding was more relevant to the other part of the study, on eating root vegetables.

This is the lower part of Figure 1 from the article. I have added the labels at the bottom.

That's it. Slicing meat, as well as cooking it, makes meat easier to chew, and thus presumably easier to digest. It is a clever lab experiment.

So, which was it? Which innovation for improving meat played the key role in allowing humans to develop larger brains -- if indeed that is the correct model? The work here does not address that; it merely offers the possibilities. What actually happened is some historical fact, and requires some historical evidence to solve.

The authors suggest that the tools for slicing meat were available earlier than the tools for cooking it. But it's not at all conclusive.

If some ancient hominids had tried using their stone knives on their meat, would they have realized any benefit? The results above show that slicing made the meat easier to chew, so they might have liked it even if they did not measure energy expenditure.

From this work, it becomes plausible that learning to slice meat contributed to the development of human intelligence -- and to the development of a smaller mouth. It is now time for the archeologists to get more facts -- but that will not be easy.

News stories:

* How sliced meat drove human evolution. (L Wade, Science magazine, March 9, 2016.)

* The benefits of food processing: Processing food before eating likely played key role in human evolution. (Science Daily, March 9, 2016.)

The article: Impact of meat and Lower Palaeolithic food processing techniques on chewing in humans. (K D Zink & D E Lieberman, Nature 531:500, March 24, 2016.)

More about the history of meat-eating...

* Carnivory in human ancestors: when did it start? (April 2, 2022).

* Did Lucy butcher a cow? (February 11, 2011).

More meat: Growing meat without an animal? (April 11, 2018).

More about the brain size-gut size problem:

* The metabolic rate of humans vs the great apes: some data (August 1, 2016). This post challenges the assumption that the overall energy expenditure of humans is at the same level as the other apes.

* Fish with bigger brains may be smarter, but ... (January 25, 2013). This is more directly relevant to the current post than you might guess.

More about brain size: A possible genetic cause for the large human brain (March 25, 2017).

My page for Biotechnology in the News (BITN) -- Other topics includes a section on Brain (autism, schizophrenia). It includes a list of brain-related posts.

More about chewing: A robot that can chew gum (March 30, 2021).

More about goats: Q or Beware of goats bearing infections or It's one health. (February 20, 2010).

Also see: Can we distinguish monkey tools and human tools? (April 17, 2023).

March 21, 2016

It has been a huge story over the last year. The water supply in the US city of Flint, Michigan, has a very high level of lead, as well as numerous other problems. The incident began when the city switched the water supply, and apparently neither planned carefully nor monitored the water supply for problems.