
Musings: January - April 2018 (archive)

Musings is an informal newsletter mainly highlighting recent science. It is intended as both fun and instructive. Items are posted a few times each week. See the Introduction, listed below, for more information.

If you got here from a search engine... Do a simple text search of this page to find your topic. Searches for a single word (or root) are most likely to work.

Introduction (separate page).

This page:

2018 (January-April)

April 30

April 25

April 18

April 11

April 4

March 28

March 21

March 14

March 7

February 28

February 21

February 14

February 7

January 31

January 24

January 17

January 10

January 3



Archive items may be edited, to condense them a bit or to update links. Some links may require a subscription for full access, but I try to provide at least one useful open source for most items.

Please let me know of any broken links you find -- on my Musings pages or any of my web pages. Personal reports are often the first way I find out about such a problem.

April 30, 2018

Sudden infant death: a genetic factor affecting breathing?

April 30, 2018

Sometimes a baby goes to sleep -- and dies. It's called sudden infant death syndrome (SIDS), or, casually, crib death. In places with generally low levels of infant infection, it may be the leading cause of infant death.

There is no warning, and little explanation. It is probably respiratory failure, but why? Efforts to make sure babies sleep on their backs, and are not buried under things that might obstruct breathing, have led to a decrease in the rate of SIDS, but the condition is still largely mysterious.

A new article uncovers a genetic condition that may contribute to SIDS for some babies. It's an interesting clue, and it may even lead to a treatment. It is also a very small piece of the story.

The scientists did genome sequencing for a group of babies who had died of SIDS, and for a group of healthy controls. They focused on regions suspected of having relevant genes. The results for one particular gene, called SCN4A, stood out. Of the babies who died of SIDS, 1.4% carried a mutation that altered the function of this gene. None of the controls carried such mutations. We'll skip the details of the numbers here, but the difference was statistically significant. Further, the relevant mutations are all considered extremely rare, as judged by genome databases.

What is this gene? It encodes a particular type of ion channel, the skeletal muscle voltage-gated sodium channel NaV1.4, which is needed for the function of skeletal muscles, including the respiratory muscles.

Previous work had shown that some babies who die of SIDS have an ion channel mutation that affects heart rhythm.

The following figure shows an example of the effect of one of the mutations, based on work in a lab model.

The test here measures the ion channels in lab cells that have been modified to have the mutant gene of interest. The graphs show the electrical current as a function of time. Time is a stand-in here for voltage. The voltage is increased over time, and the resulting current is measured.

The left frame is for the wild type ion channel. The right frame is for one of the mutant ion channels found in a baby who had died from SIDS. It's clear that the mutant channel is different. The spike in current at about 1 ms represents the activation of the channel. The mutant channel doesn't activate well.

The details vary for each mutant channel studied. What is common is that, for each mutation finally counted as contributing, there was a distinct change in ion channel performance.

This is Figure 2A from the article.


Overall... A small number of babies who died from SIDS have a mutation that affects their breathing muscles. Suspicious, isn't it? But remember that such mutations are found in only 1.4% of the cases, and no causal link has been established. It may be a clue, but it is only that for now.

As an example of what might be happening... It may be that the mutation weakens the breathing system, making the baby more vulnerable to a stress.

There are drugs that can modulate such ion channels. Might they be useful in preventing SIDS? Again, it can only be a lead for now -- but leads are a good place to start.

News stories:

* Rare Gene Variant Tied to SIDS -- Genes controlling breathing muscle function could be important. (M Walker, MedPage Today, March 29, 2018.) Link is now to Internet Archive.

* Potential genetic link in sudden infant death syndrome identified. (Science Daily, March 28, 2018.)

Both of the following are freely available.

* "Comment" accompanying the article: Skeletal muscle channelopathy: a new risk for sudden infant death syndrome. (S C Cannon, Lancet 391:1457, April 14, 2018.)

* The article: Dysfunction of NaV1.4, a skeletal muscle voltage-gated sodium channel, in sudden infant death syndrome: a case-control study. (R Männikkö et al, Lancet 391:1483, April 14, 2018.)

Previous posts on SIDS: none.

More SIDS: A bio-marker for SIDS? (June 27, 2022).

A post that notes the issue of child mortality: Ten Great Public Health Achievements, 2001-2010 (June 26, 2011).

Previous post on voltage-gated sodium channels: A long worm with a novel toxin (April 28, 2018). Immediately below.

A long worm with a novel toxin

April 28, 2018

Imagine you are walking by a building, and a worm crawls out of a window on the third floor. It reaches down and bites you -- while still holding on to its third-floor perch.

Now imagine a similar scenario, but the worm is on the 15th floor.

We have a new article about the toxin produced by a worm, but it's the worm that is getting the attention. The special feature of the worm is noted in the title of the article, and in the title of all the news stories I saw.

The worm is the bootlace worm, Lineus longissimus. It's a type of ribbon worm, a group that has not been studied much. It's very long. In fact, it is the longest known animal. The longest specimen known is about 55 meters (about 180 feet). That's nearly twice the length of the longest known whale. It's long enough to get you from the 15th floor. It's about a centimeter wide -- ribbon-like, indeed.

The report of a 55 m worm goes back over a century. I don't know how reliable it is. More typical are worms 10 m or so. Long enough to get you from the 3rd floor.

These are marine worms. They don't live in tall buildings. And they don't attack humans. I think. The opening of this post was to establish a sense of scale, not their ecology.

The toxin? This worm makes a toxin that can kill medium-sized arthropods, such as crabs. In the current work, the scientists isolated the toxin, determined its structure, and explored its function. They also looked for related toxins from other worms of the group.

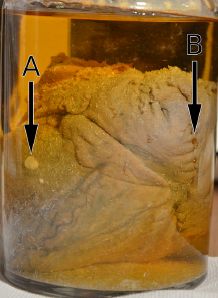

Here are the worm and the toxin. They are shown here at about the same size.

Panels: the worm; the toxin.

The picture of the worm is from the Phys.org news story. No scale is given. We might guess that the worm is about a centimeter wide and a few meters long.

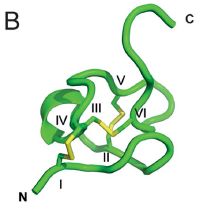

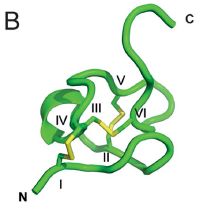

The toxin structure is from Figure 4B of the article. It's a protein -- actually a 31-amino acid peptide. It's shown here as a computer-generated ribbon, one common way to show protein structures. This small peptide is probably about a nanometer across.

In the toxin structure, N and C mark the amino and carboxyl termini. The Roman numerals mark cysteine residues that pair off to form disulfide bonds (shown in yellow).

The article does have a picture of the animal (Figure 1A), but it's partly folded up. (One of the news stories has a picture of the worm completely folded up into a ball.)


Is this toxin of interest? Maybe. The scientists show that it is very effective against ion channels in the nervous system of arthropods. It has much less effect on mammalian ion channels. Thus it -- and related peptide toxins found in related worms -- might be considered as the basis for development of novel insecticides. Perhaps this is worth exploring further. At some point, it might be possible to talk about the toxin without noting that it is from the world's longest animal.

News stories:

* Bootlace Worm: Earth's Longest Animal Produces Powerful Toxin. (Sci.news, March 27, 2018.)

* TIL: The World's Longest Animal Is Over 50 Meters Long and It's A Worm! ("trumpman", steemit, March 23, 2018.) A blog post on a site I had not seen before. Useful story, with a couple of worm videos.

* Potential insecticide discovered in Earth's longest animal. (Phys.org, March 23, 2018.)

The article, which is freely available: Peptide ion channel toxins from the bootlace worm, the longest animal on Earth. (E Jacobsson et al, Scientific Reports, 8:4596, March 22, 2018.) It's a very readable article, with some discussion of the worms, and considerable discussion of neurotoxins from a wide range of sources.

Previous post featuring the longest of something: The longest C-C bond (April 17, 2018).

Previous post about a worm: Why you should not eat larb: A story of trichinellosis -- locally (March 11, 2018).

More on novel insecticides:

* A case of horizontal gene transfer from plant to insect; exploiting it for insect control (April 18, 2021).

* Alternative microbial sources of insecticidal proteins (December 9, 2016).

Next post on voltage-gated sodium channels: Sudden infant death: a genetic factor affecting breathing? (April 30, 2018). Immediately above.

Ancient DNA and the archeologists

April 27, 2018

Archaeology -- a study of ancient humans -- has a new tool. Genome sequencing is one of the revolutionary developments of recent years. A symbol, or reference point, for this revolution was the announcement of the first complete human genome sequence in 2001. Ancient DNA presents special challenges, but these have been addressed. Since 2010 scientists have sequenced the genomes of 1300 ancient humans. Half of those are from the current year -- which is still very much in progress.

A new tool and a lot of data. Not all the new data agrees with what the archeologists thought they knew.

A recent News Feature from Nature explores the role of sequencing ancient genomes in modern archeology. It's not a simple story, but it is a good story about how science progresses. Worth a browse.

News feature, which is freely available: Divided by DNA: The uneasy relationship between archaeology and ancient genomics -- Two fields in the midst of a technological revolution are struggling to reconcile their views of the past. (E Callaway, Nature News, March 28, 2018.) In print, with the title The Battle for Common Ground... Nature 555:573 (March 29, 2018).

The article has a graphic showing the number of ancient human genomes reported per year.

A post about the very first ancient human genome that was published: Inuk, a 4000 year old Saqqaq from Qeqertasussuk (March 1, 2010).

There is more about DNA sequencing on my page Biotechnology in the News (BITN) - DNA and the genome. It includes an extensive list of related Musings posts.

April 25, 2018

Follow-up: bacterial degradation of PET plastic

April 25, 2018

Two years ago Musings reported the isolation of a bacterial strain that could digest one type of polyester plastic, poly(ethylene terephthalate) (PET) [link at the end]. We now have a new article reporting work on the PET-degrading enzyme system from that bacterium. Among other things, the scientists report an improvement in the activity of the main enzyme. The article has been hyped in some of the news reports, but it is still of interest.

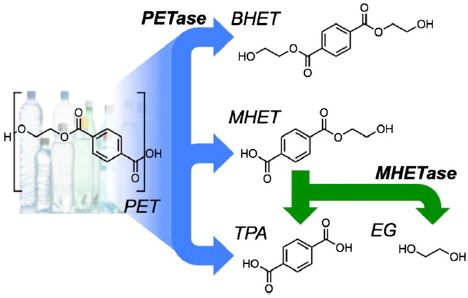

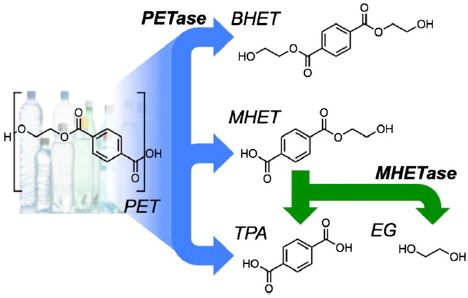

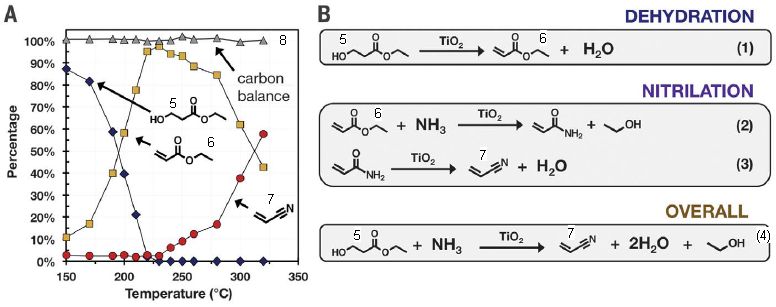

The following figure introduces the PET polymer, and shows what the bacterial enzymes do to it.

The left side shows the chemical structure of the PET polymer. It is an alternating polymer of terephthalic acid (TPA) and ethylene glycol (EG). If it is new to you, you might start at the lower right, which shows those two monomers.

The right side shows what the enzymes do to PET. Not surprisingly, the enzymes break the ester link between monomers, leading to various smaller molecules. For example, the PETase -- the enzyme that directly attacks the PET -- makes the top two molecules on the right. They consist of TPA with one or two EG attached. Those are called MHET and BHET [mono- and bis-(2-hydroxyethyl)-terephthalic acid], respectively. (MHET is the major product from the PETase enzyme.)

The second enzyme is MHETase. It breaks down MHET to the two original monomers. This is the step at the lower right.

It's of interest that the enzyme system yields the two monomers used to make the plastic. If this process can be made practical, it clearly makes useful products.

This is Figure 1 from the article.


In the new work, the scientists did various biochemical and structural studies on the two enzymes. Along the way, they made a few mutant enzymes, by changing certain amino acids that they thought might be in interesting positions. Of particular interest is one double-mutant enzyme, called W159H,S238F.

As we have noted occasionally, such a name describes the amino acid changes. For example, "W159H" means that amino acid W (tryptophan) at position 159 has been changed to an H (histidine).
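The naming convention just described is regular enough to decode mechanically. The Python sketch below is only an illustration, not anything from the article: it assumes simple substitutions written as one-letter code, position, one-letter code, separated by commas as in W159H,S238F, and it does not handle insertions, deletions, or other notations. The names `parse_mutations` and `describe` are hypothetical.

```python
import re

# Full names for the standard one-letter amino acid codes.
AMINO_ACIDS = {
    "A": "alanine", "R": "arginine", "N": "asparagine", "D": "aspartic acid",
    "C": "cysteine", "Q": "glutamine", "E": "glutamic acid", "G": "glycine",
    "H": "histidine", "I": "isoleucine", "L": "leucine", "K": "lysine",
    "M": "methionine", "F": "phenylalanine", "P": "proline", "S": "serine",
    "T": "threonine", "W": "tryptophan", "Y": "tyrosine", "V": "valine",
}

# One letter, a position number, one letter: e.g. "W159H".
_SUBSTITUTION = re.compile(r"([A-Z])(\d+)([A-Z])")


def parse_mutations(name):
    """Split a name such as 'W159H,S238F' into (original, position, new) tuples."""
    changes = []
    for part in name.split(","):
        match = _SUBSTITUTION.fullmatch(part.strip())
        if match is None:
            raise ValueError(f"not a simple substitution: {part!r}")
        original, position, new = match.groups()
        if original not in AMINO_ACIDS or new not in AMINO_ACIDS:
            raise ValueError(f"unknown amino acid code in {part!r}")
        changes.append((original, int(position), new))
    return changes


def describe(name):
    """Spell out each substitution in words."""
    return [
        f"{AMINO_ACIDS[old]} at position {pos} changed to {AMINO_ACIDS[new]}"
        for old, pos, new in parse_mutations(name)
    ]


for line in describe("W159H,S238F"):
    print(line)
```

For the double mutant, this spells out tryptophan-to-histidine at position 159 and serine-to-phenylalanine at position 238.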

The following figure shows some data for the enzymes...

The figure shows some results for how the PETase attacks PET.

There are three sets of data. The middle set is for the wild-type PETase enzyme. The set at the right is for the double mutant enzyme noted above. At the left is a buffer control, with no enzyme.

For each data set, there are three measures of enzyme activity. From the left: loss of crystallinity (green bar), and production of two products, MHET (blue-striped bar) and TPA (black-hatched bar). For crystallinity, use the y-axis scale at the left; for the products, use the scale at the right.

Look first at the results for crystallinity. There is a small loss of crystallinity even in the buffer, but it is enhanced by the enzyme. Further, the mutant enzyme leads to much more loss of crystallinity. (The plastic used here is about 15% crystalline. Thus the highest green bar shows loss of about 1/3 of the original crystallinity.)

Now look at the production of the two small-molecule products. There is none with the buffer control, but significant amounts with the enzymes. There is little difference between the two enzymes here.

This is Figure 3D from the article.
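The crystallinity numbers in the figure description are easy to misread, since the green bars show a change in crystallinity while the plastic starts out only about 15% crystalline. Here is a small sketch of the arithmetic. It assumes, as the text implies, that "about 1/3 of the original crystallinity" means a drop of roughly 5 percentage points; the function names are hypothetical.

```python
def loss_in_points(initial_pct, fraction_lost):
    """Absolute drop in crystallinity, in percentage points."""
    return initial_pct * fraction_lost


def fraction_of_original_lost(initial_pct, loss_pct_points):
    """Share of the starting crystallinity that was lost."""
    return loss_pct_points / initial_pct


initial = 15.0  # the plastic used here is about 15% crystalline
drop = loss_in_points(initial, 1 / 3)
print(f"drop: about {drop:.1f} percentage points")
print(f"remaining crystallinity: about {initial - drop:.1f}%")
print(f"check: {fraction_of_original_lost(initial, drop):.2f} of the original")
```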


From those results, it seems likely that the enzyme makes random nicks in the polymer structure. That leads to a relatively fast loss of polymer integrity, as reflected in the crystallinity. That the mutant enzyme does this better is encouraging.

By this model, appearance of small products requires two nicks close to each other. That takes more time. Clearly, the enzyme leads to the small-molecule products, but the enzyme improvement isn't reflected by this measure. Note that we have only one time point (96 hours); it would be interesting to see more kinetics.
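The nicking argument can be made a bit more concrete with a toy calculation. This is a simplified model, not one from the article. It assumes a very long chain in which each link between units is cut independently with probability p, and it counts any fragment of one or two units as a "small product". Real PET is partly crystalline and is attacked at its surface, so only the trend matters here. In this model the mean fragment length falls as 1/p, so the chain breaks up quickly, but the fraction of material released as small products grows only about as 3p² at small p, because it needs two cuts close together.

```python
def mean_fragment_length(p):
    """Average fragment length (in units) when each link is cut with probability p."""
    return 1.0 / p


def small_fraction(p, max_len=2):
    """Fraction of units released in fragments of length <= max_len.

    A unit sits in a fragment of length L when the two links bounding that
    fragment are cut (p * p) and the L - 1 links inside it are intact; the
    unit can occupy any of the L positions within such a fragment.
    """
    return sum(L * p * p * (1 - p) ** (L - 1) for L in range(1, max_len + 1))


for p in (0.001, 0.01, 0.05):
    print(f"p={p:<6} mean length={mean_fragment_length(p):7.0f}  "
          f"small fraction={small_fraction(p):.2e}")
```

This captures only the lag of small products behind the break-up of the chain; by itself it makes no prediction about why the two enzymes gave similar product levels.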

Overall, the article provides evidence for an improved enzyme. Some of the news coverage has suggested that the article provides a process for degrading PET. However, it is not yet good enough for a practical process. The authors recognize that.

A little more from the article... The authors test the enzyme on other polyester plastics. It does act on another plastic that has an aromatic ring in the monomers, a plastic that may be coming along as a replacement for PET. However, the enzyme does not act on polyesters that lack an aromatic ring.

It's time to repeat the big caution about plastics... There are diverse types of plastic -- as your local recycler will undoubtedly remind you. The work here is on one specific type. It is indeed a major plastic. If this work leads to a practical process for the degradation of PET, that could be important. But there is nothing here that is general for plastics.

News stories:

* Scientists accidentally create mutant enzyme that eats plastic bottles. (D Carrington, Guardian, April 16, 2018.)

* Research Team Engineers a Better Plastic-Degrading Enzyme. (National Renewable Energy Laboratory (NREL), April 16, 2018.) From one of the participating institutions.

* Expert reaction to enzyme to digest plastic. (Science Media Centre, April 16, 2018.) Several comments.

The article, which is freely available: Characterization and engineering of a plastic-degrading aromatic polyesterase. (H P Austin et al, PNAS 115:E4350, May 8, 2018.)

Background post reporting the PET-degrading bacterial strain: Discovery of bacteria that degrade PET plastic (April 3, 2016).

More about getting rid of plastics:

* Good enzymatic degradation of polyesters, by manufacturing the plastic with the enzymes in it (May 4, 2021).

* Turning waste plastic into fuel -- a solar-driven process? (October 9, 2018).

A recent post on plastics: A "greener" way to make acrylonitrile? (January 6, 2018).

This post is listed on my page Internet Resources for Organic and Biochemistry in the section for Carboxylic acids, etc.

Ice in your diamond?

April 23, 2018

Briefly noted...

A recent article reports evidence for finding ice inside some diamonds.

It's not ordinary ice, but rather an unusual crystal form called ice-VII. As with other inclusions found in diamonds, the ice must have gotten there when the diamond was formed -- deep down inside the Earth. More precisely, the water that formed the ice was included in the diamond upon its formation. That is, the presence of the ice in the diamond is evidence for the presence of liquid water at the site of diamond formation.

Then what? As the diamond -- and its water -- cooled, ice formed. Ice-VII only forms at extremely high pressures -- found only at depths of several hundred kilometers below the surface.

There was a recent report of finding ice-VI in diamonds. The current article is the first report of ice-VII, indicative of even higher pressures, in diamonds.

The conclusion is clear: these diamonds must have formed at great depths. And there must be water -- and aqueous chemistry -- down there. That's useful information; water in Earth's mantle is important, but our understanding of it is limited. And finding ice-VII in nature is also new; previously, ice-VII was known only from lab work.

From the title of the post, you may have expected some pictures. Sorry, no pictures. The article contains X-ray pictures to determine the nature of the inclusions, and diagrams of the pressure inside the Earth. These diamonds have a story to tell about Earth's interior, but apparently aren't anything special to look at.

News stories:

* Small Inclusions of Unique Ice in Diamonds Indicate Water Deep in Earth's Mantle. (Sci.news, March 12, 2018.)

* Scientists Just Discovered a Strange New Type of Ice Inside Deep-Earth Diamonds -- We've never seen ice-VII in nature before. (M McRae, Science Alert, March 9, 2018.)

* Diamond inclusions suggest free flowing water at boundary between upper and lower mantle. (B Yirka, Phys.org, March 9, 2018.)

The article: Ice-VII inclusions in diamonds: Evidence for aqueous fluid in Earth's deep mantle. (O Tschauner et al, Science 359:1136, March 9, 2018.)

Previous post about diamonds: The smallest radio receiver (April 4, 2017).

More diamonds: Another Solar System planet -- revealed by its diamonds? (May 22, 2018).

A recent post about ice: Should we geoengineer glaciers to reduce their melting? (April 4, 2018).

More ice:

* A new ice, with the density of liquid water (May 23, 2023).

* Why is ice slippery? (September 9, 2018).

Fracking and earthquakes: It's injection near the basement that matters

April 22, 2018

Fracking is associated with earthquakes in some places. Quake activity is usually most closely associated with the follow-up process of wastewater injection, but there may also be effects from the fracking injection itself. Background posts discuss both of these possibilities [links at the end].

The work on wastewater disposal has suggested that injection near rigid basement structures that are prone to seismic activity may be of particular concern. A new article reinforces the point.

The article is based on statistical analysis of data from the Oklahoma oil and gas fields. We note one summary figure...


The graph analyzes a large number of wastewater injection sites. Each site is classified by the seismic activity that has been observed there. This is recorded as the total annual seismic moment, in newton-meters (N·m). In particular, two sub-groups of the sites are featured: those with high seismic activity (pink) and those with low seismic activity (blue). Further, each site is at a known depth, and a known distance from the basement structure in that region.

The y-axis scale is a measure of the probability of such seismic activity. It is plotted against depth of the site in frame A, and against distance from the basement in frame B.

Look first at frame B (bottom). You can see that the pink distribution, for the sites with high seismic activity, clusters very near zero. In contrast, the blue distribution, for sites with low seismic activity, is bimodal, and tends to be away from zero. Remember, in this frame, zero means near the basement.

In contrast, frame A (top) plots the results vs actual depth (rather than depth relative to the basement). Interestingly, the low-seismic sites (blue) tend to be at shallower depths.

This is part of Figure 3 from the article.
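The seismic moment used to classify the sites is given in newton-meters, which may be less familiar than earthquake magnitude. As background (not from the article), a commonly used form of the moment magnitude scale converts a seismic moment M0 in N·m to Mw = (2/3)(log10 M0 - 9.1). A minimal sketch follows. Note that for a total annual moment summed over many quakes, the result is the magnitude of a single quake that would release the same total moment, not the size of any actual event.

```python
import math


def moment_magnitude(m0_newton_metres):
    """Moment magnitude Mw from seismic moment M0 in N·m."""
    if m0_newton_metres <= 0:
        raise ValueError("seismic moment must be positive")
    return (2.0 / 3.0) * (math.log10(m0_newton_metres) - 9.1)


def seismic_moment(mw):
    """Inverse conversion: seismic moment in N·m for a given Mw."""
    return 10 ** (1.5 * mw + 9.1)


for m0 in (1e12, 1e15, 1e18):
    print(f"M0 = {m0:.0e} N·m  ->  Mw = {moment_magnitude(m0):.2f}")
```

Because of the logarithm, each step of one unit in Mw corresponds to about 32 times more seismic moment (10^1.5).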


Overall, the work supports the notion that injection of wastewater near the basement is of special concern. The contribution of the new article is to separate the more specific measure of "distance from basement" from the more general "depth".

Volume injected is also an issue. It becomes increasingly important when injection is near the basement.

The authors note that state regulators have implemented some regulations restricting wastewater injections, based on earlier findings. Preliminary data suggests that there is now reduced seismic activity in the area, though there is too little data to be conclusive. The authors hope that their new findings will lead to improved regulations.

The story of fracking and seismic activity has been developing over several years. At first, there were anecdotal reports, with considerable skepticism. There is now general acceptance that there is a problem in certain locations. A stream of scientific studies, such as the current work, has led to some understanding of what is going on; that guides implementation of solutions. At least some regulatory agencies are now actively dealing with the issue. Of course, our current understanding is undoubtedly incomplete, and the analysis must continue.

News stories:

* Wastewater well depths linked to Oklahoma quakes. (E D O'Reilly, Axios, February 1, 2018.)

* Oklahoma's Earthquakes Strongly Linked to Wastewater Injection Depth. (Phys.org, February 1, 2018.)

The article: Oklahoma's induced seismicity strongly linked to wastewater injection depth. (T Hincks et al, Science 359:1251, March 16, 2018.)

Background posts on fracking and earthquakes include:

* Hydraulic fracturing (fracking) and earthquakes: a direct connection (February 13, 2017).

* Fracking: the earthquake connection (June 19, 2015). This is about quakes associated with wastewater disposal.

More about earthquakes...

* Earthquakes induced by human activity: oil drilling in Los Angeles (February 12, 2019).

* A significant local earthquake: identifying a contributing "cause"? (July 31, 2018).

* Detecting earthquakes using the optical fiber cabling that is already installed underground (February 28, 2018).

More fracking: Fracking by mice (November 18, 2019).

More seismic waves... How seismic waves travel through the Earth: effect of redox state (June 8, 2018).

There is more about energy issues on my page Internet Resources for Organic and Biochemistry under Energy resources. It includes a list of some related Musings posts.

Do naked mole rats get old?

April 20, 2018

Aging is not simply about time. A 40-year-old person may be in the prime of life. An 80-year-old is near the end. "The end". The term implies that there is some kind of limit -- a maximum lifespan. Aging is a physiological process near the end of that lifespan.

The following figure illustrates the idea, in a different -- but intriguing -- way.


Simplifying a bit, the figure shows the "mortality hazard" (risk of death) vs "age" for four mammals.

The purple curve near the left is for humans. The mortality hazard is low at the start, then increases dramatically.

The curve for horses is very similar.

The curve for mice is similar, too, but the increase occurs at a later age (on this scale, which we will explain below).

And then there is the green curve. It is for naked mole rats. It, too, starts low -- but this curve stays low.

This is Figure 5E from the article.


In summary, the graph shows that the death rate for three mammals rises dramatically at some point during their life. That is typical of what is found for mammals. However, according to this graph, the naked mole rat doesn't do that. It doesn't age.
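The rising death rate described above is often summarized by the Gompertz law named in some of the news stories below: the mortality hazard grows exponentially with age, h(t) = a·e^(bt). The Python sketch below contrasts that with a flat hazard (b = 0), which is roughly the pattern reported here for naked mole rats. The parameter values are illustrative assumptions, not fits to the article's data.

```python
import math


def gompertz_hazard(age, a, b):
    """Mortality hazard a * exp(b * age): baseline risk a, growing at rate b per year."""
    return a * math.exp(b * age)


def doubling_time(b):
    """Years for a Gompertz hazard to double (only meaningful for b > 0)."""
    return math.log(2) / b


def survival(age, a, b):
    """Probability of surviving from birth to `age` under a Gompertz hazard."""
    if b == 0:
        return math.exp(-a * age)  # constant hazard: simple exponential decay
    return math.exp(-(a / b) * (math.exp(b * age) - 1))


# Illustrative values only; they are not taken from the article.
A = 1e-4         # baseline hazard per year
B_AGING = 0.085  # hazard doubles about every 8 years
B_FLAT = 0.0     # hazard stays constant with age

for age in (0, 20, 40, 60, 80):
    print(f"age {age:2d}: aging hazard {gompertz_hazard(age, A, B_AGING):.2e}, "
          f"flat hazard {gompertz_hazard(age, A, B_FLAT):.2e}")
```

On a log scale, the Gompertz hazard is a straight line with slope b; a curve that "stays low", like the green one, corresponds to a slope near zero.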

Let's look at the graph scales. The y-axis is somewhat arbitrary; there are real numbers, but they are different for the different animals. What matters for the y-axis is the trend. (The numbers are seen more cleanly in the other parts of the full figure, which show the data underlying the graph above on a more conventional scale, vs simple age.)

The x-axis scale is more interesting. Note the vertical red line at 1. It is labeled Tsex; that is the age at sexual maturity. And the x-axis scale is labeled "fold Tsex". That is, the x-axis scale gives time as a multiple of the age at sexual maturity. For example, for humans and horses the death rate starts to increase at about 3 times the age of sexual maturity. Mice start to age at about 10 on this scale. What's new here is that the naked mole rats are different: they show no sign of aging even when 25 times older than the age at sexual maturity.

To help put the x-axis scale in perspective... Humans reach sexual maturity at age 16 (the authors' number) and start to "age" at about 48 years old. The exact numbers don't matter much. What matters are the big trends, and the differences between species.

Since the "age" is given as a ratio, one might wonder whether it is distorted by an odd value for the denominator. If naked mole rats reached sexual maturity early, that could lead to large numbers on the scale used here. In fact, the opposite is true. Mice and naked mole rats are both rodents, of about the same size. Mice reach sexual maturity at about 6 weeks of age; naked mole rats at 6 months. Mice die within two years. The scale shown above for naked mole rats goes out to age 12 years; there is no sign of an increasing death rate.
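The conversion between the "fold Tsex" axis and chronological age is simple multiplication. This sketch uses the ages at sexual maturity quoted in the text (16 years for humans, about 6 weeks for mice, about 6 months for naked mole rats). Horses are omitted because no value is given here, and the dictionary and function names are hypothetical.

```python
# Age at sexual maturity (Tsex) in years, using the values quoted in the text.
T_SEX_YEARS = {
    "human": 16.0,
    "mouse": 6 / 52,        # about 6 weeks
    "naked mole rat": 0.5,  # about 6 months
}


def fold_tsex_to_years(species, fold):
    """Convert an x-axis value in multiples of Tsex to age in years."""
    return fold * T_SEX_YEARS[species]


def years_to_fold_tsex(species, years):
    """Convert an age in years to multiples of Tsex."""
    return years / T_SEX_YEARS[species]


# x-axis positions mentioned in the text: onset of the rising death rate for
# humans and mice; the furthest point discussed for naked mole rats.
for species, fold in [("human", 3), ("mouse", 10), ("naked mole rat", 25)]:
    print(f"{species}: {fold} x Tsex = {fold_tsex_to_years(species, fold):.2f} years")
```

Run the other way, the authors' suggestion of no aging out to 30 years corresponds to 60 × Tsex for naked mole rats.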

So the naked mole rats don't seem to "age" out to 12 years old. More specifically, the current work says they don't have an increasing death rate out to that age. (Other work has shown a lack of other signs of aging.) That's already surprising, for a rodent only slightly larger than a mouse. What about older ages? The authors present the best data that is available, and suggest that it shows no signs of aging out to 30 years old. Unfortunately, the data is limited. Naked mole rats have only been maintained in captivity since about 1980. In nature most only live a couple of years, though some much older specimens have been reported, mainly breeding females.

That's about it. The naked mole rats have attracted considerable attention, starting with their appearance and extending to various physiological issues. We now see that they don't seem to age -- don't have an increasing death rate with chronological age -- the way we expect for mammals. Whether they age at all is not clear, but if they do, it is late, and that in itself is interesting. They have an important place in the study of aging.

News stories:

* Naked Mole Rats Defy Mortality Mathematics. (C Engelking, Discover (blog), January 29, 2018.) Now archived.

* Naked mole rat found to defy Gompertz's mortality law. (B Yirka, Phys.org, January 30, 2018.) Nice picture.

* Naked Mole Rats Break 'Law' of Aging. (R Lilleston, AARP, January 31, 2018.) Interesting source. The AARP is the American Association of Retired Persons.

The article, which is freely available: Naked mole-rat mortality rates defy Gompertzian laws by not increasing with age. (J G Ruby et al, eLife 7:e31157, January 24, 2018.) (The article is from Calico, a Google spin-off.)

A post about the low incidence of cancer in naked mole rats: A clue about cancer from the naked mole rat? (January 18, 2014). One might wonder how the effect claimed there relates to the current post. Cancer is largely a disease of aging.

A post about the human lifespan: How long can humans live? (November 29, 2016).

And its follow-up post: Follow-up: How long can humans live? (July 23, 2018).

My page for Biotechnology in the News (BITN) -- Other topics includes a section on Aging. It includes a list of related Musings posts.

April 18, 2018

The longest C-C bond

April 17, 2018

The bond between the two carbon atoms in ethane, H3C-CH3, is 1.54 Å (Ångstroms) long. That's a typical C-C single bond.

In some compounds, the C-C bond is longer; such longer bonds are typically weaker. That should seem logical: the stronger the interaction between the two atoms, the shorter the bond. In fact, the relationship between bond length and bond strength for C-C bonds seems to be approximately linear. Using the assumption of a linear relationship, one can calculate that the bond energy would be zero at 1.803 Å. It shouldn't be possible to have a C-C bond longer than that.
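The extrapolation described above amounts to fitting a straight line to bond energy versus bond length and asking where the line crosses zero. Here is a minimal sketch, assuming an ordinary least-squares fit. The data points are hypothetical, built to lie on a line that reaches zero at 1.803 Å; they are not measured bond energies.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def zero_energy_length(lengths, energies):
    """Bond length where the fitted line predicts zero bond energy."""
    intercept, slope = fit_line(lengths, energies)
    if slope >= 0:
        raise ValueError("expected bond energy to fall as bond length grows")
    return -intercept / slope


# Hypothetical points on a line that reaches zero at 1.803 Å (arbitrary energy units).
# These are NOT measured bond energies.
lengths = [1.54, 1.58, 1.62, 1.66, 1.70]
energies = [100.0 * (1.803 - r) for r in lengths]

limit = zero_energy_length(lengths, energies)
print(f"extrapolated zero-energy length: {limit:.3f} Å")
print("1.806 Å beyond the linear limit?", 1.806 > limit)
```

A real analysis would use measured lengths and energies for many compounds. The point is only that a linear fit implies a hard cutoff, which the new compound exceeds.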

In a new article, scientists report a compound with a C-C bond of 1.806 Å. That's longer than "possible", by the simple assumption of linearity. There is no measurement of the bond energy, but the compound can be stored at room temperature in ambient air. Further, it is stable at 400 K (127 °C).

Here is the compound...


A new chemical reported in the article.

Focus on the bond between the two carbon atoms labeled C1 and C2. That bond is 1.806 Å long. They measured it, by X-ray crystallography.

This is part of Figure 1 from the news story from the university. The structure is in Scheme 1 and Fig 6 of the article. "10c" (lower right) is the number for this compound in the article.


Why is the bond so long? That is what all the surrounding structure is about.

The two C atoms C1 and C2 are forced apart by the ring systems attached to them at the top. You can see that there are two simple aromatic rings on each side. But there is also a big ring between those two aromatic rings. Count the atoms... a seven-membered ring. That means the ring is not planar. And it means there is considerable interaction between the ring systems on C1 and C2. They are in an awkward "scissors" conformation, forcing C1 and C2 apart. (That's hard to tell from a simple 2D drawing, but is discussed in detail in the article.)

Further, the long bond is more or less "hiding" inside a complex shell; it is well protected from things that might react with it.

The bond discussed here is the longest C-C bond yet reported. It is longer than considered possible using the assumption of a linear relationship between bond length and strength. The second point is interesting, but not too important. There was no theoretical basis for the linear relationship, and there had already been reason to doubt it. But the claim of the longest C-C bond stands. That is what is important here, along with some explanation of what makes it possible.

The authors suggest that they may be able to make even longer C-C bonds, building on what they have learned here. Maybe even 2 Ångstroms.

And just for fun...

A crystal of that chemical 10c.

This is part of Figure 2 from the news story from the university.


News stories:

* Longest carbon-carbon bond yet pushes chemistry to its limits. (K Krämer, Chemistry World, March 16, 2018.)

* New record set for carbon-carbon single bond length. (Hokkaido University, March 9, 2018.) From the lead institution.

The article: Longest C-C Single Bond among Neutral Hydrocarbons with a Bond Length beyond 1.8 Å. (Y Ishigaki et al, Chem 4:795, April 12, 2018.)

More unusual carbon bonding: How many atoms can one carbon atom bond to? (January 14, 2017).

More about measuring bond lengths: Doing X-ray "crystallography" without crystals (September 18, 2016).

Next post featuring the longest of something: A long worm with a novel toxin (April 28, 2018).

Update December 2018...

The record reported here may have been broken -- within a year.

* News story: World record for longest carbon-carbon bond broken. (D Bradley, Chemistry World, December 5, 2018.) Links to the article. The news story raises an interesting question about what kind of bond should "count". The molecule is complex, and it is not easy to see what is going on.

Exoskeletons: focus on assisting those with "small" impairments

April 16, 2018

Exoskeletons -- in the context of human prosthetics -- are devices that provide skeletal support. Typically, they provide additional muscle function. Much of the development has focused on two applications. One is allowing people to do things beyond the normal human capability. The interest in such devices by the military is an example, and was an early impetus. The other application is for those unable to walk.

There is a class of application between those: helping those with mild but significant impairments. Helping those who walk poorly as a result of stroke or just old age could be a huge application. It's a type of application that perhaps requires less power, but more subtlety, as it must be carefully matched to each user's needs.

A recent "news feature" in The Scientist focuses on this class of exoskeleton work. It's a nice overview.

News feature: Next-Generation Exoskeletons Help Patients Move -- A robot's gentle nudge could add just the right amount of force to improve walking for patients with mobility-impairing ailments such as Parkinson's disease, multiple sclerosis, and stroke. (K Weintraub, The Scientist, February 1, 2018. In the print issue, it was on p 40, with the main title Robotic Healers.) Now archived.

A recent post on exoskeleton development: Personal optimization of an exoskeleton (September 22, 2017). The article discussed here is reference 10 of the current news feature.

Also see my page Biotechnology in the News (BITN) - Cloning and stem cells. It includes an extensive list of Musings posts in the fields of stem cells and regeneration -- and, more broadly, replacement body parts, including prosthetics.

More about stroke: Role of a receptor for HIV in stroke recovery (March 23, 2019).

Next robotics post: A robot that can assemble an Ikea chair (May 23, 2018).

Multi-organ lab "chips"

April 14, 2018

Studying miniature versions of individual organs, such as organoids, in the lab is becoming increasingly common. They may be useful model systems for studying organ function. They also may be useful for drug testing, to avoid the use of lab animals, or to test tissue from individual people.

However, an animal is more than a collection of isolated organs. The organs work together to form a complex animal. A new article explores the development of multi-organ devices, or "chips".

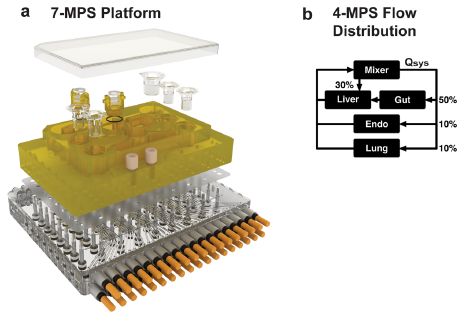

Here is the idea...

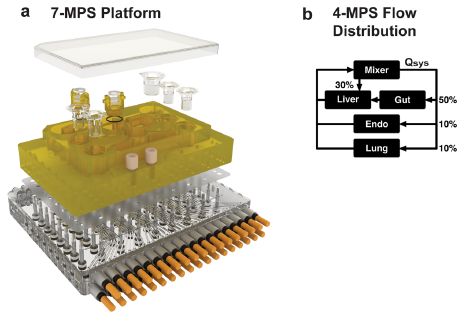

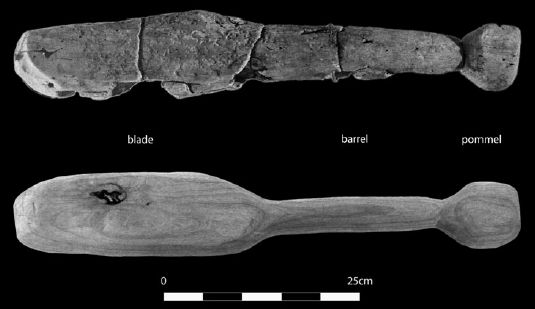

Frame a (left side) shows a device, disassembled into layers. (MPS = microphysiological system(s).) The upper layer holds several specialized cell-culture "cups". This device has seven such cups. Three of them are clear at the upper right; the others are in the middle, where they almost blend into the yellowish plastic support.

You can see that the cups are different sizes. If you think of the cups as being about one centimeter in diameter, you will get an idea of the size of the device. Each cup holds one MPS -- or "organ". There are devices with 4, 7, or 10 organs in this article.

The rest of the device is plumbing.

Frame b (right side) gives an example of what the plumbing accomplishes. This frame is for a simpler device, with only four organs. ("Endo" = endometrium.) The device is programmed to deliver fluid to the organs as shown here. The details are not important for now; the point is that fluid flows can be set. This includes flows from one organ to another.

This is part of Figure 2 from the article.


That establishes the general idea of the system. The organs are maintained separately, but interact through the plumbing.

What happens? As a start, the scientists measured one property of each organ over time. The following figure shows some results, as examples...

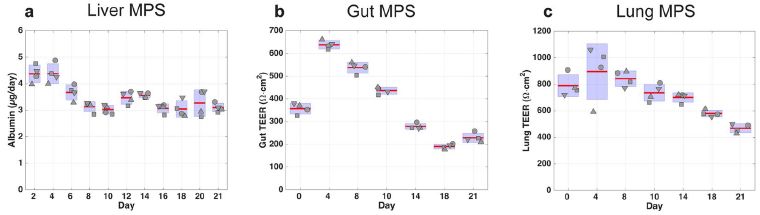

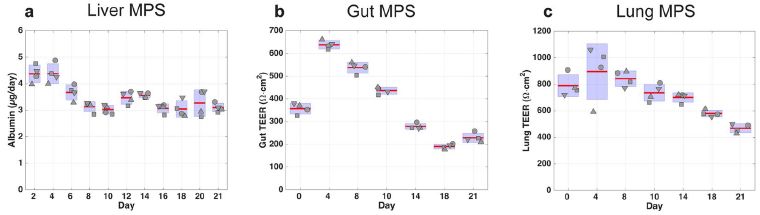

The graphs show results for three of the organs in a 7-organ device.

The details don't matter much. This is early work, and what's important here is that they are beginning to establish such systems.

In general, you can see that each organ was maintained as functional over the three weeks of measurement. The quality varied. I do note that the consistency of the liver measurement was much better here than with the 4-organ device.

For liver, the measurement is albumin production. For the others, it is the TEER = trans-epithelial electrical resistance, a measure of the quality of the surface.
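TEER rises as the cell layer becomes tighter and more intact, because ions have a harder time crossing it. It is commonly reported per unit area: subtract the resistance of a cell-free insert, then multiply by the membrane area, giving Ω·cm². The sketch below shows that common normalization. The readings, blank value, and 0.33 cm² area are hypothetical, and the article's exact measurement protocol may differ.

```python
def teer_ohm_cm2(r_measured_ohm: float, r_blank_ohm: float, area_cm2: float) -> float:
    """Unit-area TEER: (sample resistance - cell-free blank) x membrane area."""
    if area_cm2 <= 0:
        raise ValueError("membrane area must be positive")
    return (r_measured_ohm - r_blank_ohm) * area_cm2


# Hypothetical readings from one culture insert on three days (ohms)
readings = [450.0, 620.0, 780.0]
blank = 120.0  # hypothetical resistance of an insert with no cells (ohms)
area = 0.33    # hypothetical insert area (cm^2)
print([round(teer_ohm_cm2(r, blank, area), 1) for r in readings])
```

Normalizing by area lets cups of different sizes, like those in the device above, be compared on the same scale.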

The other organs in this 7-organ device were: endometrium, heart, pancreas, and brain.

The 10-organ device included all those, plus: skin, kidney, and skeletal muscle.

This is part of Figure 4 from the article. The full figure contains results for each of the seven organs in this device. The article also contains similar figures for each organ in the 4-organ and 10-organ devices.


The goal here is to show how the scientists are developing multi-organ lab chips, or "physiome-on-a-chip", with interactions between the organs. The article is complex, because the system is complex. Each "organ" has to be developed, and the multi-chip platform is a complex device integrating complex biological systems. There is considerable empirical development of operating parameters. In some tests, cells were replaced after problems occurred. Overall, it is progress. The authors say that theirs is the first such system to integrate seven organs.

It's an interesting approach; the results show that such devices are possible.

News stories. The following two stories are similar, but have quite different figures.

* DARPA-funded 'body on a chip' microfluidic system could revolutionize drug evaluation -- Linked by microfluidic channels, compact system replicates interactions of 2 million human-tissue cells in 10 "organs on chips," replacing animal testing. (Kurzweil, March 19, 2018.) Now archived.

* "Body on a chip" could improve drug evaluation -- Human tissue samples linked by microfluidic channels replicate interactions of multiple organs. (A Trafton, MIT News, March 14, 2018.) From the lead institution.

The article, which is freely available: Interconnected Microphysiological Systems for Quantitative Biology and Pharmacology Studies. (C D Edington et al, Scientific Reports 8:4530, March 14, 2018.)

Background posts include:

* Human heart organoids show ability to regenerate (May 2, 2017).

* How much would it cost to make a brain? (November 1, 2015). Considers drug testing using lab mini-brains.

* Autism in a dish? (September 4, 2015). Develops and compares lab tissue from multiple individuals.

Lab-on-a-chip -- but no biology: Using music to control a machine (October 17, 2009).

Also see: Using lab-grown organoids in medical treatment (May 3, 2021).

April 11, 2018

Growing meat without an animal?

April 11, 2018

Mankind consumes a lot of meat. Would it be possible -- or practical -- to make meat without growing animals? It's an intriguing question.

Biologists have been growing cells in lab-scale cultures for decades. And they have been learning how to grow organized multi-layer structures, sometimes called 3D cultures. In some cases, tissues grown in culture have been suitable for transplantation back into an animal. Growing meat (typically muscle tissue) under such conditions might seem to be just one more example of such an application.

A news feature-type article in the magazine of a scientific society gives a good overview of the field. It discusses the motivations for trying to make "cultured meat", and the barriers to doing so. It's worth reading as an introduction and status report.

News feature, which is freely available: Clean meat. (L Cassiday, INFORM, February 2018.) INFORM is a magazine from the American Oil Chemists' Society (AOCS). INFORM is itself an acronym, describing the science content. The article includes an extensive list of references, many of them to recent scientific articles.

You can also download the entire issue as a pdf file. Go to the INFORM archive, and scroll down to the February 2018 issue. There is a link there to download the issue.

Thanks to Borislav for sending the article.

More about cultured meat:

* Briefly noted... Cultured meat: the environmental cost (June 24, 2023).

* Briefly noted... Cultured meat -- using spinach. (May 26, 2021).

* Tuning the protein and fat content of cultured meat (February 2, 2021).

Posts about meat include...

* Why you should not eat larb: A story of trichinellosis -- locally (March 11, 2018). Most recent meat post.

* Sliced meat: implications for size of human mouth and brain? (March 23, 2016).

* The WHO report on the possible carcinogenicity of meat (December 12, 2015). It's important to note that one should not expect the nutritional aspects of cultured meat to be any different from those of natural meat. The goal of cultured meat is an alternative process, not an improved process. Of course, it would allow for targeting nutritional issues, by process or genetic modification, as later developments.

* Carnivorous algae -- that hunt large animals (October 7, 2012).

My page Biotechnology in the News (BITN) for Cloning and stem cells includes an extensive list of related Musings posts.

How frequent are volcanic eruptions that are truly catastrophic?

April 10, 2018

Most problems are small. Most earthquakes are small. Most volcanic eruptions are small. But it is the rare big ones that are potentially the most serious. We would like estimates of the chances of big events, but it can be hard to get them.

A recent article looks at the chances of catastrophic volcanic eruptions. It's interesting to see how they approached the problem. There is no basis for theoretical predictions. All we can do is to look at the historical record.

For this work, the authors define a catastrophic eruption as one of magnitude 8 or greater. That corresponds to the ejection of 1000 gigatons (Gt) of material. Such eruptions could have serious effects worldwide.
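The 1000 Gt figure matches the magnitude definition commonly used for eruption size, based on erupted mass: M = log10(mass in kg) − 7. That this is the scale used here is an assumption, though it reproduces the numbers above. A minimal sketch converting between mass and magnitude:

```python
import math

KG_PER_GIGATON = 1e12  # 1 Gt = 10^9 metric tons = 10^12 kg


def eruption_magnitude(mass_kg: float) -> float:
    """Magnitude under the assumed definition M = log10(mass in kg) - 7."""
    return math.log10(mass_kg) - 7


def erupted_mass_gt(magnitude: float) -> float:
    """Inverse of the above: erupted mass, in gigatons, for a given magnitude."""
    return 10 ** (magnitude + 7) / KG_PER_GIGATON


print(eruption_magnitude(1000 * KG_PER_GIGATON))  # the catastrophic threshold
print(erupted_mass_gt(7.5))                       # the cutoff used in the figure below
```

Each unit of magnitude is a factor of ten in mass, so an M 7.5 eruption is about 316 Gt, roughly a third of the M 8 threshold.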

The magnitude scale used here for volcanoes has nothing to do with the magnitude scale for earthquakes. (However, I suspect that the way it was scaled was intended to make it somewhat similar in result.)

An estimate of the frequency of such "super-eruptions" was published in 2004. That estimate was that such eruptions would occur every 45-714 thousand years.

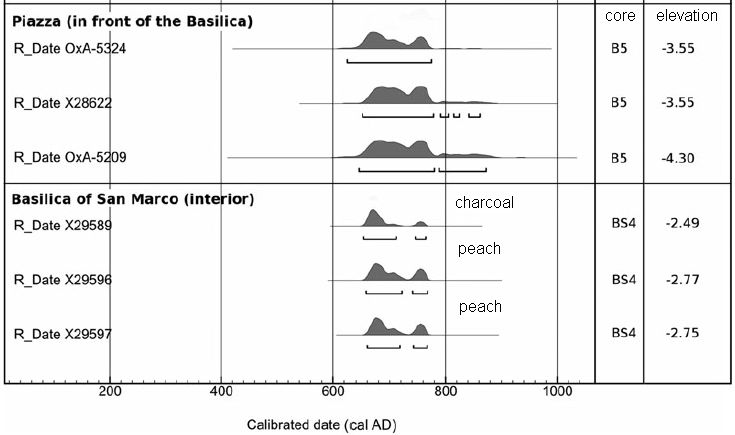

The following figure shows some of the data behind the new work...


Each frame shows the cumulative count of volcanic eruptions of the specified magnitude over time, for the last 100,000 years (100 kiloyears, or ky).

Start with the bottom frame. It is for major eruptions, those with magnitude above 7.5.

(Note that the graph does not quite correspond to the cutoff for "catastrophic" eruptions, which is M = 8. There is a reason for this, but it doesn't really matter much.)

You can see that only five such eruptions have been identified; they are labeled. Their occurrence is erratic; given their low frequency, that is of no particular significance. You can also see that there is, on average, one such eruption every 20 ky. (That's every 25 ky if you only include magnitude ≥8.)
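The average interval is simply the length of the record divided by the number of events. The sketch below computes it and also builds the kind of cumulative count plotted in the figure. The eruption ages are hypothetical placeholders, not the five labeled events.

```python
from typing import List, Tuple


def mean_interval(n_events: int, window_ky: float) -> float:
    """Average time between events (ky) over a record of length window_ky."""
    if n_events <= 0:
        raise ValueError("need at least one event")
    return window_ky / n_events


def cumulative_counts(ages_ky: List[float]) -> List[Tuple[float, int]]:
    """(age, events so far), running forward in time from the oldest event."""
    ordered = sorted(ages_ky, reverse=True)  # oldest (largest age) first
    return [(age, i + 1) for i, age in enumerate(ordered)]


print(mean_interval(5, 100))  # all five events with M > 7.5 in the last 100 ky
print(mean_interval(4, 100))  # only the four with M >= 8

hypothetical_ages = [90.0, 70.0, 50.0, 30.0, 10.0]  # ky before present (placeholders)
print(cumulative_counts(hypothetical_ages))
```

If events accumulate at a steady rate, the cumulative count rises along a straight line; a curve that steepens toward the present, as in the top frame, is what raises the question below.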

Now look at the top frame. Same idea, but for eruptions of a medium magnitude; these are much more frequent. The shape of the curve is perhaps surprising. Wouldn't one expect a linear accumulation of events over the long term? Are volcanic eruptions becoming steadily more frequent, or is something else going on?

Caution... The authors use both "a" and "y" for years, with abbreviations such as ka and ky. They make a distinction... y is for dates, a is for intervals. That is, an event might be dated to, say, 100 ky ago. And the average interval between events would be, say, 20 ka. I don't think the distinction matters much, or that there will be any confusion if you mix them up -- other than wondering why they use two symbols for the "same" thing.

This is part of Figure 2 from the article. The full figure shows more magnitude ranges. The additional frames are similar to the top frame above.


The authors suggest that the accelerating pace seen in the top frame above is due to bias in the records. Note that one big change in slope was about 40,000 years ago. This is not about recordkeeping, but about our ability to recognize old volcanic events. It's plausible that older events are harder to detect. The authors take this under-recording into account in their current analysis. It is an example of the subtleties they uncover, helping them to adjust the observed record.

When they run all the numbers, their best estimate of the average interval between catastrophic eruptions is about 17,000 years. Their estimate is a lot less than the earlier one; that's due to the greater number of events in the database, as well as to how they do the estimate. The estimated interval is getting close to the time span of recorded history. (The estimate has considerable uncertainty, of course; the 95% confidence limits are 5-48 ky.)
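One common way to turn an average interval into an everyday probability is to assume eruptions arrive at random, as a Poisson process. That assumption is made here for illustration and is not necessarily the authors' model. Under it, the chance of at least one event in t years is 1 − exp(−t/τ), where τ is the mean interval.

```python
import math


def prob_at_least_one(years: float, mean_interval_years: float) -> float:
    """P(at least one event in `years`) for a Poisson process with the given mean interval."""
    # -expm1(-x) equals 1 - exp(-x), but stays accurate when x is tiny
    return -math.expm1(-years / mean_interval_years)


tau = 17_000  # best-estimate mean interval from the article (years)
for t in (1, 100, 1_000):
    print(f"{t:>5} years: {prob_at_least_one(t, tau):.5f}")
```

With τ = 17,000 years, the chance in any one year is about 1 in 17,000; over a century it is roughly 0.6%.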

In one sense, there is nothing here that is particularly important. We have no basis for predicting such events, and the probability of one happening this year is low. Nothing in the analysis changes any of that. However, the display of data is of some interest, and it is interesting to see how the authors attempt to analyze the data. Overall, a "fun" little article.

News story: Time between world-changing volcanic super-eruptions less than previously thought. (University of Bristol, November 29, 2017.) From the University.

The article: The global magnitude-frequency relationship for large explosive volcanic eruptions. (J Rougier et al, Earth and Planetary Science Letters 482:621, January 15, 2018.)

Posts about volcanoes include:

* Rise of the Roman Empire: role of an Alaskan volcano? (July 28, 2020).

* Aerosols and clouds and cooling? (August 27, 2017).

* What caused the dinosaur extinction? Did volcanoes in India play a role? (April 13, 2015).

* VPOW (July 14, 2010).

Posts about major earthquakes include:

* Are large earthquakes occurring non-randomly? (February 10, 2012).

* The great Tonga earthquake: how many quakes were there? (September 12, 2010). Hm, could this happen for volcanoes? Would it be possible to have a great eruption, and no one notices?

More catastrophes: Could we treat COVID by driving it to an error catastrophe? (June 30, 2020).

The fetal kick

April 7, 2018

Briefly noted...

The mammalian fetus moves during development. For humans, motions start at about 10 weeks of development, and are usually evident to the mother by about 17 weeks. Fetuses that do not move normally often end up showing abnormal development; that is, fetal movements are part of development. In some sense, fetal movements are like exercise, and play a role in developing the skeleton.

It is now possible to observe fetal movements using magnetic resonance imaging (MRI) -- a procedure called cine-MRI.

A new article examines many sequences of human fetal movement. The striking feature of the article is the "movies".

The following movie file, at the journal web site, illustrates several kick sequences, from human fetuses of various ages.

Movie 2. It is an animated gif, so it loops, but is fundamentally only a few seconds. Individual kick sequences are 2-4 seconds. (The gif file itself is almost 15 MB, so be patient if you have a slow connection.)

The article contains some analysis of the data. The authors develop models that allow them to estimate the forces involved, including the force on the wall of the uterus. They show graphs of the stresses and strains over the course of pregnancy. It's pioneering work, building on the pioneering measurements. But it is also very limited at this point. The news stories introduce this aspect of the work.

There is also discussion of the implications. The article starts with an overview of what is known about fetal movements, and the possible implications of absent or aberrant movements.

"This research represents the first quantification of kick force and mechanical stress and strain due to fetal movements in the human skeleton in utero, thus advancing our understanding of the biomechanical environment of the uterus." (From the abstract.)

News stories:

* Monitoring fetal movements helps detect musculoskeletal malformations. (B Yirka, Medical Xpress, January 24, 2018.)

* First study of its kind shows how foetal strength changes over time. (C Brogan, Phys.org, February 2, 2018.)

The article, which is freely available: Stresses and strains on the human fetal skeleton during development. (S W Verbruggen et al, Journal of the Royal Society Interface 15:20170593, January 2018.)

More fetal imaging... Imaging of fetal human brains: evidence that babies born prematurely may already have brain problems (March 10, 2017).

More things uterine...

* Involvement of the non-pregnant uterus in brain function? (February 11, 2019).

* Lamb-in-a-bag (July 14, 2017).

* Cannibalism in the uterus (May 31, 2013).

Observing inside animals with an improved bioluminescence system

April 6, 2018

Imaging is an important part of modern biology. Medical imaging techniques such as CAT scans and MRI are examples; they involve some fancy technologies. But they also require that the subject stay still. What if we could image the inside of an animal as it wandered around its cage?

One approach is to use bioluminescence. Most animals are not naturally bioluminescent, but one can make lab animals that carry the gene for the light-making enzyme. Then we could just watch the animals' light emission.

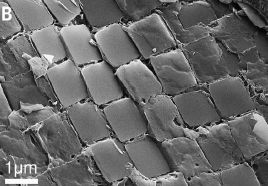

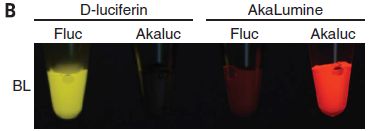

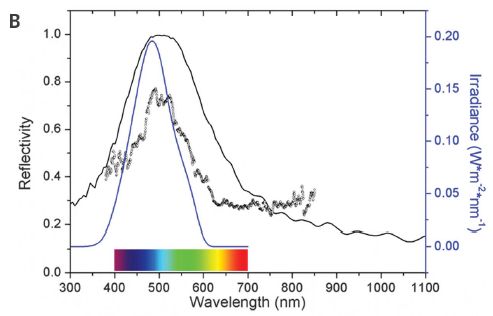

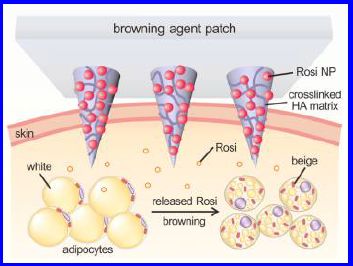

A recent article reports an interesting development that could make such imaging more practical. The following figure presents one key step.

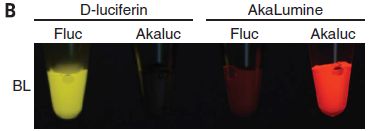

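Terms: Luciferase is an enzyme. It acts on a substrate called luciferin. The resulting reaction emits light. But caution: these are generic terms. There are many different luciferases and luciferins, from various organisms or made in the lab.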

The figure shows the bioluminescence from four systems. They have various combinations of substrate (labeled in top row) and enzyme (second row).

The first tube (at left) shows a natural system: firefly luciferase (Fluc) acting on the common luciferin.

The last tube (right) shows the system developed in the new work. It uses a new enzyme (Akaluc) and a new substrate (AkaLumine). Development of the new enzyme is the heart of the current work; the new substrate had been developed earlier.

The results show that the new system is different from the original system in two ways: brighter and redder.

Tissue transmits red light better than other colors. So the new system is better suited for use within animals, both because it is intrinsically brighter and because it emits a color that is more suitable for this use. (In fact, much of the emission is in the infrared (IR), where transmission is even better.)

The other two tubes are for hybrids between the two systems, such as old substrate with new enzyme. They show little.

BL at the left of the figure means bioluminescence.

This is part of Figure 1B from the article.


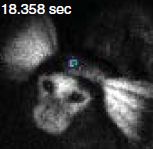

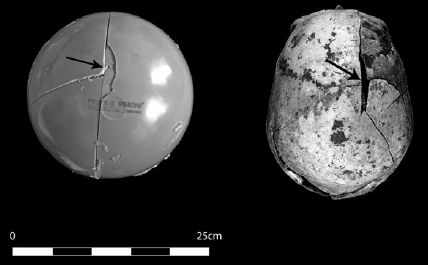

Here is an example of the use of the new system with an intact animal.

The animal was injected with a small number of cells that express the new luciferase enzyme (Akaluc). In this case, the injection was about 5 millimeters into the brain of a marmoset (a small monkey).

To prepare for a set of measurements, a solution of the luciferin was injected into the abdominal cavity. In this case, that was about a year following the injection of the cells capable of making the luciferase enzyme.


The figure shown here is actually a composite of two types of images that were obtained, using the same electronic camera. One is an ordinary optical image, showing the animal. The other is the bioluminescence. But that is not shown directly. (Remember, it is red, actually peaking in the IR.) The camera records the bioluminescence signal, processes it, and displays it with a false color that represents the intensity. That's why the red emission is represented here by blue -- denoting a medium amount of the red light. (There is a color bar in the full figure translating the color shown to the intensity.)
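False-color display replaces the measured intensity at each pixel with a color taken from a scale. A minimal sketch of that mapping follows. The five-color scale and its order are hypothetical (the real color bar is in the full figure), arranged so that a medium intensity lands on blue, as in the image described above.

```python
from typing import Sequence

# Hypothetical color scale, lowest to highest intensity (not the article's color bar).
COLOR_SCALE = ["black", "purple", "blue", "yellow", "red"]


def false_color(intensity: float, lo: float, hi: float,
                scale: Sequence[str] = COLOR_SCALE) -> str:
    """Map a raw intensity to a display color by binning it between lo and hi."""
    if hi <= lo:
        raise ValueError("hi must exceed lo")
    frac = (intensity - lo) / (hi - lo)
    frac = min(max(frac, 0.0), 1.0)                      # clip values outside the range
    index = min(int(frac * len(scale)), len(scale) - 1)  # the maximum goes in the top bin
    return scale[index]


# Hypothetical signal counts from three pixels
for counts in (50, 500, 950):
    print(counts, false_color(counts, lo=0, hi=1000))
```

The displayed color says nothing about the wavelength of the light; it only encodes how much light the camera recorded at that spot.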

Don't see the bioluminescence signal? It's a small blue circle, about the size of the letter c in the label at the top. It's on the forehead, about half way between the ears.

This is part of Figure 4D from the article.


A feature of the image above is that it was obtained on a "free" animal -- neither held down nor anesthetized. And that's the point. The method developed here can be used to observe what is going on inside an animal's body as it goes about its ordinary activity. That could be a useful tool.

Movies. In fact, the image above is a frame from a movie sequence. There are three movie files posted at the journal web site along with the article. Without getting into the details, each shows an example of light emission from the head of an animal labeled with the new bioluminescence system. The first two show mice; the third shows the marmoset seen above. I encourage you to check at least one of them. (Each is about a half minute; no sound.)

News story: In living color: seeing cells from outside the body with synthetic bioluminescence. (Phys.org, February 22, 2018.)

* News story accompanying the article: Imaging: Unnaturally aglow with a bright inner light -- A bioluminescent system enables imaging single cells deep inside small animals. (Y Nasu & R E Campbell, Science 359:868, February 23, 2018.)

* The article: Single-cell bioluminescence imaging of deep tissue in freely moving animals. (S Iwano et al, Science 359:935, February 23, 2018.)

More on bioluminescence:

* Milky seas: now observable from space (September 27, 2021).

* Xystocheir bistipita is really a Motyxia: significance for understanding bioluminescence (May 9, 2015).

There is a section of my page Internet resources: Chemistry - Miscellaneous on Chemiluminescence. It includes a list of related Musings posts.

A post that is a reminder about the importance of staying still for an MRI scan... Dog fMRI (June 8, 2012).

Another way to see what is going on inside the head of an animal is to remove the top and look inside... A microscope small enough that a mouse can wear it on its head (November 12, 2011).

April 4, 2018

Should we geoengineer glaciers to reduce their melting?

April 4, 2018

Glaciers are melting at an increased rate because of global warming. That leads to rising sea level, which can impact people near coasts around the world.

What if we tried to reduce glacier melting, by cutting off the local heat source or supporting the ice shelves that hold back glacier movement? Those are among the proposals put forward in a recent "Comment" article in Nature.

Apparently, not much thought has been given to such an approach to mitigate one aspect of global warming. The authors' goal is to put the topic on the table. If nothing else, the article is interesting in discussing how glaciers melt; in fact, the authors emphasize the importance of improving our understanding of glaciers as part of considering intervention. They have some specific proposals, and they discuss pro and con aspects.

It's an intriguing and provocative article.

"Comment" article, which is freely available: Geoengineer polar glaciers to slow sea-level rise. (J C Moore et al, Nature 555:303, March 15, 2018.)

A post about sea level changes, including the contribution from glaciers melting: Climate change and sea level (October 2, 2017).

A post about the more commonly discussed type of geoengineering, with the goal of changing the atmosphere: Geoengineering: the advantage of putting limestone in the atmosphere (January 20, 2017). Links to more.

The glaciers of particular concern here are in Greenland and Antarctica. Posts about other things from those lands include...

* Is Arctic warming leading to colder winters in the eastern United States? (May 11, 2018).

* What do microbes eat when there is nothing to eat in Antarctica? (April 2, 2018). That's the post immediately below. Links to more.

* Inuk, a 4000 year old Saqqaq from Qeqertasussuk (March 1, 2010). Links to more from the Arctic.

More ice: Ice in your diamond? (April 23, 2018).

What do microbes eat when there is nothing to eat in Antarctica?

April 2, 2018

They eat the air, according to a new article.

Antarctica is a harsh place. However, there are diverse microbial communities, with no obvious source of food. We commonly think of photosynthesis as the primary energy source for most life on Earth, with some specialized communities using chemical energy, such as that from thermal vents in the oceans. These Antarctic microbial communities seem to have extremely low photosynthesis; anyway, it's dark much of the year there.

A recent article explores the basis of life on the Antarctic desert. It uses extensive DNA sequencing -- metagenomics. And it does some biochemical testing.

Here is one of the experiments from the article...


The graph shows CO2 fixation under various conditions for soil samples from two sites in Antarctica.

Focus on the right hand set, from Adams Flat.

The four conditions are shown at the bottom, with their color code. The order of the bars in the graph is the same as the order in the key.


The first two bars (from the left) are for CO2 fixation in the dark, without or with hydrogen. You can see that CO2 fixation is higher with H2; the difference is statistically significant, as shown by the p value (and asterisks).

The next two bars are for similar conditions, but with light. That is, without and with H2 in the light. The pattern is the same as in the dark.

Now compare the data without and with light. They are very similar. (The p value at the top says they are not significantly different.)

This is Extended Data Figure 4c from the online version of the article.
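The p values and asterisks summarize whether the difference between two sets of replicate measurements is larger than chance alone would likely produce. The statistical test used in the article is not described here, so the sketch below substitutes a simple exact permutation test on the difference in means. The replicate values are hypothetical (arbitrary units), standing in for dark samples without and with H2.

```python
from itertools import combinations
from statistics import mean
from typing import Sequence


def permutation_p_value(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sided exact permutation test on the difference in group means.

    Every way of splitting the pooled values into groups of the original
    sizes is tried; p is the fraction of splits whose |mean difference| is
    at least as large as the observed one.
    """
    pooled = list(a) + list(b)
    observed = abs(mean(a) - mean(b))
    extreme = total = 0
    for idx in combinations(range(len(pooled)), len(a)):
        chosen = set(idx)
        group_a = [pooled[i] for i in chosen]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        if abs(mean(group_a) - mean(group_b)) >= observed - 1e-12:
            extreme += 1
        total += 1
    return extreme / total


# Hypothetical CO2-fixation values (arbitrary units), four replicates each
dark_no_h2 = [1.0, 1.2, 0.9, 1.1]
dark_with_h2 = [2.0, 2.3, 1.8, 2.1]
print(permutation_p_value(dark_no_h2, dark_with_h2))
```

With only four replicates per group, the smallest p value this test can give is 2/70 (about 0.03), even when the two groups do not overlap at all.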


Those results from Adams Flat suggest that CO2 fixation is stimulated by H2, but not by light. That is, the microbes are growing using chemical energy (from H2) and using CO2 as their carbon source. They use chemical energy (not light energy) to drive CO2 fixation.

You can also see that there is considerable variability. And for the other site, Robinson Ridge, there is no clear trend. (However, separation of the Robinson Ridge data by specific site suggests that one of them behaves similarly to the Adams Flat samples.)

What makes the results particularly interesting is that the experiment was done using a level of H2 typical of the ambient air. Further...

- Direct testing of H2 metabolism showed that it was consumed at that low level. (H2 consumption was found even at the lowest temperature tested: -12 °C.)

- Analysis of the DNA in the soils showed that the key enzyme needed for H2 metabolism, hydrogenase, was present. It was a type of hydrogenase considered high-affinity, able to metabolize low levels of H2.

The scientists also showed that carbon monoxide was consumed at the level found in ambient air, and that the key enzyme needed for that metabolism, carbon monoxide dehydrogenase, was present.

Bottom line... The article provides evidence that microbes on the Antarctic desert metabolize trace levels of combustible gases in the air. They use these trace gases, H2 and CO, as energy sources. Both gases are present at quite low levels, but they probably are reliable energy sources -- at least by Antarctic standards.

The bacteria use CO2 from the air as their carbon source. They use the common C-fixing enzyme Rubisco for that purpose. There is nothing unusual about this step.

The main claim is that the bacteria use the trace gases from the air for maintenance energy. Whether they use these gases for primary growth is an open question.

We should stress that the scientists have not isolated any particular organism with the claimed properties. The metagenomic studies show what genes are present in the population, with some clues about how they might be combined into chromosomes of individual bacteria. The metabolic studies are with soil samples. Thus the article offers hypotheses about what is happening, but there is more to do, and many questions remain.

The authors propose two new phyla of bacteria, based on the work: Eremiobacteraeota (desert bacterial phylum) and Dormibacteraeota (dormant bacterial phylum).

News story: Living on thin air - microbe mystery solved. (Phys.org, December 6, 2017.)

* News story accompanying the article: Microbial ecology: Energy from thin air. (D A Cowan & T P Makhalanyane, Nature 552:336, December 21, 2017.)

* The article, which is freely available: Atmospheric trace gases support primary production in Antarctic desert surface soil. (M Ji et al, Nature 552:400, December 21, 2017.)

A recent post about other bacteria that seem to be having trouble finding food: Nuclear-powered bacteria: suitable for Europa? (March 27, 2018).

Posts about Antarctica include...

* Should we geoengineer glaciers to reduce their melting? (April 4, 2018). That's the post immediately above.

* A bit of IPD -- found in Antarctica (January 13, 2015).

* IceCube finds 28 neutrinos -- from beyond the solar system (June 8, 2014). Nice picture of Antarctica -- a very different scene than the desert of the current work.

* Life in an Antarctic lake (April 22, 2013).

* How were the Gamburtsevs formed? (December 7, 2011).

This post is noted on my page Unusual microbes.

There is more about DNA sequencing on my page Biotechnology in the News (BITN) - DNA and the genome. It includes an extensive list of related Musings posts.

A blood test that detects multiple types of cancer

March 30, 2018

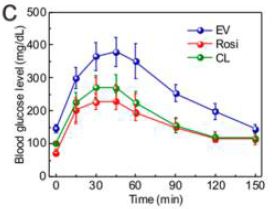

The article discussed here is complicated. It is also very interesting, and potentially important; it has received considerable news coverage. So, let's start with a summary, for perspective... The article presents a blood test for cancer -- a general test, to detect any kind of cancer. The approach is to measure many things. The results are encouraging, suggesting that such a test might be practical and useful. However, this is a very preliminary report, and much work remains to be done.

The test starts with a blood sample. The blood is tested by DNA sequencing. It is now recognized that there is a variety of DNA floating around in the blood -- including DNA from tumor cells. Recent developments in DNA sequencing allow us to find and sequence even very low levels of DNA, almost blindly. For the current use, the scientists focus on a set of genes that often carry mutations in cancers. The idea is that if the person has a cancer with a mutation in one of the common cancer genes, then such mutant cancer DNA will be found in the blood. They take the blood and use PCR to amplify any DNA that comes from such cancer genes. They then sequence the PCR-amplified DNA, which is now enriched for cancer genes.

As a preliminary step, they need to choose which cancer genes to look for. They start with databases of cancer genomes, and make a list of specific mutant sequences that are common in cancers. These are sequences worth looking for. They get a list of about 200 possible sequences.

How many should they actually use? Use too few, and they will miss some cancers. But the more genes one looks at, the more expensive the test. The following experiment explores that trade-off.

This experiment is a computer analysis, but it uses real genome data. The scientists take a database of genomes from cancer patients and ask what the detection frequency would be if they used various numbers of test sequences.
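The logic of that computer experiment can be sketched in a few lines of Python. This is a toy illustration, not the authors' method: the tumor data are made up, and the rule used here for choosing amplicons (take the most frequently mutated ones first) is an assumption; the article's actual selection procedure may differ.

```python
from collections import Counter

# Hypothetical toy data: for each tumor genome in a database, the set of
# candidate sequences (amplicons) that carry a mutation in that tumor.
tumors = [
    {"A", "B"},
    {"A"},
    {"C"},
    {"B", "D"},
    {"E"},
    set(),  # a tumor with none of the candidate mutations
]


def detection_curve(tumors):
    """Rank amplicons by how many tumors they hit (an assumed rule), then
    return the ranking and the fraction of tumors detected when the panel
    uses the top k amplicons, for k = 1, 2, ..."""
    counts = Counter(a for t in tumors for a in t)
    ranked = [a for a, _ in counts.most_common()]
    curve = []
    for k in range(1, len(ranked) + 1):
        panel = set(ranked[:k])
        # A tumor counts as detected if any of its mutated amplicons is on the panel.
        detected = sum(1 for t in tumors if t & panel)
        curve.append(detected / len(tumors))
    return ranked, curve


ranked, curve = detection_curve(tumors)
for k, frac in enumerate(curve, start=1):
    print(f"top {k} amplicons: {frac:.0%} of tumors detected")
```

With these toy data the curve rises and then flattens, and it never reaches 100%, because one made-up tumor carries none of the candidate mutations. That is the same qualitative shape as the curves discussed below: beyond some point, adding more amplicons buys little.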

The following figure illustrates what they find...

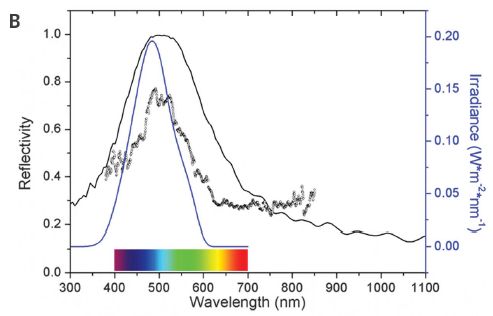

The three graphs show the chance of detecting the cancer (y-axis) vs the number of sequences -- or "amplicons" -- tested (x-axis). The term amplicon reflects the role of the PCR amplification. The x-axis is labeled here for the middle frame; it is the same for all frames.

Results are shown here for two specific types of cancer (ovary and liver), as well as the overall analysis for all eight types of cancer examined (left-hand panel).

Start with the middle panel, for ovarian cancer. The solid line shows that the chance of finding a cancer increases as they test more sequences. The important point is that the curve gradually levels off, and reaches a plateau at about 60 sequences tested. Without quibbling about the exact number... It is good to test 60 sequences, but there is little value in testing more than that.

The right-hand panel is a similar analysis for liver cancer. The percent of cancers detected is lower than for ovarian cancer, but the shape of the response curve is the same. In particular, 60 sequences is once again a good cutoff.

The full analysis reported in the article includes six more types of cancer. The pattern is similar for all, as illustrated by the two just discussed.

The left-hand panel is a summary over all eight types of cancer studied. Qualitatively, the graph is similar to the other two. The big point is that using about 60 sequences is a good compromise; little is gained by using more. The scientists settled on a set of 61 test sequences.

Each graph also contains a single point. It is at x = 61 amplicons. And the y value is just above the line we have been discussing. The point is based on an independent test with about a thousand cancer patients. Blood samples were tested, using the set of 61 test sequences. In each case shown here, the results were a little better than predicted from the solid line. (In fact, that was true for each type of cancer in the full study -- except one, for which the solid line was already very near 100%.)

That point at x = 61 supports the conclusion that the test with 61 test sequences detects a high percentage of cancers of various types.

This is slightly modified from the top row of Figure 1 of the article. The full figure also shows the results for six more types of cancer. I have added some labeling for the x-axis of the central panel here; it is the same for each panel.


So far, we have a test done with a single blood sample. It detects a variety of cancers; overall, it finds about 70% of them.

The test builds on recent developments. It makes use of the extensive databases of cancer genomes. And it makes use of low-cost DNA sequencing; that is intrinsic to the test, as well as being behind the databases.

The scientists added eight cancer protein markers to the test; these were measured using standard immunoassays on the same blood sample. These markers increased the detection percentages. They also ran 31 more protein tests as a follow-up. With these, they were able to address the question of cancer type. As before, the results vary by type of cancer, but are in the same range as above.

The DNA sequence testing, alone, yields no information about the type of cancer. The gene sequences tested are mutated in cancers generally; they are not specific to types of cancer.

What do we make of the test? It's an interesting development. It is providing information that is not currently easily obtained. Some of the cancer types studied here do not now have any early-detection system. But this is an early step. The authors do not claim this is a test ready for widespread use at this point.

Here are a couple of the issues for this test...

Cost. The authors estimate the test would cost about $500 (USD). Is that expensive or not? Not an easy question. It's actually in the range of many medical tests, though certainly more expensive than many routine tests. Of course, along with cost, we need to consider benefit. A simple screen for early-stage cancer would have tremendous benefit. The article has some information about the stage at which cancer becomes detectable by the proposed test; it's mixed. For now, the point is that cost (and benefit) must be carefully considered.

False positives. Tests such as this have two types of error. One is missing some cases; we call these false negatives. Above, we noted that the test finds about 70% of the cancers; it's missing a lot, but it is detecting many cases that would be missed otherwise. That may be useful progress. Tests may also find things that aren't real; we call these false positives. The article suggests that the rate of false positives here is about 1%. That may seem good, but even a low rate of false positives can be a problem.

To illustrate the problem of false positives... Imagine we have a test with 1% false positives. That sounds good. But let's use the test on the general population with a condition that occurs with 1% frequency. We test 100 people. We detect one case, and we get one false positive (statistically). That is, half the "hits" are false positives. Both of those will have to undergo further testing. Adjust the numbers a little, and you can see that broad screening for rare conditions can yield mainly false positives. Of course, the problem of false positives becomes less as the real incidence increases. False positives are less of an issue when screening populations at high risk (i.e., with higher incidence). There is no simple answer to what level of false positives is acceptable; it depends on the details of the situation, but it is a point that must be considered, especially in tests intended for broad screening.
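The arithmetic of that example is easy to play with in code. Here is a small Python sketch; the function name and parameters are just illustrative. As in the example above, it assumes by default that the test catches every real case (sensitivity of 1.0); change that value to see its effect.

```python
def screening_outcome(population, prevalence, sensitivity, false_positive_rate):
    """Expected true positives, false positives, and the fraction of
    positive results that are real (the positive predictive value, PPV)."""
    sick = population * prevalence              # people who really have the condition
    healthy = population - sick                 # everyone else
    true_pos = sick * sensitivity               # real cases the test catches
    false_pos = healthy * false_positive_rate   # healthy people flagged anyway
    ppv = true_pos / (true_pos + false_pos)
    return true_pos, false_pos, ppv


# The example in the text: 100 people, 1% prevalence, 1% false positives,
# and (as assumed there) every real case is caught.
tp, fp, ppv = screening_outcome(100, 0.01, 1.0, 0.01)
print(f"true positives {tp:.2f}, false positives {fp:.2f}, PPV {ppv:.2f}")

# Same test, used on a high-risk group in which 10% have the condition.
_, _, ppv_high = screening_outcome(100, 0.10, 1.0, 0.01)
print(f"PPV in high-risk group {ppv_high:.2f}")
```

In the first case about half the positives are real; in the high-risk group, over 90% are. Setting the sensitivity to 0.7, roughly the detection rate discussed above, makes the general-population picture somewhat worse: fewer true positives against the same number of false ones.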

Overall, as we have already noted, the article is an interesting step toward a general test for cancer.

The test is called CancerSEEK.

News stories:

* Simple blood test detects eight different kinds of cancer -- 'Liquid biopsy' technique looks for genetic mutations and proteins linked with tumours. (H Ledford, Nature, January 18, 2018.)

* Single Blood Test Screens for Eight Cancer Types -- Provides unique new framework for early detection of the most common cancers. (Johns Hopkins Medicine, January 18, 2018.) From the lead institution. It is a good overview of the work. As expected, perhaps, from the source, it is not so good at critical analysis. The following two news sources are better at that aspect.

* Expert reaction to paper on potential non-invasive blood test for multiple types of cancer. (Science Media Centre, January 18, 2018.)

* News story accompanying the article: Cancer: Cancer detection: Seeking signals in blood -- Combining gene mutations and protein biomarkers for earlier detection and localization. (M Kalinich & D A Haber, Science 359:866, February 23, 2018.)

* The article: Detection and localization of surgically resectable cancers with a multi-analyte blood test. (J D Cohen et al, Science 359:926, February 23, 2018.) Check Google Scholar for a freely available pdf of a preprint.

Another example of analyzing DNA that just happens to be in the blood: Genome sequencing of a human fetus (August 25, 2012).

More blood testing: Should we screen the blood supply for Zika virus? (May 20, 2018).

My page for Biotechnology in the News (BITN) -- Other topics includes a section on Cancer. It includes an extensive list of relevant Musings posts.

There is more about DNA sequencing on my page Biotechnology in the News (BITN) - DNA and the genome. It includes an extensive list of related Musings posts.

March 28, 2018

Nuclear-powered bacteria: suitable for Europa?

March 27, 2018

There is water on the Jovian moon Europa. There is almost certainly an ocean, under the surface. Is there life? What would be its energy source? There is no sunlight deep underground. Chemical energy? Perhaps. Or maybe nuclear energy.

A recent article examines the possibility that life on Europa might be powered by nuclear energy.

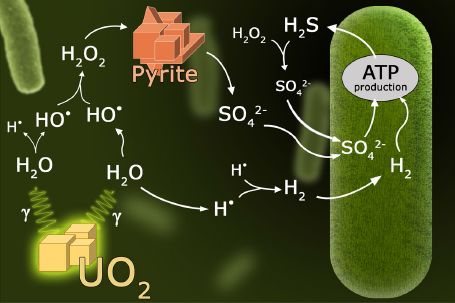

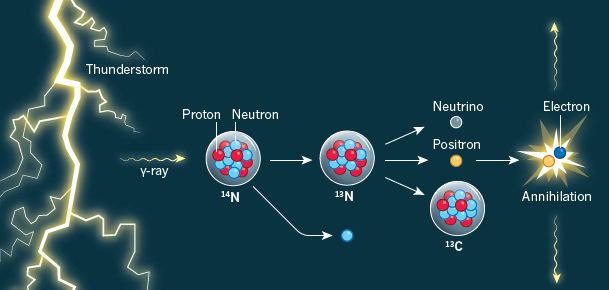

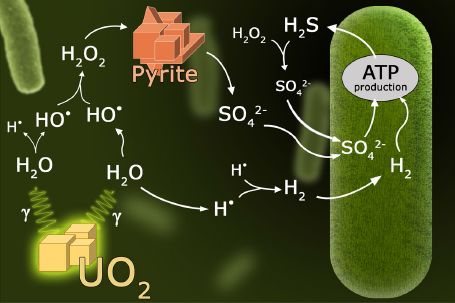

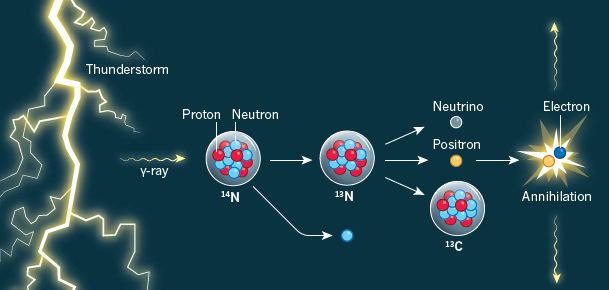

Here's the idea...

Start at the lower left. There is some UO2. That's the mineral uraninite -- a common mineral in the Solar System. What's important is that it is radioactive, and gives off gamma rays -- which are shown emanating from the mineral. (Isotopes of thorium and potassium also contribute γ-rays.)

The γ-rays break some water molecules into pieces: the hydrogen and hydroxyl radicals. (Careful... Not the ions, but the radicals.) That step is called radiolysis -- of water, in this case.

Two hydroxyl radicals can join together to form hydrogen peroxide, H2O2. Two hydrogen radicals can join together to form hydrogen molecules, H2. These two products are shown to the top and to the right, respectively.

The overall result of those steps is to convert ordinary water molecules into hydrogen peroxide plus hydrogen -- an oxidant and a fuel.

2 H2O --> H2O2 + H2.
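That net equation is simply the sum of the steps described above. Written out, simplified to just the species named here (real water radiolysis produces other species as well):

```latex
\begin{aligned}
2\,\mathrm{H_2O} &\xrightarrow{\ \gamma\ } 2\,\mathrm{H^{\bullet}} + 2\,{}^{\bullet}\mathrm{OH} \\
2\,{}^{\bullet}\mathrm{OH} &\longrightarrow \mathrm{H_2O_2} \\
2\,\mathrm{H^{\bullet}} &\longrightarrow \mathrm{H_2} \\
\text{net:}\quad 2\,\mathrm{H_2O} &\longrightarrow \mathrm{H_2O_2} + \mathrm{H_2}
\end{aligned}
```

The radicals formed in the first step are used up in the next two, so only water, hydrogen peroxide, and hydrogen remain in the net equation.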

That sounds hard. It is. It took nuclear energy to do it.

Continuing... There is some pyrite, FeS2. A sulfide mineral. Hydrogen peroxide (or the hydroxyl radical itself) can oxidize the pyrite -- the sulfide -- to make sulfate, SO4^2-.

And finally, the biology. It's well known that some bacteria can grow by oxidizing hydrogen with sulfate. And that's what the bug at the right does. The bug itself carries out ordinary biochemistry, but its substrates are present because of the radiolysis of water promoted by the γ-rays from the radioactive uranium.
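For the chemically inclined, the bookkeeping can be checked with a few lines of Python. The sulfate-reduction equation used here, 4 H2 + SO4^2- + 2 H+ --> H2S + 4 H2O, is a standard textbook form of "oxidizing hydrogen with sulfate"; it is not taken from the article, and real organisms may make HS- rather than H2S, depending on pH. The species table and function names are illustrative only. The sketch simply confirms that atoms and charge balance on both sides, for that reaction and for the water-splitting equation above.

```python
from collections import Counter

# Element counts and charge for each species, written out by hand.
SPECIES = {
    "H2": (Counter(H=2), 0),
    "H2O": (Counter(H=2, O=1), 0),
    "H2O2": (Counter(H=2, O=2), 0),
    "SO4^2-": (Counter(S=1, O=4), -2),
    "H+": (Counter(H=1), +1),
    "H2S": (Counter(H=2, S=1), 0),
}


def totals(side):
    """Sum element counts and charge over a list of (coefficient, species) pairs."""
    atoms, charge = Counter(), 0
    for coeff, name in side:
        elems, q = SPECIES[name]
        for el, n in elems.items():
            atoms[el] += coeff * n
        charge += coeff * q
    return atoms, charge


def is_balanced(left, right):
    """True if both sides have the same atoms and the same total charge."""
    return totals(left) == totals(right)


# Radiolysis, net: 2 H2O --> H2O2 + H2
print(is_balanced([(2, "H2O")], [(1, "H2O2"), (1, "H2")]))
# Sulfate reduction: 4 H2 + SO4^2- + 2 H+ --> H2S + 4 H2O
print(is_balanced([(4, "H2"), (1, "SO4^2-"), (2, "H+")], [(1, "H2S"), (4, "H2O")]))
```

Both checks pass. Note that it takes four H2 molecules to reduce each sulfate, so the bacteria need a steady supply of radiolytic hydrogen.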

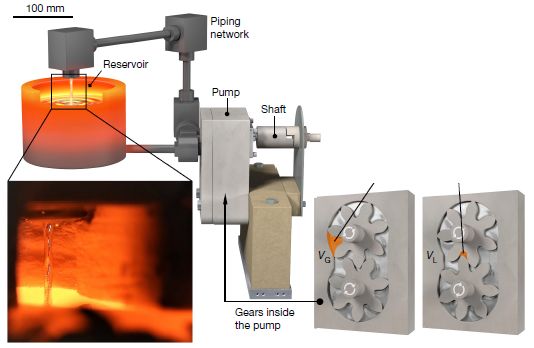

This is Figure 1 from the article.


Put all that together, and you have a nuclear-powered bacterium. In a very real sense, the bacteria are nuclear-powered, but it is also true that they are ordinary bacteria, doing ordinary biochemistry. It is the overall biogeochemical picture that makes us characterize the bacteria as nuclear-powered, not any special biochemistry.

Think about... If your electric utility uses nuclear energy, you don't get uranium in your electrical lines. The nuclear reaction just provides the energy for the first step: heating water in the case of a nuclear reactor making electricity. The electricity you get is ordinary electricity. Similarly here, the nuclear reaction just provides the energy for the first step.

In fact, that pathway shown above was proposed for a bacterium discovered in South Africa a decade ago, Candidatus Desulforudis audaxviator. (The lead word there means that we have a proposed name.) The organism was growing -- alone -- deep in a gold mine. As best we can tell, it is growing just as suggested in the figure above. It is a nuclear-powered bacterium -- on Earth.

The current article builds on that model, and explores whether it might hold on Europa. In particular, the article does quantitative modeling, using estimates of the relevant parameters. The general conclusion is that it seems reasonable. That doesn't seem a big surprise, given that we have such an organism on Earth. But the extension to Europa is at least provocative. It raises the possibility, even plausibility, that life on Europa is nuclear-powered. It also provides some guidance as to things we might want to measure on Europa.

News stories:

* This Strange Species That Lives Off Nuclear Energy Is Like Alien Life on Earth. (M Starr, Science Alert, February 26, 2018.)

* Brazilians create model to evaluate possibility of life on Jupiter's icy moon. (J T Arantes, Agência FAPESP, February 21, 2018.) From the São Paulo Research Foundation, a funding source for the current work; FAPESP is an acronym based on their Portuguese name. Overall, this is an excellent overview of the article, with context and implications. (But note that the news story does mix up ions and radicals at one point.)

The article, which is freely available: Microbial habitability of Europa sustained by radioactive sources. (T Altair et al, Scientific Reports 8:260, January 10, 2018.)

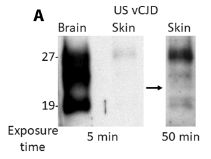

More on Europa: