Musings: January - April 2017 (archive)

Musings is an informal newsletter mainly highlighting recent science. It is intended as both fun and instructive. Items are posted a few times each week. See the Introduction, listed below, for more information.

If you got here from a search engine... Do a simple text search of this page to find your topic. Searches for a single word (or root) are most likely to work.

Introduction (separate page).

This page:

2017 (January-April)

April 30

April 26

April 19

April 12

April 5

March 29

March 22

March 15

March 8

March 1

February 22

February 15

February 8

February 1

January 25

January 18

January 11

January 4

Archive items may be edited, to condense them a bit or to update links. Some links may require a subscription for full access, but I try to provide at least one useful open source for most items.

Please let me know of any broken links you find -- on my Musings pages or any of my regular web pages. Personal reports are often the first way I find out about such a problem.

April 30, 2017

Synthetic stem cells?

April 30, 2017

Synthetic stem cells? That's in the title of both news stories below. What could that possibly mean? After all, the purpose of stem cells is to provide cells that can grow, and we don't really know how to make synthetic cells that can grow.

The work here addresses a special case. There has been a lot of work using cardiac stem cells, so-called adult stem cells, to treat heart damage. The results have been mixed, but over time scientists have come to understand that these stem cells may be of real benefit, but not by working the way we expected. These cardiac stem cells may act primarily by promoting growth of the host tissue, not by providing cells per se. They promote growth by providing growth factors -- and by stimulating the host cells by direct membrane interactions.

If the cardiac stem cells act primarily by providing those functions, perhaps we can make a substitute that will serve. And that's the idea in this new article.

The following figure shows some bottom line results...

The experiments use mice. The mice are given a lab-induced heart attack (myocardial infarction, or MI); groups are then treated in one of four ways.

The bar height shows a measure of heart function. It is the left ventricular ejection fraction (LVEF).
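For readers unfamiliar with the measure: the ejection fraction is conventionally the fraction of the blood in the filled ventricle that is pumped out with each beat. Here is a minimal sketch of that arithmetic in Python. The function name and the volumes are made up for illustration; they are not data from the article.

```python
def ejection_fraction(end_diastolic_volume, end_systolic_volume):
    """Return the ejection fraction as a percentage.

    Both volumes must be in the same units (for example, microliters).
    """
    if end_diastolic_volume <= 0:
        raise ValueError("end-diastolic volume must be positive")
    if not 0 <= end_systolic_volume <= end_diastolic_volume:
        raise ValueError("end-systolic volume must lie between 0 and the end-diastolic volume")
    # Stroke volume: the blood actually pushed out in one beat.
    stroke_volume = end_diastolic_volume - end_systolic_volume
    return 100.0 * stroke_volume / end_diastolic_volume


# Hypothetical volumes, for illustration only.
print(ejection_fraction(60.0, 24.0))  # 60.0: most of the filled volume is ejected
print(ejection_fraction(80.0, 52.0))  # 35.0: a weaker heart
```

A lower percentage means the ventricle is emptying less completely, which is why the bar height serves as a measure of heart function.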

The graph on the left (part j) shows the results before treatment ("baseline"). All four groups are about equal, with poor function. The graph on the right (part k) shows the results after treatment. There are two low bars, about as before, and two high bars, showing improvement. The two low bars are for controls. The two high bars are for cardiac stem cells (CSC; blue, at the right) and the artificial stem cells the scientists have prepared. The latter are called cell-mimicking microparticles, or CMMP (green).

That is, the synthetic stem cells, the CMMP, work about as well as the cardiac stem cells.

The red bars? They are for an incomplete form of the CMMP. Perhaps it showed a small effect, though it is not statistically significant.

This is Figure 4 parts j and k from the article. I have added a key at the right side.

What are these cell-mimicking microparticles? They are based on synthetic particles, but they contain growth factors from cardiac stem cells, and they are coated with membranes from cardiac stem cells.

If this result holds up, it supports the idea that cardiac stem cells may be useful, but not by providing cells per se. That is, work with the synthetic stem cells enhances our understanding of the system. Further, the CMMP may actually have advantages for treatment. They are robust and easily stored, and they avoid immunological concerns.

Whether the CMMP work in humans is open; the work is entirely with mice. And there is no implication here about stem cells in general -- except to remind us that stem cells may do different things in different cases.

News stories:

* Synthetic stem cells offer benefits of natural stem cells without the risks. (Kurzweil, January 13, 2017.)

* Synthetic stem cells repair damaged hearts. (P Waldron, BioNews, January 9, 2017.)

The article, which is freely available: Therapeutic microparticles functionalized with biomimetic cardiac stem cell membranes and secretome. (J Tang et al, Nature Communications 8:13724, January 3, 2017.)

A post about the use of cardiac stem cells: Cardiac stem cells as a treatment for heart damage: preliminary results are "very encouraging" (November 29, 2011).

A recent post about heart damage: The role of mutation in heart disease? (April 25, 2017).

Another post using a similar experimental system of artificial heart attacks in mice: Zebrafish reveal another clue about how to regenerate heart muscle (December 11, 2016).

and more...

* Heart regeneration? Role of MNDCMs (November 10, 2017).

* If an injured heart is short of oxygen, should you try photosynthesis? (June 25, 2017).

More about heart regeneration: Human heart organoids show ability to regenerate (May 2, 2017). That is the next post.

There is more about stem cells on my page Biotechnology in the News (BITN) for Cloning and stem cells. It includes an extensive list of related Musings posts, including those on the broader topic of replacement body parts.

Water desalination using graphene oxide membranes?

April 29, 2017

Sea water is, largely, a solution of sodium chloride in water. If we could simply filter it, so that the water went through but the salt stayed behind, we could make drinking water. However, filtering out something that is dissolved is generally difficult.

Work with graphene has suggested that filtration of salt water might work. However, in real experiments, the material swelled, and the salt went through the filter.

A new article reports further progress in the development of graphene membranes that might work for filtering the salt out of salt water.

The following graph shows some results...

The graph shows the filtration rates for three chemical species. Those rates are shown on the two y-axes; we'll come back to what the axes mean in a moment.

The x-axis is a measure of the effective pore size in the membranes. It shows the "interlayer spacing", the spacing between layers in the multi-layer membrane. We'll see a diagram of this below.

There are three data sets on the graph. The top two are for potassium ions (red symbols and dotted line) and sodium ions (black). You can see that these two lines are very similar. For simplicity, we'll focus on the Na+ line. (I suggest you ignore the error bars.)

The third line, with blue symbols, is for water.

At first glance, the water line may seem to be only a little less steep than the Na+ line. However, we need to look more carefully at the axis scales. The data for the ions use the y-axis scale on the left; the data for the water use the y-axis scale on the right. It's common to make a graph with multiple y-axis scales, but in this case, there is something special: the left scale is a log scale; the right scale is linear.

To get a feel for the effects, look at the difference between the results for the smallest and largest inter-layer spacings. For Na+, the rate increases by about 30-fold; for water, it increases by about 2-fold. More relevantly, look at it "backwards", from right to left... As the spacing becomes smaller, the rate of ion flow is reduced much more than the rate of water flow. That is, as the spacing becomes smaller, there is better separation of the water from the ions. That's exactly what we want.
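The fold-change comparison can be put in numbers. The sketch below is only an illustration: it uses the approximate fold-changes quoted above (about 30-fold for Na+, about 2-fold for water), and the function names are my own. Because it works only with ratios, it does not depend on the different units used for the two kinds of rates.

```python
import math

# Approximate fold-changes quoted in the post (largest vs smallest spacing).
ION_FOLD_CHANGE = 30.0    # Na+ rate, plotted on the log axis
WATER_FOLD_CHANGE = 2.0   # water rate, plotted on the linear axis


def decades(fold_change):
    """Number of powers of ten covered by a fold-change (distance along a log axis)."""
    return math.log10(fold_change)


def selectivity_gain(ion_fold, water_fold):
    """Improvement in the water-to-ion rate ratio when going from the
    largest spacing to the smallest spacing."""
    return ion_fold / water_fold


print(round(decades(ION_FOLD_CHANGE), 2))    # about 1.48 decades on the log axis
print(round(decades(WATER_FOLD_CHANGE), 2))  # about 0.3 decades, had water been on a log axis
print(selectivity_gain(ION_FOLD_CHANGE, WATER_FOLD_CHANGE))  # 15.0
```

That is, with these rough numbers, narrowing the spacing across the tested range improves the separation of water from Na+ by something like 15-fold.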

Don't try to compare the rate numbers for water and ions. They are measured in different ways.

This is Figure 2b from the article.

What are these membranes? The scientists used laminated graphene oxide (GO). People have tried that before, but, as we noted above, the material tends to swell in water; when swollen it allows the salt to pass. What they did here was to block the swelling.

Here's the idea...

Start with the horizontal black lines. Those are the sheets of graphene oxide. You can see that we have a basic structure of layers of GO.

The water molecules, shown in red, move between the layers of GO. They emerge on the right.

The ions, Na+ (purple) and Cl- (green), are unable to pass between the layers. They remain at the left.

Overall, the salt water on the left is converted to pure water on the right, by filtration between the layers of GO.

Note that the filtration is between layers, not through pores. Thus the spacing between layers is the critical issue. That is the x-axis variable in the top graph.

Learning to control the inter-layer spacing was the key development here. The scientists did that in two steps. First, they prepared the membranes at various humidities; that determines the swelling and thus the spacing. Then they encapsulated the material in epoxy, shown in yellow, to preserve the spacing.

This is Figure 1a from the article.

Let's go back to the top graph. A key point there is that the spacing affects ion movement much more than it affects water movement. Why? For water, we are talking about simple movement of the water molecules. Bigger spaces allow it to move faster. But for the ions, it is more complex. The ions in the water are surrounded by water molecules, often called water of hydration. The hydrated ions are quite large, and the water is tightly bound to the charged ions. In order to move through the filter, the ions need to be freed of (some of) their hydration, so they are small enough to pass. It takes energy to remove the water around the ions. That makes the ion movement more sensitive to the details of the filtration.

In principle, GO membranes with restricted swelling might be an economical way to do desalination. The energy requirements should be much lower than for reverse osmosis, the major current process. So far, we have only these early lab experiments, demonstrating the idea. The current article shows how to overcome one technical hurdle. We'll see what happens as development continues.

News stories:

* Graphene-based sieve turns seawater into drinking water. (P Rincon, BBC, April 3, 2017.)

* Graphene sieve turns seawater into drinking water. (Phys.org, April 3, 2017.)

* News story accompanying the article: Ion sieving and desalination: Energy penalty for excess baggage. (R Devanathan, Nature Nanotechnology 12:500, June 2017.)

* The article: Tunable sieving of ions using graphene oxide membranes. (J Abraham et al, Nature Nanotechnology 12:546, June 2017.)

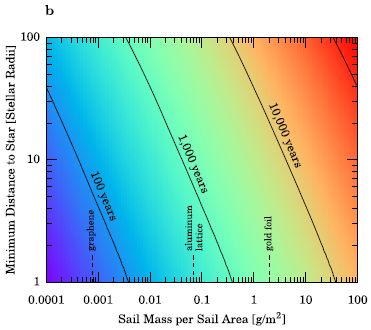

A recent post about graphene: A better way to get to Alpha Centauri? (March 15, 2017).

More graphene...

* GO dough (April 9, 2019).

* Coloring with graphene: making a warning system for structural cracks? (June 2, 2017).

More about water treatment...

* Purifying water using fluorinated nanopores (August 16, 2022).

* Better membranes for water desalination (February 14, 2021).

* Reducing corrosion of sewage pipes (September 27, 2014).

Posts about graphene are listed on my page Introduction to Organic and Biochemistry -- Internet resources in the section on Aromatic compounds.

April 26, 2017

The quality of science news

April 26, 2017

The quality of news is itself a news item. Recent months have brought considerable attention to the topic, including much discussion of "fake news". Of course, we can raise the same issues about science news.

Two organizations that do science news have boldly put forth an analysis and summary. They are the American Council on Science and Health (ACSH) and RealClearScience. Both groups posted the summary, each with their own commentary. These are listed below as the news stories, which are the heart of this post.

I broadly agree with their ranking of news sources, for those I know about. I do think that their analysis made things too complicated at times. Criticizing Physics World, published by the Institute of Physics, because they only cover physics is a cheap shot -- and detracts from the main point that it is high quality coverage. But overall, that is a minor criticism. (I suggest that they use one ranking criterion: quality. Their other comments can be shown as characteristics, but they are not quality per se.)

The ACSH page also links to a story in which they attempt to characterize fake news. I think this is less successful; it is over-reliant on generalities. Nevertheless, it makes some good points. Read it if you want, but I suggest you not take it too literally.

Analyzing news quality is not simple, in science or in general. The organizations here deserve credit for tackling the issue, and for making good, if imperfect, contributions.

News stories:

* Infographic: The Best and Worst Science News Sites. (A Berezow, American Council on Science and Health, March 5, 2017.)

* Ranked: The Best & Worst Science News Sites. (R Pomeroy & T Hartsfield, RealClearScience, March 6, 2017.)

I learned of the analysis from an editorial in Nature: Science journalism can be evidence-based, compelling - and wrong. A ranking of the best science-news outlets misjudges the relationship between research and reporting. (Editorial; Nature 543:150, March 9, 2017.) It is critical of the rankings. It makes some interesting comments, but overall seems unduly harsh.

A previous post about science news reporting: Media hype about scientific articles: Who is responsible? (March 9, 2015). The post includes some comments about how I choose news items for Musings.

More about news: Comparing how true and false news stories spread (June 5, 2018).

My page for Biotechnology in the News (BITN) -- Other topics includes a section on Ethical and social issues; the nature of science.

Musings has referred to a few news stories from ACSH, most recently: Update: Ebola vaccine trial (January 24, 2017).

The role of mutation in heart disease?

April 25, 2017

Mutations accumulate as we age. Cancer and heart disease (atherosclerosis) are, in large part, diseases of old age. It is well accepted that the accumulation of mutations as we age contributes to the development of cancer. Is it possible that this holds for heart disease, too?

A recent article offers some support for the idea, using a mouse model of heart disease. The scientists show that mutations in a particular gene lead to larger arterial plaques. They offer some explanation for the connection.

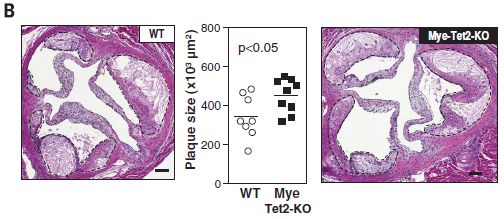

Here is an example of the results...

This experiment uses two strains of mice. One is wild type: WT. The other is labeled Mye-Tet2-KO. That means they lack the enzyme Tet2 in myeloid (bone marrow) cells. (KO stands for knock out.)

Other features of the experiment are designed to enhance plaque formation. The mice lack the receptor for low density lipoprotein (LDL); that receptor is protective. And they are fed a diet high in fat and cholesterol.

The graph in the middle shows the plaque sizes in these two kinds of mice. You can see that the mutant mice had larger plaques, on average.

The two photos, at the sides, show examples of the plaques from the two kinds of mice. The one on the left is for WT mice; the one on the right is for the mutant mice. There are dashed lines around the plaques. The plaques on the right are larger, though that may or may not be obvious by eye.

This is Figure 2B from the article.

The figure above suggests that lack of Tet2 can lead to greater formation of arterial plaques. What is Tet2, and how does it have this effect?

That's complicated. The name Tet2 is unhelpful: Tet stands for ten-eleven translocation. It is an enzyme that modifies DNA, but it probably has other roles, too. It has the potential to alter gene function. In any case, it is known to affect differentiation in the blood cell system.

In other experiments reported here, the scientists showed that Tet2-deficient mice preferentially developed more Tet2-deficient macrophages, and had higher levels of inflammatory signaling molecules. This work begins to make a connection between the mutation and a pathway leading to heart disease.

We emphasize that there is no direct evidence that Tet2 mutations lead to heart disease in humans. What we have here is some evidence from mice, and some logic. Many people with heart disease lack the common risk factors. And it is known that Tet2 mutations accumulate with age. It is plausible that such accumulation of mutations might be a factor in human heart disease. What next? Can we make a direct connection to heart disease in humans? Are people with atherosclerosis more likely to have Tet2 mutations? Is it possible that treatment of the Tet2 defect could reduce plaque formation?

The main point of the article is to focus attention on the possible role of mutations accumulated during aging in conditions other than cancer.

* News story: A role for mutated blood cells in heart disease? (Medical Xpress, January 19, 2017.)

* News story accompanying the article: Cardiovascular disease: Hematopoietic stem cells gone rogue. (Y P Zhu et al, Science 355:798, February 24, 2017.)

* The article: Clonal hematopoiesis associated with TET2 deficiency accelerates atherosclerosis development in mice. (J J Fuster et al, Science 355:842, February 24, 2017.)

Heart posts include:

* Synthetic stem cells? (April 30, 2017).

* Zebrafish reveal another clue about how to regenerate heart muscle (December 11, 2016).

* The opah: a big comical fish with a warm heart (July 13, 2015).

* Red meat and heart disease: carnitine, your gut bacteria, and TMAO (May 21, 2013).

* Putting the MRI machine in the patient (June 15, 2009).

Immunization of devils: a treatment for a transmissible cancer?

April 24, 2017

We have another interesting development in the story of the Tasmanian devils and their common cancer, devil facial tumor disease (DFTD). This cancer is unusual in that it is directly transmissible from one animal to another. In fact, the devils transmit it efficiently; biting each other is a common behavior. The devils have become an endangered species.

Investigation of the cancer has suggested that one reason for its virulence is that it fails to induce an immune response, because it fails to display antigens properly. [Links to previous posts on the DFTD are at the end. The 2013 post is about this particular finding on the lack of immune response.]

A new article, from the same lab, builds on that finding. If there is a defective immune response, perhaps we can fix it. To try to do that, the scientists make DFTD cells that do display tumor antigens, and use those cells as a vaccine. It works, though it is more complicated than that statement might suggest.

The following figure shows a sampling of the results...

The graphs show tumor size (volume, on the y-axis) vs time (x-axis) for several tumors.

It's a rather complex experiment, but fortunately one can get a useful overview with a quick inspection of the graphs.

In part e (lower graph), the tumor sizes increase steadily. In part d (the two upper graphs), the tumor sizes peak and then decline. What's the difference? The animals in part d had been immunized against the tumor. The animal in part e was a control, not immunized.

These are parts of Figure 3 from the article.

That is, the figure above shows a successful treatment of the cancer. Let's look further at what is going on.

A key point in following the procedures is to realize that there are two forms of tumor cells here. The usual form does not display tumor antigens. However, in the lab, the scientists can make a form that does display them. The latter are called MHC-I+ DFTD cells. You can see that name on the key in the graphs, by the vertical red dashed line. The term MHC-I refers to the part of the immune system that had failed, but which they have "fixed".

Here is the basic procedure for part d...

- The animals were immunized by injecting them with dead MHC-I+ DFTD cells. That is, the immunization is against material that displays the tumor antigens in a form appropriate for an immune response. (The control animal, in part e, was not immunized. It received only the adjuvant, the carrier for the immunization, which helps stimulate the response.)

- The animals were injected with live tumor cells. This is at time zero on the graphs. You can see that this resulted in tumor growth in all the animals shown above over the following months. (There are two tumor injections, one on each side of the animal. LHS = left hand side. Qualitatively, the results are similar for both, so we won't worry about this point further.)

- After tumor growth was apparent, the animals were injected with live MHC-I+ DFTD cells -- that is, with live, antigen-displaying tumor cells. This was done at the time marked by the vertical red dashed line. In the immunized animals (part d), the tumors regressed soon after the injection of antigen-displaying cells.

There is one more tumor to consider; it is at the site of the injection of the live treatment cells, and is labeled IS. Among the immunized animals, an IS tumor appeared in only one of the two, and it remained small. In the non-immunized animal, the IS tumor continued to grow.

In summary... This is a two-step procedure. First, animals are immunized against the tumor antigens. Second, animals with tumors are treated with antigen-displaying cells. That lets the immunized animals mount a significant immune response against the tumors, which then regress. Although the tumor cells do not display antigens in the form that stimulates the immune system, they are susceptible to the immune response once it is turned on.

This is difficult work. It is with an endangered species, and the supply of animals for lab work is very limited. Each graph is for one animal; each line is for one tumor. The entire work in the article used nine animals -- over five years. Most of the experiments were isolated, without direct controls. Nevertheless, it's encouraging. If this holds up, immunization of animals prior to contracting the disease followed by immune stimulation after they have tumors may be effective in treating the devil facial tumor. Perhaps simpler treatments can be developed.

It could save the species. What is the right way to proceed?

News stories:

* Immunotherapy trial cures Tasmanian devils of DFTD. (Phys.org, March 9, 2017.)

* Breakthrough boosts hope for treating contagious cancer in Tasmanian devils. (S Dasgupta, Mongabay, March 14, 2017.)

The article, which is freely available: Regression of devil facial tumour disease following immunotherapy in immunised Tasmanian devils. (C Tovar et al, Scientific Reports 7:43827, March 9, 2017.)

Key background post on the DFTD immunity problem: Why the facial tumor of the Tasmanian devil is transmissible: a new clue (April 5, 2013). The article of this post is reference 10 of the current article.

Other posts on the DFTD:

* Tasmanian devils: Are they developing resistance to the contagious cancer? (September 6, 2016).

* The devil has cancer -- and it is contagious (June 6, 2011). Includes pictures; one is of an animal with a tumor.

Another transmissible cancer: Is clam cancer contagious? (April 21, 2015).

Also see:

* Predicting who will respond to cancer immunotherapy: role of high mutation rate? (October 6, 2017).

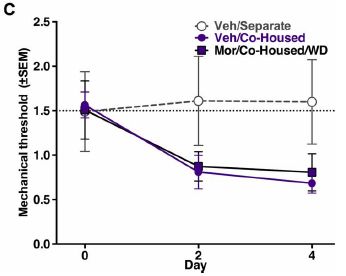

* Cancer and pain -- and immunotherapy (July 7, 2017).

My page for Biotechnology in the News (BITN) -- Other topics includes a section on Cancer.

What do bats argue about?

April 21, 2017

Food. Where to sleep (and with whom). And so forth, according to a new article.

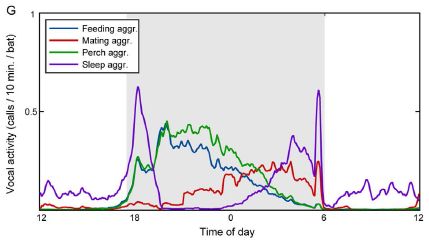

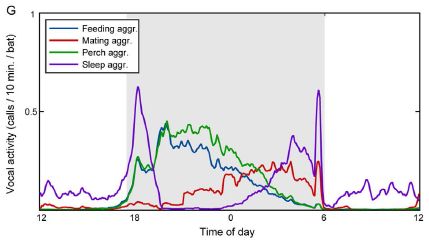

Here is a summary of one part of the findings...

The frequency of different kinds of bat calls over a day.

This is Figure 1G from the article.

The graph shows that there are patterns of bat calls, during the daily cycle. But the big issue here is that to make that graph, the scientists had to be able to understand what the bats were saying. That's what the article is really about.

Bats are highly social animals. They live in stable groups for many years. And they make a lot of noise. Are they just noisy, or are they communicating?

To try to find out, the scientists made audio-video recordings in a small lab-based colony of Egyptian fruit bats, Rousettus aegyptiacus, for 75 days. That is, they recorded the sounds, and also what the bats were doing. They then analyzed the collection of sounds by computer, looking for patterns. Of course, that was a lot of sound and a lot of information. To help make connections, they focused on situations where only a small number of bats were involved. After all, if one bat refers to another by name, it is easier to figure that out if there are only a few bats around at the time.

Interestingly, the computer figured out a good portion of what was being said. You can see examples of the calls in the article along with some of the conclusions, but there is no way to see how the analysis was done. (The calls are presented as spectrograms, pictures showing the intensity of various frequencies vs time.)

The bats identified other individuals as friend or foe, and sometimes seemed to use names for individuals. They did talk about resources. And the kids would call in distress if left alone. In a sense, the findings of what the bats talked about aren't remarkable. What would you expect them to talk about? What's remarkable is that we are listening in on a colony of bats here, and we knew nothing of their language. The analysis to figure out the language is something like deciphering the Rosetta Stone. We knew nothing about the language, but we knew what it was about; with some effort, we could make the connection. Here, the analysis is high-tech, and the language is that of a bat, but the overall logic is the same.

How good is the analysis? Is it possible that the scientists introduced their own biases about what they expected? In fact, much of the analysis is presented with probabilities; they assign a certain meaning to a certain call -- with a certain probability. Some of the reported probabilities are not very high, even if they do appear to be statistically significant. Per se, that's not a criticism. After all, the data are limited at this point. It will be interesting to see what happens from here. For example, do the bats respond to artificial sounds, designed based on our understanding of their language, as we would expect? It would be nice to see independent replication of the work. And we must wonder... what about the languages of different bat colonies, or even species?

News stories:

* Study of bat vocalizations shows they are communicating with one another. (B Yirka, Phys.org, December 23, 2016.)

* Bat Calls Contain Wealth of Discernible Information. (Neuroscience News, December 30, 2016.)

The article, which is freely available: Everyday bat vocalizations contain information about emitter, addressee, context, and behavior. (Y Prat et al, Scientific Reports 6:39419, December 22, 2016.)

Posts about bats include:

* The use of wing clicks in a simple form of echolocation in bats (May 22, 2015).

* The tree where the West Africa Ebola outbreak began? (January 12, 2015).

* Baseball and violins (May 15, 2012).

* A plant that communicates with bats (September 7, 2011).

Posts about animal communication include:

* Can chimpanzees learn a foreign language? (March 10, 2015).

* Language: What do we learn from other animals? (August 3, 2010).

April 19, 2017

When rivers (or streams) join, what is the preferred angle between them?

April 18, 2017

It averages about 45° in very dry regions, and about 72° in humid regions.

How do we know? Because someone measured a million such angles, and published the analysis in a new article. The measurements were done with satellite photos that covered most of the contiguous United States (the "lower 48" states). Here is a summary...

The map shows the United States, color-coded by the average river junction angle in the area. There is a color key at the right. Briefly, yellows are for low angles, blues for large angles.

Some readers may recognize that the regions dominated by yellows tend to be dry, whereas blue regions tend to be wet.

This is Figure 2a from the article.

In fact, the scientists compare the map of river angles with a map of aridity; the two are shown together as Figure 2 parts a and b of the article. The upper map there is the same as the one above. The lower map shows aridity across the country. The two maps are amazingly similar.

Their measure of aridity is a little more complex than one might expect at first. Their aridity index (AI) is the ratio of precipitation to potential evapotranspiration, so low values mean dry. Qualitatively, that's fine, but the numbers won't be familiar.

Geologists have long thought about what affects river junctions, but had little systematic data. The results here suggest that, in dry regions, rivers tend simply to flow downhill. That is, they tend to be parallel, and joins are at low angles. In contrast, in wet regions, there is considerable spreading, accounting for joining at larger angles.
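To make "junction angle" concrete, here is a minimal sketch of how one might compute the angle between two tributaries from map coordinates. This is my own illustration, not the authors' procedure; the function name and the way the points are chosen (one point a short distance upstream on each tributary) are assumptions.

```python
import math


def junction_angle_degrees(junction, upstream_point_a, upstream_point_b):
    """Angle (degrees) between two tributaries meeting at `junction`.

    Each argument is an (x, y) pair in the same map units. The angle is
    measured between the two lines running from the junction to a point
    a short distance upstream on each tributary.
    """
    ax, ay = upstream_point_a[0] - junction[0], upstream_point_a[1] - junction[1]
    bx, by = upstream_point_b[0] - junction[0], upstream_point_b[1] - junction[1]
    norm_a = math.hypot(ax, ay)
    norm_b = math.hypot(bx, by)
    if norm_a == 0 or norm_b == 0:
        raise ValueError("upstream points must differ from the junction")
    cosine = (ax * bx + ay * by) / (norm_a * norm_b)
    cosine = max(-1.0, min(1.0, cosine))  # guard against rounding error
    return math.degrees(math.acos(cosine))


# Build two symmetric tributaries for each of the average angles quoted above.
for label, full_angle in (("dry", 45.0), ("humid", 72.0)):
    half = math.radians(full_angle / 2.0)
    point_a = (math.cos(half), math.sin(half))
    point_b = (math.cos(half), -math.sin(half))
    print(label, round(junction_angle_degrees((0.0, 0.0), point_a, point_b), 1))
```

Nearly parallel tributaries give a small angle; tributaries that spread apart before meeting give a larger one.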

The correlation between river angle and climate is based on detailed analysis of the data summarized in the maps. This is, literally, real-world data. One might wonder whether other features of the landscape affect the river junction angles -- in addition to the aridity. Indeed, the authors examine several variables that have been offered as factors that might affect river junction angles. Their statistical analysis of all the data says that the aridity index is the most significant contributor.

The authors suggest that understanding how rivers join on Earth could help in interpreting observations from other bodies, such as Mars or Titan.

Most likely, you had not thought about this issue before.

News story: Stream Network Geometry Correlates with Climate. (T Cook, EOS, April 6, 2017.) From the American Geophysical Union.

The article, which may be freely available: Climate's watermark in the geometry of stream networks. (H Seybold et al, Geophysical Research Letters 44:2272, March 16, 2017.) Caution... It is a 21 MB pdf file for this 9-page article. Lots of high resolution maps.

River posts include...

* Earth: RSSA (September 18, 2018).

* Atmospheric rivers and wind (May 9, 2017).

* Groundwater depletion in the Colorado River Basin (October 3, 2014).

A post about a non-Earth river system... TALISE: A better boat for Titan? (October 16, 2012).

Hm, "AI" has multiple meanings... Is AI ready to predict imminent kidney failure? (August 24, 2019).

The paperfuge: a centrifuge that costs 20 cents

April 17, 2017

Three years ago, Musings presented a microscope that cost less than a (US) dollar [link at the end]. It's not a toy, just very simple -- and useful.

We now have a centrifuge costing less than a dollar -- about 20 cents. It can be used to separate blood cells and plasma, and can separate out malaria parasites. It's not a toy either, but was inspired by one. It is from the same lab.

Video: There is a video from Stanford University, where the work was done: Stanford bioengineers develop a 20-cent, hand-powered centrifuge. (YouTube, 3 minutes; narrated by the senior and lead authors.) The video illustrates the device. Since the point of the work is the operation of the device, that part is important. You might watch the video before proceeding, or at any point along the way as you read the description below.

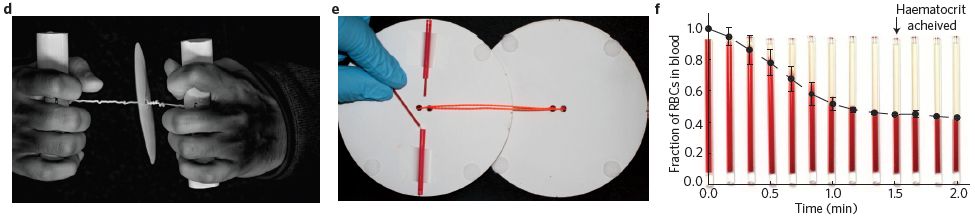

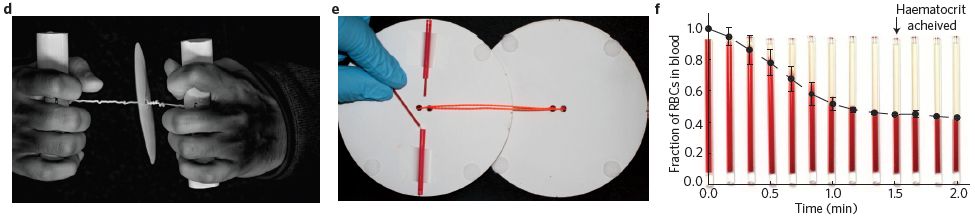

Part d (left) shows the device. The paper disc is spun by hand-power, using the twisted string between the disc and the hands.

Part e (middle) shows how capillary tubes, containing blood, are attached to the paper disc. (The second disc? In use, the two discs are face-to-face, with the samples in between.)

Part f (right) shows some results. The purpose here is to spin down the cells in the blood, leaving clear plasma at the top. The graph shows the fraction of the sample that is red cells (y-axis) vs time of centrifuging (x-axis). The size of that cell pellet, called the hematocrit, becomes stable after about 1.5 minutes of hand-operating the paperfuge. It ends up at about 40% (a "normal" value).

This is part of Figure 1 from the news story in the journal with the article. All three parts here are similar to figures in the article itself (Figures 1c, 3b, 3c, respectively).


The idea behind the paperfuge is not new. It is similar to a child's toy, called a whirligig. Figure 1a of the article shows a couple of examples of old ones. The authors note that such devices go back over 5,000 years.

The work here started by examining how the toy works. The article contains an extensive analysis of the principles behind the toy, with numerous equations and graphs. The scientists then used that analysis to develop a device optimized to serve as a simple centrifuge. It works about as well as standard lab centrifuges.

The result is a centrifuge that is simple and inexpensive to make and to use. And it works just fine. That's the point.

The authors have submitted their device to Guinness World Records. They claim it "is the fastest rotational speed reported via a human-powered device." (Page 1 of the pdf, referring to the 125,000 rpm speed.)
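For readers who want to relate a spin rate, such as the 125,000 rpm quoted above, to the force on a sample, here is a minimal sketch of the standard relation between rotation rate and centrifugal acceleration (a = ω²r). The function name and the example radius are illustrative assumptions. The sample radius for the paperfuge is not given here, so no value for the device itself is claimed.

```python
import math

G = 9.80665  # standard gravity, m/s^2

def relative_centrifugal_force(rpm, radius_m):
    """Return centrifugal acceleration, in multiples of g, for a sample
    at radius_m (meters) from the axis, spinning at rpm."""
    omega = 2 * math.pi * rpm / 60.0  # angular speed, rad/s
    return omega ** 2 * radius_m / G

# Hypothetical example: a rotor with a 10 cm radius at 1,000 rpm.
print(round(relative_centrifugal_force(1000, 0.10), 1))  # about 111.8 g
```

Note that the force scales with the square of the spin rate: doubling the rpm gives four times the force at the same radius.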

News stories:

* Ultra-low-cost, hand-powered centrifuge is inspired by whirligig toy. (M Allen, Physics World, January 11, 2017.)

* A low-cost, hand-powered paper centrifuge. (C Torgan, NIH, January 31, 2017. Now archived.) From the funding agency.

* News story accompanying the article: Diagnostics for global health: Hand-spun centrifuge -- A 20 cent centrifuge made of paper and string and operated by hand can separate plasma from blood in about 90 seconds. (M Bond & R Richards-Kortum, Nature Biomedical Engineering 1:0017, January 10, 2017.)

* The article: Hand-powered ultralow-cost paper centrifuge. (M S Bhamla et al, Nature Biomedical Engineering 1:0009, January 10, 2017.)

Senior author Manu Prakash gave a seminar at Berkeley a few weeks ago, at which he demonstrated, or at least played with, the paperfuge.

Background post... A ream of microscopes for $300? (June 22, 2014).

More inexpensive things: Making better artificial muscles (March 13, 2018).

... or, simpler things: Solar sterilization of medical equipment (February 6, 2021).

Another unusual centrifuge: A better way to un-boil an egg -- and why it might be useful (March 20, 2015).

More things spinning... A new record: spinning speed (October 12, 2018).

A perspective on POC... POCDx -- What's the barrier? (January 29, 2013). POC stands for point-of-care. Dx, in the title, stands for diagnostics. POC is not strictly synonymous with simple and inexpensive, but the terms overlap in common usage.

More about pulling strings: How bumblebees learn to pull strings (November 27, 2016).

Other posts about blood cells include... Progress toward a universal source for red blood cells, avoiding the need to match blood type (February 23, 2021).

Can antibodies to dengue enhance Zika infection -- in vivo?

April 15, 2017

Infection with one strain of dengue virus can make a subsequent infection with a different strain worse. Somehow, the antibodies against one strain enhance the infection with a different strain. The phenomenon is not well understood, but it does have a name: antibody-dependent enhancement (ADE).

Zika virus is rather closely related to dengue virus. Is it possible that prior infection with dengue affects Zika infection -- in any way? In particular, is it possible that dengue makes Zika worse, because of ADE between these two related viruses?

Musings addressed this issue about a year ago, with an article dealing entirely with cell culture. The work showed that dengue antibodies could enhance Zika infection [link at the end].

A new article addresses the question in a mouse model of Zika infection. Here is a key experiment...


The basic design is that several groups of mice were infected with Zika, and their survival was followed.

The groups differed in the pretreatment prior to the Zika infection. Each group was injected with one or another sample. The main injections of interest were with blood plasma containing antibodies to dengue (DENV) or West Nile (WNV) viruses. There are also two controls, one with an injection of buffer (PBS), one with no injection (CTRL).


The two controls show high survival. The group given dengue antibodies shows low survival. The group given West Nile antibodies shows intermediate survival.

This is Figure 3A from the article.


That is, these results fully support a role for ADE in this system. Antibodies to the distinct but closely related dengue virus enhance Zika infection -- in a real animal. West Nile is also related to Zika, but less closely; it has a similar -- but smaller -- effect.

The scientists found that high levels of the anti-dengue antibodies protected against Zika infection. That is, the dose response curve is complex! There is also some evidence that Zika strains vary in their response.

Interestingly, some scientists do not accept that dengue enhancement of Zika is likely. Mice are poor predictors of human immune responses -- and the experimental system here is more complex than we have explained. Nevertheless, there is considerable evidence showing at least the plausibility of the effect: it happens in at least some in vitro and in vivo systems. It would seem prudent to take seriously the possibility that it happens in humans.

The possible role of ADE has implications for understanding the natural history of these virus infections; multiple members of this group of viruses, the flaviviruses, are often found in the same area. Of course, it also has implications for vaccines.

News story: Anti-Flavivirus Antibodies Enhance Zika Infection in Mice -- Researchers report evidence of antibody-dependent enhancement in a Zika-infected, immunocompromised mouse model. (A Azvolinsky, The Scientist, March 30, 2017. Link is now to Internet Archive.)

* News story in an earlier issue of the journal: Dengue may bring out the worst in Zika -- Mouse study offers evidence of antibody "enhancement," which could explain severity of human cases. (J Cohen, Science 355:1362, March 30, 2017.)

* The article: Enhancement of Zika virus pathogenesis by preexisting antiflavivirus immunity. (S V Bardina et al, Science 356:175, April 14, 2017.)

Background post: A Zika-dengue connection: Might prior infection with dengue make a Zika infection worse? (May 7, 2016). The article discussed here is reference 14 of the current article.

More... The effect of prior dengue infection on Zika infection (April 20, 2019).

A post about how subsequent infections with dengue can be more serious than the first: Dengue fever -- Two strikes and you're out (August 10, 2010).

and... Dengue vaccine: a step backwards? (December 6, 2017).

* Previous post about Zika: Why some viruses may be less virulent in women (March 1, 2017).

* Next: Why does Zika virus affect brain development? (August 11, 2017).

There is a section on my page Biotechnology in the News (BITN) -- Other topics on Zika. It includes a list of Musings posts on Zika.

Gemmata obscuriglobus, a bacterium with features of a eukaryotic nucleus?

April 14, 2017

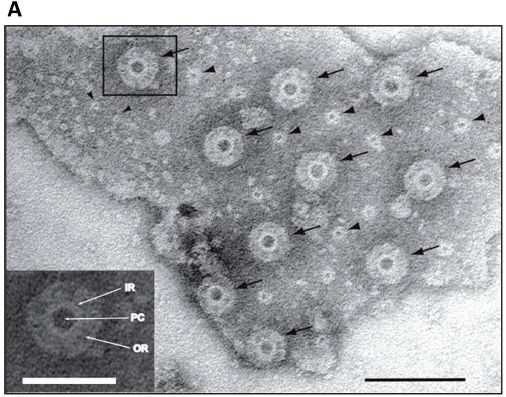

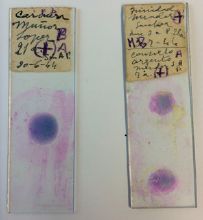

The following figure is from a recent article. According to the textbooks, the structure claimed here does not exist.

The figure shows an electron micrograph image of a membrane from Gemmata obscuriglobus. The membrane has pores.

The main type of pore is marked with arrows in the main figure. An enlarged view of the one that is boxed is shown in the inset at the lower left. PC = pore center; IR = inner ring; OR = outer ring.

The scale bars are 100 nanometers for the main figure, and 50 nm for the inset.

There is a second type of pore, marked with simple arrowheads. We won't comment on them further.

This is Figure 3A from the article.


The membrane is thought to be around the genome. That is, it seems to be a nuclear membrane. If the membrane is a nuclear membrane, then the pores are nuclear pores. In fact, more detailed analysis shows that the pores have features found in typical nuclear pores of eukaryotes.

So what's the problem? Gemmata obscuriglobus is a bacterium, and bacteria don't have nuclear membranes, much less nuclear pores.

The bacteria here are part of a recently described group called the Planctomycetes. It is clear that some of the Planctomycetes have extensive internal membranes, but there is no agreement on what they mean. The authors of the current article acknowledge that their work is controversial.

The authors do not suggest that the bacterial nuclear pores seen here are ancestral to eukaryotic nuclear pores. There are substantial differences, and sequence homology seems lacking. Instead, they suggest that these pores are the result of convergent evolution: the independent development of similar structures twice. If it arose more than once, it would suggest that it is not a difficult structure to get, despite its ultimate complexity.

What can we say for sure? This is another step toward characterizing the Planctomycetes, which is clearly an unusual group of bacteria. Some members of the group have features that seem eukaryotic-like.

Anything beyond that is speculation, for now.

But those nuclear pores are intriguing.

News story: Complex bacterium writes new evolutionary story. (Phys.org, February 1, 2017.)

The article, which is freely available: Nuclear Pore-Like Structures in a Compartmentalized Bacterium. (E Sagulenko et al, PLoS ONE 12(2):e0169432, February 1, 2017.)

Nuclear pores were mentioned in the post Origin of eukaryotic cells: a new hypothesis (February 24, 2015).

Another story of a prokaryote that is suspiciously rather eukaryotic... The Asgard superphylum: More progress toward understanding the origin of the eukaryotic cell (February 6, 2017).

This post is noted on my page Unusual microbes.

April 12, 2017

The nasal spray flu vaccine: it works in the UK

April 12, 2017

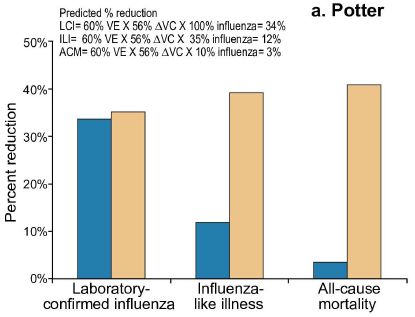

A recent article, from the UK, reports that the nasal spray flu vaccine works well. Why is this noteworthy? About a year ago, the (US) Centers for Disease Control and Prevention (CDC) concluded that it did not work.

Why the discrepancy? No one knows. The purpose here is to note it -- a challenge to figure out.

Let's backtrack and get an overview of the flu vaccine. There are two broad types of flu vaccine. The traditional flu vaccine is based on inactivated virus, and is given by injection. The newer vaccine is based on modified flu virus; it can infect, but does not cause disease. This vaccine, known as live attenuated influenza vaccine (LAIV), is given as a nasal spray. In principle, the LAIV has two advantages. First, the live virus can promote antibody formation on a continuing basis. Second, it avoids the use of needles. With both vaccines, there is a problem of choosing which flu strains to target; the flu virus is notoriously variable, on a year-to-year basis. Both vaccines include a mixture of different virus strains.

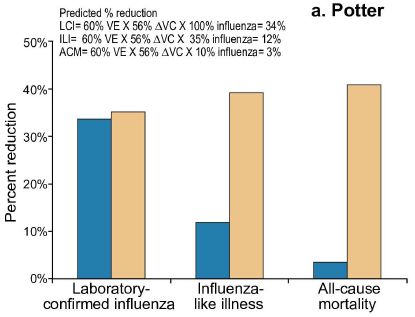

The basic facts here are simple...

- Last summer, the US government, through the CDC, voted against the nasal spray vaccine, citing data that it was only 3% effective in children (ages 2-17). In contrast, the traditional flu shots were 63% effective. (All data for vaccine effectiveness have considerable uncertainties, but the simple numbers convey the message.)

- The current article shows that the nasal spray vaccine was 54% effective for children (ages 2-6) in the UK. The authors recommend its continued use.
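As a reminder of what a number like "54% effective" means, here is a minimal sketch using the common textbook definition: effectiveness is one minus the relative risk of illness in vaccinated versus unvaccinated people. The function name and the attack rates are hypothetical, chosen only for illustration. The studies discussed here use more elaborate designs and statistical adjustments.

```python
def vaccine_effectiveness(risk_vaccinated, risk_unvaccinated):
    """Percent effectiveness = (1 - relative risk) * 100."""
    relative_risk = risk_vaccinated / risk_unvaccinated
    return (1.0 - relative_risk) * 100.0

# Hypothetical attack rates: 4.6% of vaccinated vs 10% of unvaccinated get flu.
print(round(vaccine_effectiveness(0.046, 0.10)))  # 54
```

An effectiveness near zero, as in the US finding, means the vaccinated and unvaccinated groups got sick at about the same rate.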

There are a number of possible differences between the studies. The current article discusses them. The general conclusion is that there is no explanation at this time for the discrepancy.

It's a reminder that the flu vaccine situation is complex and confusing.

News story: Flu Scan for Jan 27, 2017. (CIDRAP, January 27, 2017.) Scroll down to second story: Study in UK kids shows modest LAIV protection against severe flu.

The article, which is freely available: Live attenuated influenza vaccine effectiveness against hospitalisation due to laboratory-confirmed influenza in children two to six years of age in England in the 2015/16 season. (R Pebody et al, Eurosurveillance, in Volume 22, Issue 4, January 26, 2017.) Since our main purpose here is to note the contradictory results, the most interesting part of the article may be the discussion. The authors analyze what they did, and compare their results to other work, including the US findings. As already noted, there is no resolution at this point.

A page from the CDC announcing the ineffectiveness of the nasal spray vaccine: ACIP votes down use of LAIV for 2016-2017 flu season. (CDC, June 22, 2016. Now archived at the CDC site.) ACIP = Advisory Committee on Immunization Practices, in the CDC.

The following item is for general information.

* A page about flu vaccines from the CDC, in Q-and-A format: Vaccine Effectiveness - How Well Does the Flu Vaccine Work? (CDC, February 15, 2017.) It does not specifically mention the nasal spray vaccine, or the current article.

The nasal spray flu vaccine was discussed in the post Predicting vaccine responses (August 22, 2011). In that case, it fared more poorly than the injectable vaccine.

More on flu vaccine problems: What's wrong with the flu vaccine? (February 16, 2018).

More on needleless delivery of vaccines: Aerospace engineers develop explosive device for supersonic delivery of vaccines (August 2, 2011).

and... Clinical trial of self-administered patch for flu immunization (July 31, 2017).

Posts on the flu virus are listed on the page Musings: Influenza (Swine flu).

More on vaccines is on my page Biotechnology in the News (BITN) -- Other topics under Vaccines (general). It includes a list of related Musings posts.

How the tardigrades resist desiccation

April 10, 2017

The microscopic animals called tardigrades (or, commonly, water bears) are fascinating. Among their unusual properties is that they resist drying. By drying we refer to loss of internal water; the term desiccation is often used.

A new article offers insight into the desiccation resistance of tardigrades.

The first experiment we discuss shows that desiccation resistance depends on how the animals are dried...


In this experiment, two different drying conditions were used: slow and fast.

In the top part, the animals were slow-dried, then rehydrated. The results bar shows that 50 of 57 animals survived. That is about 88% (scale at the top).

In the second part, the animals were fast-dried. There were no survivors.

In the bottom part, the animals were first slow-dried, then fast-dried. About 60% survived.

This is Figure 1B from the article.


That experiment shows that slow-drying is better. But it shows something more. The final part suggests that slow-drying allows time for something to happen -- perhaps for some genes to be expressed. If that happens, then they can survive fast-drying. That is, it's not the fast removal of water that is the issue, but whether the animals have some time to prepare.

The authors then look for genes that are expressed upon slow drying. This leads them to a number of candidate genes.

The following experiment summarizes some results to test those candidate genes.

The general plan is to inhibit the candidate genes, one at a time. This is done by giving the animals a special RNA that targets the specific gene and blocks it. The method is called RNA interference (RNAi), a term that appears in the figure titles.

The figure shows the survival of animals with one or another gene blocked by RNAi. The set of genes was tested under two conditions.

Part A (left side) shows the results for ordinary conditions, that is, hydrated. Part B (right) shows the results for desiccation conditions.

The big picture... Under hydrated conditions, inhibition of any of these genes had no significant effect. ("ns" = not significant.) That is, these genes all appear to be non-essential. However, under desiccation conditions, there was reduced survival in many cases. Thus, as a generality, these genes seem involved in survival during desiccation.

Looking more closely...

- For one gene, survival was high under both conditions; see the green bar at the left of each set. This is in fact a control: green fluorescent protein (GFP), a gene that is not native to the animals, but which was added as a marker. Inhibiting it has no significant effect, wet or dry, as expected.

- Inhibition of each of the other genes seemed to lead to reduced survival. However, the effect was statistically significant only for some. These are the three lowest bars, all marked with one or more asterisks. The main point is that three of the candidate genes show a significant effect, judged from these results alone. That is, they have identified three genes that are involved in desiccation resistance. (The other genes can be studied further. For now, we make no claim about them.)

This is Figure 4 from the article.


The scientists do one additional type of experiment. For one of the proteins important for desiccation resistance in the tardigrades, they add it to yeast. Yeast with a tardigrade gene for desiccation resistance survive desiccation better. Survival increases from about 10⁻⁵ to about 10⁻³. Survival is still low, but it's 100-fold better with the one added protein. An intriguing result.
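The 100-fold figure follows directly from the two survival fractions. Here is a quick check; the function and variable names are just illustrative choices, and the survival values are the approximate ones quoted above.

```python
import math

def fold_change(before, after):
    """Return how many times larger 'after' is than 'before'."""
    return after / before

survival_without = 1e-5  # approximate yeast survival, no tardigrade protein
survival_with = 1e-3     # approximate survival with the added protein

change = fold_change(survival_without, survival_with)
print(round(change))              # 100
print(round(math.log10(change)))  # 2, that is, two orders of magnitude
```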

What do we know about these proteins? Interestingly, they seem to be proteins that lack a well-defined structure. Biochemists call them intrinsically disordered proteins. Upon drying, they form amorphous, glass-like structures. It may be that tardigrades survive drying because they are glassy, at least if they are given a little time to turn on their genes for glassiness.

News stories:

* Unstructured Proteins Help Tardigrades Survive Desiccation. (A Olena, The Scientist, March 16, 2017.)

* Tardigrades use unique protein to protect themselves from desiccation. (Phys.org, March 16, 2017.)

The article: Tardigrades Use Intrinsically Disordered Proteins to Survive Desiccation. (T C Boothby et al, Molecular Cell 65:975, March 16, 2017.)

A previous post on tardigrades: A space-faring bear that survives the vacuum of space -- and lay eggs normally (April 30, 2010). It is about desiccation resistance, but also links to some general information about the animals.

More...

* Tardigrade resistance to stress -- how do they do it? (March 27, 2024).

* How some tardigrades are resistant to ultraviolet light (October 27, 2020).

Another case of a desiccation-resistant animal... Lesbian necrophiliacs (March 8, 2010). The post presents a "reason" for the desiccation resistance -- and it ends with what we now recognize as Nobel-prize-winning music.

More about the disordered nature of glass: Turning metal into glass (September 21, 2014).

Staph fighting Staph: a small clinical trial

April 8, 2017

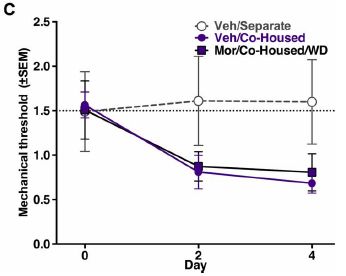

An interesting feature of infection with the pathogen Staphylococcus aureus is that most people who have it don't get sick. Two Musings posts have noted that part of the reason is that other bacteria, including other Staphylococcus strains, keep the pathogenic strains in check [links at the end].

A recent article takes the story a little further. The scientists study a skin disease called atopic dermatitis (AD). They show that it correlates with a higher level of S aureus, and a lower level of other "Staph" strains. Some of those other Staph make antibiotics, which inhibit the pathogenic Staph.

Would adding more "good" bacteria help restore the balance? The scientists show, with a mouse model, that treatment with "good" Staph strains reduces the colonization by the "bad" Staph.

Here is an example of the results...


In this experiment, mice were given an experimental skin infection with S aureus. They were then treated with a suspension of "good" Staph (clear bars), or with the vehicle -- the same type of solution but lacking the bacteria being tested (dark bars).

The y-axis shows the number of S aureus found on the skin, per square centimeter, at two different times.

After three days, the number of pathogenic bacteria was reduced by about 10-fold. After 7 days, the bad Staph were undetectable (though they don't say what the limit of detection was).

This is Figure 4D from the article.


The results above show that, for this experimental Staph infection of mouse skin, treatment with "good" bacteria reduces the load of "bad" bacteria.

The scientists then do a small test with human subjects with AD. The general nature of the test is similar to the one shown above, except that this is with people who have the skin infection. It is, in effect, a small Phase I trial.

Three conditions are shown: untreated, vehicle only, and AMT (the treatment with "good" bacteria).

The bacteria used here were isolated from each patient's own skin microbiome. Individual strains shown to make useful antibiotics were used. That is, this is a personalized treatment.

The y-axis measures the number of "bad" bacteria on the skin. In this case, the authors use a relative scale, but the idea is the same.

There is a single measurement, 24 hours after the treatment.

You can see that the points for AMT are low.


Further, for the main group of people, one arm was treated with the AMT bacteria and the other arm was treated with just the vehicle (lacking bacteria). The two points for the same person are shown on the graph connected by a line. Each line points downward, going from vehicle to AMT. That is, the treated arm has fewer S aureus bacteria than the untreated (vehicle) arm, for each person.

The "good" bacteria used here are strains of Staphylococcus hominis and Staphylococcus epidermidis. (An S hominis was also used in the mouse experiment, above.)

The vehicle used here was a common skin cream.

The plotted value is the ratio of the bacterial count after the treatment compared to the count before the treatment.

There is a graph problem here, too. The bottom of the graph is labeled zero; there is no zero on a log scale. Again, the proper question is what the limit of detection was. At least qualitatively for our purposes, it doesn't matter.

This is Figure 7C from the article.
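The point about zero on a log scale can be made concrete. The sketch below computes the plotted quantity (count after treatment divided by count before) and its logarithm. The counts and the detection limit are hypothetical values for illustration, not data from the article.

```python
import math

def log10_ratio(count_after, count_before, detection_limit):
    """Log10 of the after/before ratio.

    A count below the detection limit is reported at the limit,
    since log10(0) is undefined.
    """
    after = max(count_after, detection_limit)
    return math.log10(after / count_before)

# Hypothetical counts (bacteria per square centimeter).
print(round(log10_ratio(1_000, 100_000, detection_limit=10), 2))  # -2.0, a 100-fold drop
print(round(log10_ratio(0, 100_000, detection_limit=10), 2))      # -4.0, plotted at the limit
```

That is why a count of "zero" on such a graph really means "below the limit of detection".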


The bottom line is that the work provides evidence that use of good, antibiotic-producing Staph strains may be useful in treating Staph aureus infections in humans. Further testing is in order. Does the treatment actually reduce disease symptoms, or does it merely reduce the bacterial count? What strains should be used? (The type of personalized treatment used in this work seems impractical for general use. Surely it is not necessary?) What is the appropriate course of treatment? And what are the merits of treating with bacteria vs treating with one or more specific products (antibiotics) from the bacteria?

News stories:

* Next Generation: Personalized Probiotic Skin Care -- Scientists treat Staphylococcus aureus skin infections using lotions made with bacteria from atopic dermatitis patients' own microbiomes. (J A Krisch, The Scientist, February 27, 2017. Now archived.)

* Transplanting Good Bacteria to Kill Staph. (Y Galindo, UC San Diego, February 22, 2017.) From the lead institution. This story notes that a phase II trial is in progress.

The article: Antimicrobials from human skin commensal bacteria protect against Staphylococcus aureus and are deficient in atopic dermatitis. (T Nakatsuji et al, Science Translational Medicine 9:eaah4680, February 22, 2017.)

Background posts:

* Staph in your nose -- making antibiotics (October 9, 2016).

* Can the Staph solve the Staph problem? (July 12, 2010).

Another story of skin bacteria: Propionibacterium acnes bacteria: good strains, bad strains? (April 1, 2013).

Previous post on the development of a probiotic product: A clinical trial of ice cream (June 2, 2015).

Next: Would a probiotic reduce sepsis in newborn babies? (October 20, 2017).

More on antibiotics is on my page Biotechnology in the News (BITN) -- Other topics under Antibiotics. It includes an extensive list of related Musings posts.

April 5, 2017

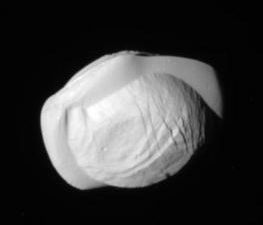

Quiz: what is it?

April 5, 2017

Let's make this multiple choice...

A. A body in the Alpha Centauri star system; photo.

B. A body in the Alpha Centauri star system; artist's conception.

C. A moon of Saturn.

D. A walnut or ravioli, or such.

E. An artificial pollinator.

What do you think it is? The answer is immediately below, so make your choice before proceeding.

This is the first figure from the news story listed below.

Answer and discussion...

A hint? The image was recently returned by the Cassini spacecraft.

This is an image of the Saturnian moon Pan. (That's C, folks.) It is oddly shaped. It has been called a walnut or ravioli, among other things. The reason for its shape is unknown, but probably depends on its relationship with the ring system.

Cassini is near the end of its mission. It is being sent on more risky paths in these final months, with closer approaches to ring material than allowed earlier. In this case, it is about 25,000 kilometers from Pan -- itself about 35 km across.

In September, Cassini will be crashed into Saturn. After all, one would not want to leave it out-of-control in the Saturnian system, where it might crash into one of the moons. It is possible that some of those moons might have life; crashing a spacecraft into them would not be good.

There is no article yet with this photo. NASA releases many images as they become available, and they get noted by the news media. Here is one news story: Cassini, with only a half-year to go at Saturn, just keeps dropping awesome images. (J Davis, Planetary Society, March 9, 2017.) Includes some other recent images from Cassini.

Previous quiz... Quiz: What is it? (August 17, 2015).

* Previous post from Cassini: Venus: an unusual view (March 18, 2013).

* Next: Is there food on Enceladus? (May 21, 2017).

A recent post about the Alpha Centauri system: A better way to get to Alpha Centauri? (March 15, 2017).

Previous post about artificial pollinators: What if there weren't enough bees to pollinate the crops? (March 27, 2017).

Previous posts about walnuts or ravioli: none.

Another oddly-shaped thing out there: Twins? A ducky? Spacecraft may soon be able to tell (August 4, 2014).

The smallest radio receiver

April 4, 2017

It's advertised as a radio built from two atoms. There is a reason for that claim, but it is also an exaggeration, so beware. Nevertheless, it is an interesting radio. It's a radio housed in a diamond.

Listen... video: A diamond radio receiver. (YouTube, 2 minutes. Lots of sound; that's the point.) The music there is from this new radio. And the accompanying background text and pictures give a good idea of what it is about. Feel free to listen just once; you can study how it works later. (The video is also included in the Nanowerk news story.)

The radio makes use of a phenomenon we have encountered before [link at the end]. Diamonds with nitrogen vacancy (NV) defects are fluorescent. Shine green light on them, and they fluoresce red. What's new here is that the intensity of the fluorescence is modulated by radio waves. That is, the output of the radio -- the diamond-based receiver -- is red light whose intensity carries the radio signal. That light signal can be processed by the usual means, and connected to a loudspeaker.

What is an NV defect? A diamond has an orderly array of carbon atoms. Replace one C atom with an N atom, and then remove another C atom right next to it, creating a vacancy (V). That is, two adjacent C atoms of the diamond have been replaced by an N (atom) and a V; that gives an NV defect.

Is this really a two-atom radio? Well, the basic receiver unit is indeed a single NV defect, based on replacing two atoms in a diamond. The actual receiver is macroscopic, and contains billions of those units. Further, the part discussed here is just the receiver, not the entire radio. It's a stretch to talk about a two-atom radio, but there really is a key two-atom part.

An interesting point about this radio is its robustness. It is, after all, made of diamond. The authors test the radio at 350° C. It works, though with reduced signal strength above about 200° C. They suggest that their diamond-based radio receiver may be suitable for use in harsh environments, including corrosive environments and space.

The radio operates in the 2.8 gigahertz band. The authors note that other analogous materials, based on atomic defects, should operate at other frequencies.
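For a sense of scale, 2.8 gigahertz is in the microwave range. Here is a quick conversion from frequency to free-space wavelength (the function name is just illustrative):

```python
SPEED_OF_LIGHT = 299_792_458  # m/s, exact by definition

def wavelength_cm(frequency_hz):
    """Free-space wavelength in centimeters for a frequency in Hz."""
    return SPEED_OF_LIGHT / frequency_hz * 100.0

print(round(wavelength_cm(2.8e9), 1))  # 10.7
```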

News stories:

* World's smallest radio receiver has building blocks the size of 2 atoms (w/video). (Nanowerk, December 19, 2016.)

* Hacked Diamond Makes Two-Atom Radio. (D Maloney, Hackaday, December 20, 2016.) An interesting page. Browse the comments, too.

The article: Diamond Radio Receiver: Nitrogen-Vacancy Centers as Fluorescent Transducers of Microwave Signals. (L Shao et al, Physical Review Applied 6:064008, December 15, 2016.)

Background post, with another use of the fluorescence of nitrogen vacancies of diamonds: Where is the hottest part of a living cell? (September 23, 2013).

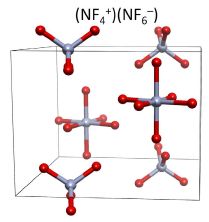

More about nitrogen: How many atoms can one nitrogen atom bond to? (January 17, 2017).

More about atomic vacancies: Progress toward an ultra-high density hard drive (November 9, 2016).

More about diamonds: Ice in your diamond? (April 23, 2018).

Is photosynthesis the ultimate source of primary production in the food chain?

April 2, 2017

Plants fix CO2, using light energy, and that is the start of the food chain. It's an old idea, and oft repeated. Repeated even when we should know better.

Some bacteria fix CO2 without using light energy. They are called chemoautotrophs. The "autotroph" part of the name refers to their use of CO2; "chemo" means they use chemical energy, rather than "photo" energy.

We have long known about chemoautotrophic bacteria. And for decades we have known there are animals that harbor such chemoautotrophs, and use them as their main food. These include organisms near thermal vents, such as the tube worms. Still, we are likely to say that photosynthesis provides the base of the food chain.

A recent article should help us appreciate the importance of the chemoautotrophs.

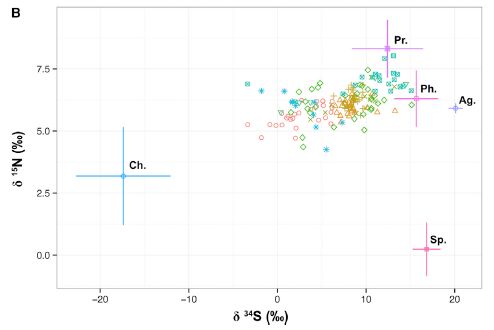

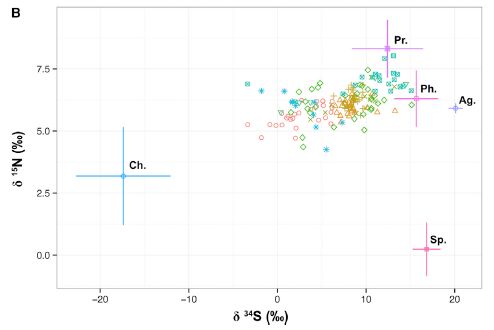

The following figure summarizes some of the findings. It shows certain isotopes found in Caribbean lobsters and in their likely food sources.


This figure shows data for a particular isotope of sulfur (S-34; x-axis) and one of nitrogen (N-15; y-axis).

In each case, the amount of isotope is shown as the difference (delta, δ) between the sample and a standard reference, in parts per thousand (‰).

Note the small numbers -- most less than 10‰ (1%); it requires modern instrumentation to get these measurements. Chemical reactions, including enzymatic reactions, may separate isotopes, but the effects are quite small.
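The delta notation can be written out explicitly. In the usual convention, δ is the relative difference between the heavy-to-light isotope ratio of the sample and that of the standard, multiplied by 1000. A minimal sketch follows; the ratio values are made up for illustration.

```python
def delta_permil(ratio_sample, ratio_standard):
    """Isotope delta value in parts per thousand (per mil).

    Each ratio is heavy isotope / light isotope, e.g. S-34 / S-32.
    """
    return (ratio_sample / ratio_standard - 1.0) * 1000.0

# Hypothetical ratios: the sample is 1% richer in the heavy isotope.
print(round(delta_permil(0.0505, 0.0500), 3))  # 10.0
```

A negative δ simply means the sample has less of the heavy isotope than the standard does.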


There are two kinds of data here.

The crosses show the range of values typical of different types of food. For example, the cross at the left, well isolated from most of the rest of the graph, is what is found for chemoautotrophs, labeled Ch. The other important cross is one of those near the upper left, labeled Ph, for photosynthesis. The food sources differ in isotope ratios because the reactions they use separate isotopes differently. (This is largely an empirical finding.)

The data points are results for individual lobsters. The various colors are for lobsters from various geographical sites; we won't worry much about that.

You can see that the data for the lobsters is to the left of what would be expected if they mainly ate food based on photosynthesis. The lobster data suggests that a substantial fraction of their food is based on chemoautotrophs.

The other food sources shown in the figure are: Ag, algae; Pr, predator; Sp, sponge.

This is Figure 2B from the article. Part A of the figure shows a similar analysis for C-13 vs N-15. The conclusions in the article are based on considering both sets of results together. I show Part B here because it is easier to see the effect visually.


Qualitatively, that's the point. The lobsters' food chain has a substantial contribution from chemosynthesis. They eat a type of clam that depends on chemoautotrophic bacteria. And since these lobsters are a major commercial crop, the work shows that the human food chain, too, has a significant contribution from chemosynthesis. People eat lobsters, which eat clams, which use a chemoautotrophic base. The food chain was suspected. What the current work does is to provide data showing that we can see the chemoautotrophic contribution in the lobster -- our food.

The analysis of the full data suggests that the contribution of the chemoautotrophs to the lobsters is about 10-30%, depending on the specific site.
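How does one get from isotope values to a percentage? The article's analysis is more elaborate, combining several isotopes and food sources. The simplest version of the idea is a two-source mixing calculation, sketched below. The function name and all the numbers are hypothetical, chosen only to illustrate the arithmetic. The sketch also ignores any isotope shift between a food and the animal that eats it.

```python
def chemo_fraction(delta_consumer, delta_photo, delta_chemo):
    """Fraction of the diet from the chemosynthetic source in a two-source linear mix.

    Assumes the consumer's delta value is a weighted average of the two sources.
    """
    if delta_photo == delta_chemo:
        raise ValueError("the two sources must differ in isotope value")
    fraction = (delta_consumer - delta_photo) / (delta_chemo - delta_photo)
    # Values outside 0..1 mean the consumer lies beyond one of the sources; clamp them.
    return min(1.0, max(0.0, fraction))


# Hypothetical S-34 delta values (per mil), for illustration only.
photo_source = 10.0    # food based on photosynthesis
chemo_source = -20.0   # food based on chemoautotrophs
lobster = 4.0          # a consumer shifted toward the chemoautotroph value

print(f"{chemo_fraction(lobster, photo_source, chemo_source):.0%}")  # prints 20%
```

The further a lobster's value sits toward the chemoautotroph cross, the larger the estimated contribution.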

The authors conclude... "As such, lobsters play a key role in transferring chemosynthetically fixed carbon from the deep sediment into the wider marine food web. Ultimately, this includes a contribution to human diets and prosperity in the form of lobster biomass that is worth US$17.4 million to the Bahamas fishery alone." (From the end of the Discussion, p 3397.)

News stories:

* Valuable Caribbean spiny lobsters get their food from an unexpected source. (EurekAlert, December 8, 2016.)

* Caribbean lobster fisheries sustained by 'dark carbon'. (A Merrington, Plymouth University, December 9, 2016.) From the lead university.

* News story accompanying the article: Ecology and Fisheries: Dark Carbon on Your Dinner Plate. (J M Petersen, Current Biology 26:R1277, December 19, 2016.)

* The article, which is freely available: Caribbean Spiny Lobster Fishery Is Underpinned by Trophic Subsidies from Chemosynthetic Primary Production. (N D Higgs et al, Current Biology 26:3393, December 19, 2016.)

More from the Caribbean: Chikungunya in the Americas, 1827 -- and the dengue confusion (April 3, 2015).

More about autotrophic bacteria: Turning E. coli into an autotroph (using CO2 as sole carbon source) (December 9, 2019).

My page of Introductory Chemistry Internet resources includes a section on Nuclei; Isotopes; Atomic weights. It includes a list of related Musings posts, including measurements of isotopes.

Also see:

* Growing food with artificial photosynthesis? (July 9, 2022).

* The new IUPAC periodic table; atomic weight ranges (August 1, 2017).

March 29, 2017

Making triangulene -- one molecule at a time

March 29, 2017

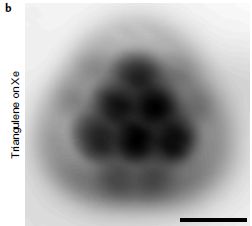

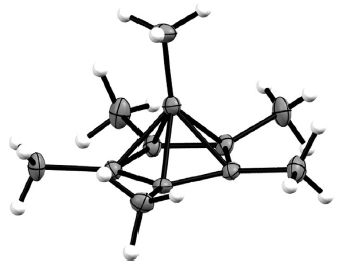

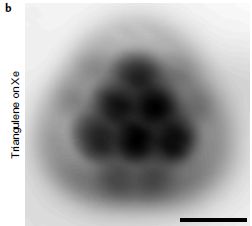

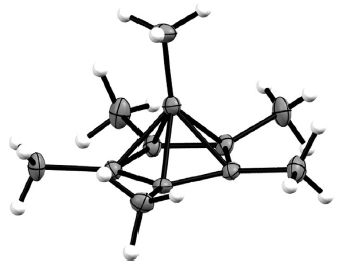

Here it is: a molecule of triangulene...


An image by atomic force microscopy (AFM) of one molecule of triangulene. It is resting on a piece of xenon. Yes, it's cold -- about 5 kelvins.

This is Figure 3b from a new article.


What is triangulene? Start with a benzene ring. You may know that you can connect two benzene rings together, sharing a side; that gives naphthalene. Connect three together, side by side, and you get anthracene. Continue... Connect six together side by side, and you get a bigger molecule, which is still aromatic, like benzene. (It is called hexacene.) In particular, you can draw a structural formula with alternating single and double bonds.

Now, instead of connecting those six benzenes side by side, imagine piling them up: three in a base, two above that, and one on top. You get a triangular structure -- like what is seen above. (A structural formula in a moment.)

But there is a catch. You can indeed stack six hexagons that way, and get a big triangle. However, the resulting chemical is not aromatic: you cannot draw it with alternating single and double bonds.

For those who take that as a challenge, and want to try drawing it... I suggest you use a drawing program, such as ChemSketch. You can lay out the big triangle; that is no problem. But look at the bonding. And to make it clearer, I suggest that you turn on the display of all hydrogens. You will see at least one C that has 2 H on it, in addition to 2 C. That is a "saturated", sp3-hybridized C -- and a sure sign this is not one big aromatic ring system.
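The bonding problem can also be checked by counting, without drawing anything. In a network of fused hexagons, the carbons fall into two sets, with every bond joining a carbon of one set to a carbon of the other. A structure with alternating single and double bonds pairs each carbon with exactly one neighbor through a double bond, so the two sets must be the same size. The sketch below builds the carbon skeleton from a list of hexagons and counts the two sets. The grid coordinates and function names are my own bookkeeping, not anything from the article.

```python
from collections import deque

# Corner offsets of one hexagon, in order around the ring, on an integer grid.
RING = [(0, 2), (1, 1), (1, -1), (0, -2), (-1, -1), (-1, 1)]


def carbon_skeleton(hexagons):
    """Return {atom: set of bonded atoms} for fused hexagons given as (q, r) cells."""
    bonds = {}
    for q, r in hexagons:
        cx, cy = 2 * q + r, 3 * r  # hexagon center on the integer grid
        ring = [(cx + dx, cy + dy) for dx, dy in RING]
        for a, b in zip(ring, ring[1:] + ring[:1]):
            bonds.setdefault(a, set()).add(b)
            bonds.setdefault(b, set()).add(a)
    return bonds


def sublattice_counts(bonds):
    """Two-color a connected skeleton so bonded atoms differ; return the sorted set sizes."""
    start = next(iter(bonds))
    color = {start: 0}
    queue = deque([start])
    while queue:
        atom = queue.popleft()
        for neighbor in bonds[atom]:
            if neighbor not in color:
                color[neighbor] = 1 - color[atom]
                queue.append(neighbor)
    ones = sum(color.values())
    return tuple(sorted((len(color) - ones, ones)))


hexacene = [(q, 0) for q in range(6)]                              # six in a row
triangulene = [(0, 0), (1, 0), (2, 0), (0, 1), (1, 1), (0, 2)]     # 3 + 2 + 1

for name, cells in [("hexacene", hexacene), ("triangulene", triangulene)]:
    skeleton = carbon_skeleton(cells)
    print(name, len(skeleton), "carbons, sets of", sublattice_counts(skeleton))
```

Hexacene comes out balanced, 13 and 13. Triangulene has 22 carbons split 10 and 12, so two carbons are always left without a partner for a double bond, however you draw it.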

The anomaly of triangulene was recognized decades ago. Attempts to make it failed, presumably because it was unstable.

A new article reports a synthesis of triangulene. It's of interest for fulfilling the promise -- and for the unusual route of synthesis.

The following figure shows how the scientists made it; it also shows the strange feature of triangulene.

Triangulene is the upper compound in the figure, labeled compound 1. Below it is the compound the scientists made it from, labeled 2b.

The only difference is at the two positions with dots in triangulene. But to understand this, you need to remember a convention in drawing organic chemical structures: hydrogen atoms are often omitted. At those positions, there are two H in compound 2b -- but only one in compound 1. That is, in going from 2b to 1, two H were removed -- as it says on the arrow between them.


Those two dots on the triangulene? They represent unpaired electrons, left after removing a hydrogen atom at each position. Triangulene is a "radical", a chemical species with unpaired electrons. It is a di-radical.

How did the scientists remove the two H? They used an AFM tip to deliver a voltage to the molecule. The C-H bonds at the positions that carry two H are the weakest bonds in the molecule, so each of those positions loses one hydrogen atom.

This is part of Figure 1 from the article. (The full figure shows some variations of the starting material. I chose to show only one: the one where it is easiest to see what is going on.)


Radicals, with their unpaired electrons, tend to be unstable. That is presumably why previous syntheses of triangulene failed. In this work, the triangulene molecules were stable over a few days of observation; that was under the extreme conditions of ultra-high vacuum and low temperature.

That's the synthesis of triangulene. A molecule that is more complicated than it might appear, now made one molecule at a time.

Some think that triangulene could be useful in electronics, including in quantum computers. Interestingly, some think that making it one molecule at a time might provide a useful supply.

News stories:

* Researchers use new approach to create triangulene molecule. (B Yirka, Phys.org, February 14, 2017.)

* Elusive triangulene created by moving atoms one at a time. (P Ball, Nature News, February 13, 2017.)

* News story accompanying the article: Graphene fragments: When 1 + 1 is odd -- Triangulene, an elusive open-shell magnetic molecule, is synthesized and characterized by electron microscopy. (M Melle-Franco, Nature Nanotechnology 12:292, April 2017.)

* The article: Synthesis and characterization of triangulene. (N Pavlicek et al, Nature Nanotechnology 12:308, April 2017.)

More about radicals: Science myths (February 23, 2016).

Also see:

* Hückel at 40 -- that's n = 40: the largest known aromatic ring (February 1, 2020).

* The longest acene (September 6, 2017).

This post is listed on my page Introduction to Organic and Biochemistry -- Internet resources in the section on Aromatic compounds. That section includes a list of related Musings posts.

A classic example of using the AFM to move atoms: The 35 most famous xenon atoms (June 29, 2010).

A recent example... A new form of carbon: C18 (September 24, 2019).

For more on AFM and related techniques, see a section of my page of Internet Resources for Introductory Chemistry: Atomic force microscopy and electron microscopy (AFM, EM).

What if there weren't enough bees to pollinate the crops?

March 27, 2017

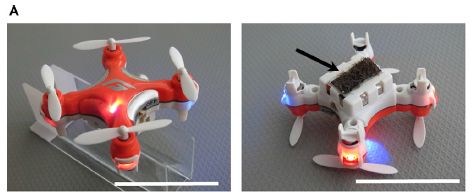

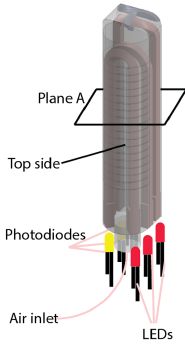

Perhaps we could use one of these, as described in a recent article...

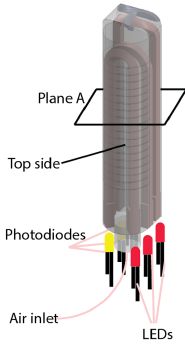

It's probably clear how that device, as seen in the basic view on the left, could fulfill one bee function: flying.

What about pollinating? Turn it over and look at its bottom side -- shown at the right. The arrow points to a black strip. It is sort of a sticky tape, designed to pick up, carry, and release pollen. We'll come back to this in a moment.

The scale bar is 4 centimeters. The device is described as insect-sized. That's a rather big insect!

This is Figure 4A from the article.


The main scientific development in the article is to find materials suitable for the pollen transfer steps. The pollination pad is a hairy structure, coated with a sticky gel. The gel is based on ionic liquids. In fact, the article is in a chemistry journal, and is presented as an application of ionic liquid gels.

The article reports examples of using the ionic liquid gels. Ants coated with the gel collected pollen. Then unmanned aerial vehicles (drones) were equipped with hairs impregnated with the gel (as shown in the right-hand figure above); they served to transfer pollen from one flower to another. (The drone used here is a commercially available radio-controlled drone.)

The point, then, is that the authors have developed an artificial pollinator. It can fly around, alight on flowers, and transfer pollen from one to another. It's proof of principle.

It's cute, even clever. But is it a good idea? One of the news stories listed below is quite negative about the development. After all, if we take care of our bees, we could use natural pollinators. The title of this post plays into that author's concerns.

But maybe that's not the right way -- or, at least, the only way -- to think about this. We try to develop an artificial pollinator because we can. Maybe it will work. Maybe it will be cheaper or more reliable than the natural pollinator. Or maybe it won't. We might use automated pollination in cases where pollination is now done by hand. And, yes, we might use an artificial pollinator if the bees are not available. Taking care of the bees is a separate issue. (The way bees are maintained for commercial pollination is hardly a model for wise stewardship of our animals.)

This article may be not only cute and clever, but also thought-provoking. Enjoy -- at many levels.

News stories:

* Sticky gels turn insect-sized drones into artificial pollinators. (Phys.org, February 9, 2017.)

* Artificial pollinators are cool, but not the solution. (M Saunders, Ecology is not a dirty word, February 11, 2017.)

Video. There are various short video clips around. They are fun, but they are too fast to follow. Try them if you want; you might even try slowing them down.

* News story accompanying the article: Sticky Solution Provides Grip for the First Robotic Pollinator. (G J Amador & D L Hu, Chem 2:162, February 9, 2017.)

* The article: Materially Engineered Artificial Pollinators. (S A Chechetka et al, Chem 2:224, February 9, 2017.)

Recent post about bees: Bumblebees play ball (March 20, 2017).

Recent post about robots: A robot that can feed itself (February 3, 2017).

More drones:

* Using drones to count wildlife (May 15, 2018).

* Crashworthy drones, wasp-inspired (October 16, 2017).

Posts about pollinators include...

* Why growing sunflowers face the east each morning (November 8, 2016).

* A "flower" that bites -- and eats -- its pollinator (December 27, 2013). Hm, I wonder what would happen...

* Caffeine boosts memory -- in bees (April 12, 2013).

* Bees -- around you (June 11, 2009).

and maybe... Quiz: what is it? (April 5, 2017).

Also see: Progress toward an artificial fly (December 6, 2013).

A possible genetic cause for the large human brain

March 25, 2017

It's the gene ARHGAP11B.

The gene ARHGAP11B is unique to humans. It's very similar to a gene called ARHGAP11A, which is widespread in other organisms. A team of scientists has now explored the nature of these two genes, and has suggested a special role of ARHGAP11B in the development of the human brain.

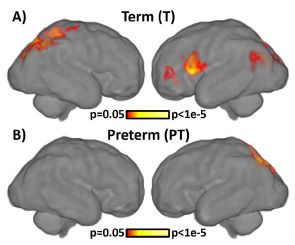

For simplicity, let's refer to the genes as B and A.