Musings is an informal newsletter mainly highlighting recent science. It is intended as both fun and instructive. Items are posted a few times each week. See the Introduction, listed below, for more information.

If you got here from a search engine... Do a simple text search of this page to find your topic. Searches for a single word (or root) are most likely to work.

Introduction (separate page).

This page:

2018 (May-August)

August 29

August 22

August 15

August 8

August 1

July 25

July 18

July 11

July 3

June 27

June 20

June 13

June 6

May 30

May 23

May 16

May 9

Also see the complete listing of Musings pages, immediately below.

All pages:

Most recent posts

2026

2025

2024

2023:

January-April

May-December

2022:

January-April

May-August

September-December

2021:

January-April

May-August

September-December

2020:

January-April

May-August

September-December

2019:

January-April

May-August

September-December

2018:

January-April

May-August: this page, see detail above

September-December

2017:

January-April

May-August

September-December

2016:

January-April

May-August

September-December

2015:

January-April

May-August

September-December

2014:

January-April

May-August

September-December

2013:

January-April

May-August

September-December

2012:

January-April

May-August

September-December

2011:

January-April

May-August

September-December

2010:

January-June

July-December

2009

2008


Archive items may be edited, to condense them a bit or to update links. Some links may require a subscription for full access, but I try to provide at least one useful open source for most items.

Please let me know of any broken links you find -- on my Musings pages or any of my web pages. Personal reports are often the first way I find out about such a problem.

August 29, 2018

A Neanderthal-Denisovan hybrid. DNA analysis of an ancient human bone shows that it came from a person whose parents were one Neanderthal and one Denisovan. A milestone, yet inevitable.

* News story: Mum's a Neanderthal, Dad's a Denisovan: First discovery of an ancient-human hybrid -- Genetic analysis uncovers a direct descendant of two different groups of early humans. (M Warren, Nature News, August 22, 2018. In print, with a different title: Nature 560:417, August 23, 2018.) It links to the article.

* A background post... A person who might, just possibly, have met his Neandertal ancestor (June 30, 2015).

* and then ... Denisovan man: beyond Denisova Cave (May 7, 2019).

August 28, 2018

About 14,000 years old.

Here is what it looks like...

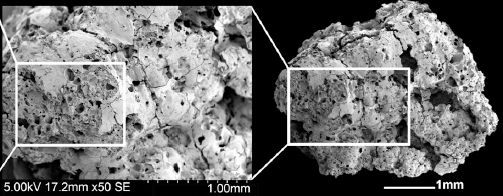

The figure shows images of a piece of bread, at two magnifications.

Start at the right. The boxed part is shown at higher magnification to the left. This is part of Figure 3A from the article.

That's the stuff. It was found in a "fireplace" at an ancient archeological site in Jordan.

What is bread? Well, take some grain (cereal), process it to make some kind of flour, add water, and bake. The structure seen in the images above shows the porosity typical of bread. (This would be a "flatbread", unleavened.)

Why is this interesting -- assuming that the basic claim is correct? The date. It's from a hunter-gatherer society, 4,000 years before farming. At least in this case, it seems that the development of a processed food preceded intentional cultivation of the crop. Bread-making is a complex process; the nature of the bread material studied here suggests great care in its preparation.

The article concludes with some speculation on the purpose of the bread. It is possible that the bread, difficult to make and expensive, was a luxury food used for special feasting. But that is indeed largely speculation at this point.

The work is also interesting for the approach. The authors note that the study of charred food remains from archeological sites is unusual.

News stories:

* Archaeologists discover bread that predates agriculture by 4,000 years. (Phys.org, July 16, 2018.)

* Ancient Bread: 14,400-Year-Old Flatbreads Unearthed in Jordan. (S D Pfister, Biblical Archaeology Society, July 25, 2018.) Excellent overview of the entire story.

The article, which is freely available: Archaeobotanical evidence reveals the origins of bread 14,400 years ago in northeastern Jordan. (A Arranz-Otaegui et al, PNAS 115:7925, July 31, 2018.)

Figure 2 of the article is a nice picture of the fireplace -- with a sunset in the background. (That figure is also in the news story by Pfister.)

* * * * *

More old food: The oldest known piece of cheese (April 25, 2014).

More from ancient Jordan: The case of the missing incisors: what does it mean? (September 13, 2013).

More from a hunter-gatherer culture: The earliest human warfare? (February 17, 2016).

August 26, 2018

The common yeast, Saccharomyces cerevisiae, has a haploid chromosome number of n = 16. (Humans have n = 23.) Two new articles, published together, report the construction of yeast strains with only one or two chromosomes. In these strains, all of the usual small chromosomes have been fused into one or two big chromosomes, which contain the same information.

We'll focus here on article #1, which reports n = 1. Article #2 reports n = 2; however, the similarities between the articles are more important than the differences, at least for now.

The yeast genome is well characterized. Logically, the task is straightforward: just combine all the short pieces of chromosomal DNA into one long piece. Extra copies of special chromosome features, such as centromeres and telomeres, need to be removed. That's all logical. Of course, it is a huge technical achievement to get it all done. (Hint... They used CRISPR.)
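The logic of the fusion can be shown with a toy model. This is only a sketch under stated assumptions: each chromosome is reduced to a list of labels (a telomere at each end, one centromere, and some generic genes), and the helpers `make_chromosome`, `fuse`, and `count_feature` are hypothetical, not anything from the articles. The real fusions were done step by step in living cells. The bookkeeping it illustrates is simple: 16 chromosomes carry 16 centromeres and 32 telomeres, while one chromosome needs only 1 and 2, so 15 centromeres and 30 telomeres have to go.

```python
# Toy model only: each chromosome is a list of feature labels.
TEL, CEN, GENE = "TEL", "CEN", "GENE"  # telomere, centromere, any other DNA


def make_chromosome(n_genes: int) -> list[str]:
    """Hypothetical helper: telomere, genes, one centromere, genes, telomere."""
    left = n_genes // 2
    return [TEL] + [GENE] * left + [CEN] + [GENE] * (n_genes - left) + [TEL]


def fuse(a: list[str], b: list[str]) -> list[str]:
    """Hypothetical helper: join two chromosomes end to end.

    The telomeres at the junction are dropped, and every centromere after
    the first is deleted, so the result keeps exactly one centromere.
    """
    joined = a[:-1] + b[1:]  # drop a's right telomere and b's left telomere
    first_cen = joined.index(CEN)
    tail = [f for f in joined[first_cen + 1:] if f != CEN]
    return joined[: first_cen + 1] + tail


def count_feature(chromosomes: list[list[str]], feature: str) -> int:
    """Total number of a given feature across a set of chromosomes."""
    return sum(c.count(feature) for c in chromosomes)


genome = [make_chromosome(10) for _ in range(16)]  # n = 16
print(count_feature(genome, CEN), count_feature(genome, TEL))  # 16 32

single = genome[0]
for chrom in genome[1:]:  # 15 rounds of fusion
    single = fuse(single, chrom)

print(count_feature([single], CEN), count_feature([single], TEL),
      count_feature([single], GENE))  # 1 2 160
```

All 160 genes of this toy genome survive; only the duplicated structural features are removed, which is the sense in which the one big chromosome contains the same information as the 16 small ones.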

Why would one want to do this? Well, we really don't know what would happen. Simple principles of genetics suggest that the number of chromosomes shouldn't matter. On the other hand, molecular biologists are increasingly learning about how chromosomes are organized within the cell. It's not at all clear that making a major change in chromosome organization is allowed. Let's try it and see.

So they do it. The resulting strains grow -- almost as well as the original strains, but not quite. Both parts of that are of interest.

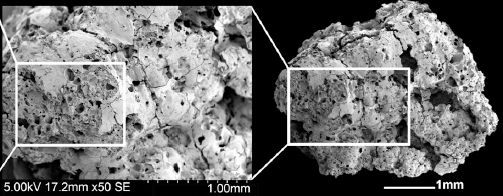

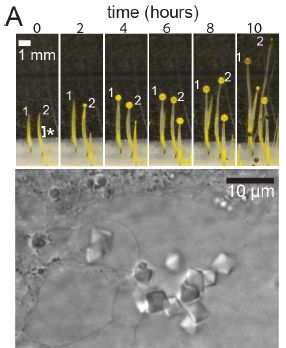

The first figure here shows the technical success...

Each (vertical) lane shows the results of electrophoresis of the intact chromosomes of one yeast strain. Smaller chromosomes move through the gel faster; larger ones get caught up and move more slowly.

Each lane is labeled at the top with the strain number. Lanes labeled "marker" have DNA pieces of known size; these are used to calibrate the gel. (Mb = megabases.) There is no marker lane in the first gel. The smallest yeast chromosome is about 0.23 Mb.

Big picture... Strain BY4742, at the left, is the original strain, with 16 chromosomes. In some cases, two chromosomes are about the same size, and run together as a single band. So there aren't 16 bands, but there are quite a few -- labeled with the chromosome numbers. Strain SY14, at the right, is the final constructed strain, with one big chromosome. There is only one band, at the very top. Results for several intermediate strains are also shown. In general, as you go from left to right, small bands (for old chromosomes) disappear and big bands (for new chromosomes) appear. Bands for new chromosomes are marked with red arrows.

This is Figure 2a from article 1.

The chromosome bands above show that the scientists succeeded. They started with a strain with 16 small chromosomes, and made one with one big chromosome.

There is a little bonus. They couldn't have done that final analysis unless the strain grew. The mere fact that we have an analysis of strain SY14 means that the new strain grew -- at least well enough to grow up a culture to analyze.

How well does it grow? The following graphs provide some basic results...

The two graphs in part c (top) show growth curves for the original and new strains. The left-hand graph is for the haploid forms; the right-hand graph is for the diploid forms. In both cases, the growth curves for the two strains are similar. However, it is also true that the new strain (SY14; red curves) grows a little worse. Is that a significant difference? One way to test that is to grow the two strains together, so that they actually compete with each other.

Part d shows a competition test. The y-axis shows the percentage of each strain in the mixed culture over time. The culture starts with about 50% of each. You can see that the percentage of the old strain rises and the percentage of the new strain falls. After three days, the old strain has taken over.

For part d, I said that the y-axis is the percentage of each strain. It is not explicit on the graph or in the article, but I am fairly sure that I have interpreted it correctly.

This is part of Figure 5 from article 1.

So, the new strain grows -- rather well, but not as well as the original strain.
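A competition experiment turns a small growth difference into a large, easily measured change in proportions. One common way to summarize such an experiment is a per-day selection coefficient computed from the change in the log-odds of one strain's share of the culture. The sketch below uses that standard measure; it is not stated that article 1 analyzed its data this way, the function `selection_coefficient` is a hypothetical helper, and the input percentages are invented, not read from Figure 5.

```python
import math


def selection_coefficient(p_start: float, p_end: float, days: float) -> float:
    """Per-day selection coefficient of strain A relative to strain B.

    p_start, p_end: fraction of the mixed culture that is strain A at the
    start and end of the competition (both strictly between 0 and 1).
    s = ln[(p_end / (1 - p_end)) / (p_start / (1 - p_start))] / days
    """
    odds_start = p_start / (1 - p_start)
    odds_end = p_end / (1 - p_end)
    return math.log(odds_end / odds_start) / days


# Hypothetical numbers: the original strain starts at 50% of the culture
# and makes up 95% of it after 3 days.
s = selection_coefficient(0.50, 0.95, 3)
print(f"{s:.2f} per day")  # 0.98 per day
```

Under simple exponential growth, the odds of strain A vs strain B change by a factor of e^(rA - rB) per day, so s is just the difference between the two growth rates. That is why a difference that is barely visible in separate growth curves can become obvious after a few days of competition.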

Why doesn't the new strain grow as well as the original? The article provides considerable characterization of the new strain, but does not provide an answer at this point. We can only briefly note some possibilities.

There are two broad types of reasons why the new strain doesn't grow as well as the original strain. One is that there is some mistake along the way. A new mutation might have been introduced, for example. The scientists note a possible problem with the promoter for one gene, but have not yet resolved it.

The other type of reason is that there is something unfavorable about the new chromosome. We might subdivide that further into explanations of detail, such as two genes now being near each other and functioning poorly that way, and explanations that fundamentally involve having only one chromosome.

Further work will presumably reveal information about why the new single-chromosome strain doesn't grow as well as the multi-chromosome parent. It may or may not be interesting.

One type of experiment is to let the new strain grow and see what mutations develop to improve its growth. This is an artificial selection in the lab, mimicking what would happen in nature.

There is another point to note about the new strain. It is quite defective in completing the sexual cycle when mated with the original strain. It is, in effect, a new species. (This issue is discussed most extensively in article 2.)

The new strain, a Saccharomyces with only one chromosome in its haploid set, raises many questions. It will be the subject of much study in the coming years.

News stories:

* Researchers Fuse Chromosomes to Create New Yeast Strains. (D Kwon, The Scientist, August 1, 2018.) Now archived.

* Gene editing crunches an organism's genome into single, giant DNA molecule -- The yeast seem to grow just fine with all their genes on a single piece of DNA. (J Timmer, Ars Technica, August 3, 2018.)

* Creating a functional single chromosome yeast. (X Xue, Nature Research Bioengineering Community, August 1, 2018.) By one of the authors of article 1.

News story accompanying the two articles: Genome editing: Chromosomes get together. (G Liti, Nature 560:317, August 16, 2018.)

Two articles:

1) Creating a functional single-chromosome yeast. (Y Shao et al, Nature 560:331, August 16, 2018.) Produced a strain with n = 1.

2) Karyotype engineering by chromosome fusion leads to reproductive isolation in yeast. (J Luo et al, Nature 560:392, August 16, 2018.) Produced a strain with n = 2.

Another example of developing reproductive isolation in a yeast: Making a new species in the lab (July 26, 2015). The yeast here is Schizosaccharomyces pombe.

Among other posts about yeasts:

* What if a yeast cell contained a bacterial cell? A step toward understanding the evolution of mitochondria? (January 29, 2019).

* The history of brewing yeasts (October 28, 2016).

* How to confuse a yeast -- a sensory illusion (January 15, 2016).

There is more about genomes on my page Biotechnology in the News (BITN) - DNA and the genome. It includes an extensive list of related Musings posts.

August 24, 2018

The broad topic of water on Mars remains controversial. For one thing, there are multiple parts to it. Surface water, or sub-surface? Long term, or transient? And of course, solid or liquid?

A new article claims finding a sub-surface lake on Mars. It's probably not very convincing, but it is interesting.

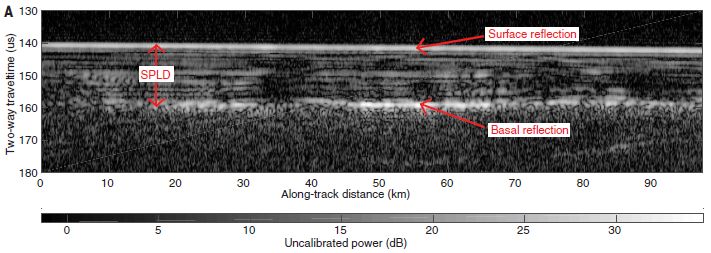

The argument has two parts. The first part is finding an unusual reflection, by radar. Here's that evidence...

The Mars Express spacecraft is orbiting Mars. In this work, it is sending out radar signals, and measuring the strength of the signal that is reflected back.

The graph axes effectively are vertical position (e.g., height or depth; y-axis) vs horizontal position along the surface (x-axis). (We'll clarify the y-axis scale below.) The x-axis shows that a swath of the surface about 100 kilometers long was examined here. The color (or shading) encodes the strength of the signal sent back as a radar reflection. The whiter (brighter) the color shown, the stronger the signal. (The calibration bar at the bottom of the figure shows the detail.)

Look at the y-axis value of 140. There is a bright line across the entire surface at that "height". A rather uniform bright line. That's the planet surface, as labeled (red arrow). There are various other horizontal streaks. Of particular interest, there is a bright streak at y-axis = 160 and x-axis = 45 to 65. It's labeled "basal reflection".

This is Figure 2A from the article.

The y-axis is labeled in time units. It is the time for the radar signal to travel to its target and back. Thus it is a measure of the distance from the radar source (in the orbiting spacecraft) to the reflecting structure. Since the signal travels through various materials, with different speeds, the relationship between time and distance is complex. However, the scientists can estimate the depth.

Part B of the figure plots the same results as power vs position. In the figure above, power is encoded in the shading, as shown in the bar at the bottom. In B, it is shown as a numerical value, on the y-axis.

SPLD? (The vertical red arrow near the left.) South Polar Layered Deposits.

That "basal reflection" is the focus of the story. It is about 1.5 km (or one mile) underground. That's part one of the argument: there is an unusual reflection; you can see it in the figure above.
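The conversion from echo delay to depth can be sketched with one formula: depth = v × Δt / 2, where Δt is how much later the deep echo arrives than the surface echo, v = c / √ε is the radar speed in a material of relative permittivity ε, and the factor of 2 accounts for the round trip. Assumptions, not taken from the article: the y-axis is in microseconds, the two echoes are about 20 μs apart (160 vs 140), and the ice is treated as uniform pure water ice with relative permittivity about 3.1. The helper `reflector_depth` is hypothetical; the authors' own estimate accounts for the real, more varied materials.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def reflector_depth(extra_delay_us: float, rel_permittivity: float) -> float:
    """Depth (m) of a reflector below the surface echo.

    extra_delay_us: how much later (microseconds) the deep echo returns
    than the surface echo. The delay covers the trip down and back up,
    so the distance travelled is halved.
    rel_permittivity: relative permittivity of the material in between;
    the radar wave slows to c / sqrt(permittivity) inside it.
    """
    speed = C / math.sqrt(rel_permittivity)
    return speed * extra_delay_us * 1e-6 / 2


# Assumed: 20 us extra delay through uniform ice with permittivity ~3.1.
print(f"{reflector_depth(20, 3.1):.0f} m")  # 1703 m
```

The result, about 1.7 km, is in the same range as the depth of about 1.5 km given in the article; the gap is a reminder that treating the ice cap as uniform is only an approximation.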

Part two? The authors argue that the observed reflection is due to liquid water -- a lake. More specifically, they argue that it is due to a boundary between water and rock.

How do they get to water as a conclusion? It involves trying to understand the signal strength, which depends on the properties of the material. In particular, it depends on an electrical property called the dielectric permittivity. The analysis is complicated, because the radar signal passes through various materials. What the authors do is to model the radar path, and make some estimates and assumptions. The permittivity of liquid water is distinctively high; the modeling strongly suggests that a layer of such high permittivity is part of the path. Thus they conclude that there is a layer of liquid water. It may be more like slush than simple water, but still, something that has some basic properties of liquid water.
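Why does a high permittivity point to water? A simplified way to see it, not the authors' full model, is the normal-incidence Fresnel formula for the fraction of radar power reflected at a flat boundary between two low-loss, non-magnetic materials: R = ((√ε1 - √ε2) / (√ε1 + √ε2))². The permittivities below are assumed, rough textbook-style values (ice about 3.1, rock about 8, liquid water about 80), the helper `power_reflection` is hypothetical, and the sketch ignores signal loss in the overlying ice, which the real analysis has to model.

```python
import math


def power_reflection(eps_above: float, eps_below: float) -> float:
    """Fraction of radar power reflected at a flat boundary (normal incidence).

    Simplified Fresnel formula for low-loss, non-magnetic materials:
    R = ((sqrt(e1) - sqrt(e2)) / (sqrt(e1) + sqrt(e2)))**2
    """
    n1, n2 = math.sqrt(eps_above), math.sqrt(eps_below)
    return ((n1 - n2) / (n1 + n2)) ** 2


# Assumed, rough relative permittivities (not values from the article).
ICE, ROCK, WATER = 3.1, 8.0, 80.0

r_rock = power_reflection(ICE, ROCK)
r_water = power_reflection(ICE, WATER)
print(f"ice/rock:  {r_rock:.3f}")   # ice/rock:  0.054
print(f"ice/water: {r_water:.3f}")  # ice/water: 0.450
print(f"ratio: ~{r_water / r_rock:.0f}x")  # ratio: ~8x
```

With these assumed values, an ice/water boundary reflects roughly 8 times more power than an ice/rock boundary. That kind of contrast is why an unusually bright basal echo is suggestive of liquid water, once losses along the path have been accounted for.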

The reflection is clear. The interpretation is difficult. The authors have put it out there, to be studied further.

News stories:

* First firm evidence for liquid water on Mars. (K Krämer, Chemistry World, July 25, 2018.)

* Underground Lake Found on Mars? Get the Facts. (N Drake, National Geographic, July 25, 2018. Now archived.)

News stories in the journal:

* Planetary science: Lake spied deep below polar ice cap on Mars. (D Clery, Science 361:320, July 27, 2018 (the preceding issue).)

* Planetary science: Liquid water on Mars -- A water body exists below the martian south polar ice cap. (A Diez, Science 361:448, August 3, 2018.)

The article: Radar evidence of subglacial liquid water on Mars. (R Orosei et al, Science 361:490, August 3, 2018.)

More about water on Mars:

* Water on Mars? InSight finds none (September 19, 2022).

* Another underground lake on Mars -- near the equator? (April 5, 2022).

* Is Mars wetter than Earth -- underground? (February 9, 2018).

* Water at the Martian surface? (August 27, 2011).

More about Mars: Mars wobbles, too (January 24, 2021).

More about sub-surface water "out there": Europa is leaking (February 10, 2014).

... and "here": Life in an Antarctic lake (April 22, 2013).

August 22, 2018

1. The May 2018 Ebola outbreak in the Democratic Republic of the Congo (DRC) is over. About three months from first reported cases to a formal declaration of the end. In general, the DRC and partners around the world are getting high marks for how they handled the outbreak, which seemed to have the potential to become very serious. The experimental vaccine and ring vaccination protocol were used here. As far as we know, no vaccinated individuals got Ebola; it is hard to know the significance of that result, since there was no control group. News story: DRC declares end to Ebola outbreak. (L Schnirring, CIDRAP, July 24, 2018.) The bad news... A few days after the official end of the May outbreak, a new Ebola outbreak in the DRC was reported; it is an independent outbreak -- and shows signs of becoming very serious. I have added the information in this note to my page Biotechnology in the News (BITN) -- Other topics in the section Ebola and Marburg (and Lassa).

2. Direct-to-consumer genetic testing. It's not (quite) practical yet to test your own genes, but it is practical -- and inexpensive -- to pay a company to do genetic tests for you. It has become quite a business. Here is a bioethics column with a skeptical view: Opinion: Consumer DNA Testing Is Crossing into Unethical Territories -- Data don't support many direct-to-consumer products, from telomere assessments to bespoke diets based on genetic sequences. (J D Loike, The Scientist, August 16, 2018. Now archived.) This is one person's view. As so often, it is best read for the questions it raises. I have added this article to my page Biotechnology in the News (BITN) -- Other topics in the section Ethical and social issues; the nature of science.

August 21, 2018

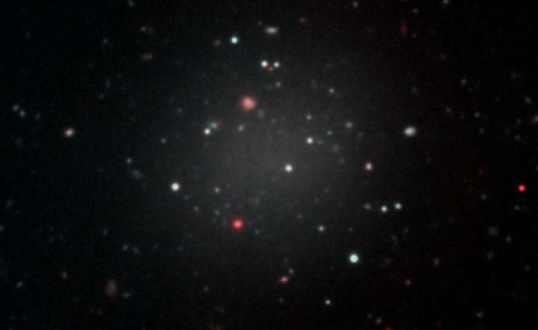

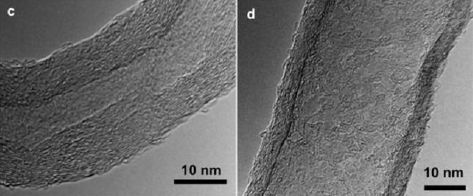

A human skull. Much of the top has been removed.

It dates to about 300 BC, and came from what is now Peru. It is presented in a recent article as an example of ancient Inca skull surgery. This is Figure 3 from the article.

The arrow marks a depressed region. It is presumably from an injury, perhaps the one that prompted this surgery.

The article is fascinating -- and a little weird. It is a survey of trepanation in Peru, over 2000 years. It is based on analysis of skulls found in collections as well as from the authors' own field work.

Here is the opening of the article: "Trepanation, or trephination, the scraping, cutting, or drilling of an opening into the cranium, was practiced in various parts of the world in prehistoric times, dating back 5000 years ago in Europe and to around 2500 years ago in the New World. Interestingly, more prehistoric trepanned crania have been found in Peru than any other location in the world. And even more interesting, the survival rates for the ancient procedure in Peru rival those for trepanation done during other ancient and medieval times and through the American Civil War in the 19th century, ..." (It then goes on to discuss possible complications.)

From examination of the skulls, the authors suggest the purpose of the surgery, and comment on other injuries they see. They estimate survival, by looking for signs of healing. That leads them to the survival estimates noted above. How good are those survival estimates? How representative is the available skull sample of the entire surgical experience? It's hard to know. But the authors do suggest conclusions, such as that the survival rate was better for the Incas in the 15th century than for the Americans in the Civil War (19th century). How much that comparison might be affected by the nature of the injuries is not addressed.

It is interesting medical history.

News stories:

* Holes in the head. (M Bell, Phys.org, June 8, 2018.)

* The Incas were better at skull surgery than Civil War doctors. (M Andrei, ZME Science, June 8, 2018.)

The article: Trepanation Procedures/Outcomes: Comparison of Prehistoric Peru with Other Ancient, Medieval, and American Civil War Cranial Surgery. (D S Kushner et al, World Neurosurgery 114:245, June 2018.) The article is described as a "Historical Vignette".

Want more? The authors have a recent book on the subject. See #1, by Verano, in the reference list in the article. The article also notes (but does not list) an earlier book on the topic, by Hippocrates (460-377 BC).

* * * * *

More about skull injuries:

* Head injuries in Neandertals: comparison with "modern" humans of the same era (February 22, 2019).

* Stone age human violence: the Thames Beater (February 5, 2018).

Posts about making, using, or fixing skull holes include:

* Need a new bone? Just print it out (November 13, 2016).

* A microscope small enough that a mouse can wear it on its head (November 12, 2011).

More historic skulls: An interesting skull, and a re-think of ancient human variation (November 12, 2013).

More from the Incas: A new approach for testing a Llullaillaco mummy for lung infection (August 17, 2012).

My page Internet resources: Biology - Miscellaneous contains a section on Medicine: history. It includes a list of some related posts.

August 19, 2018

The following figure shows a family tree for animals of the phylum Cnidaria -- the phylum that includes the jellyfish, sea anemones, corals, and more. Simple animals, without an organized brain. Of particular interest are the blue boxes; they show which groups include animals that have eyes.

Five of the groups shown across the top are marked with blue boxes. That's five out of 13.

Those groups with eyes seem to have arisen independently. None of the ancestral groups (below the top row) have blue boxes; none of them are thought to have had eyes.

This is part of the graphical abstract from the article.

The more complete results within the article suggest that eyes arose (at least) eight times within the Cnidaria.

Eyes use a protein called opsin. Opsin proteins, in fact, serve as light receptors for animals without eyes; they may be universal in cnidarians. Opsin proteins -- and some ability to detect light -- came first; eyes, using opsins, came later. Makes sense. Interestingly, the authors found that the family tree for opsins agreed with the family tree for eyes. That supports their claim that the many cnidarian eyes developed independently.

This is a big-picture story: a broad survey of the features of 1100 species of cnidarians. It reveals some patterns. It remains for future studies to work out the details of eye types and functions in these simple animals. But the big picture is that there is a complex story of eye origins in animals as simple as jellyfish, just as there is in more complex animals.

News stories:

* The eyes have it! (K Freel, Molecular Ecologist, July 20, 2018.)

* Without Batting an Eye. (J Cohen, University of California Santa Barbara, July 19, 2018.)

The article: Prolific Origination of Eyes in Cnidaria with Co-option of Non-visual Opsins. (N Picciani et al, Current Biology 28:2413, August 6, 2018.)

Musings has discussed the eyes of the box jellyfish (Cubozoa): How many eyes does it have? (March 12, 2010). Links to more -- both about this animal and about other unusual animal eyes.

More about opsins: A better understanding of the basis of color vision (February 1, 2013).

More about cnidarians: The immune response of cnidarians (e.g., corals) (November 1, 2021).

August 17, 2018

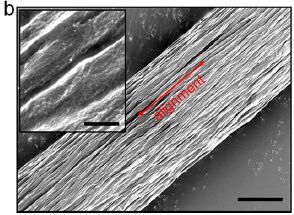

A new article explores another way to make replacement organs. It's rather preliminary, but interesting and encouraging.

The idea is to grow organs in the lab, from cells. To aid in forming an organ in the lab, the cells are grown on a scaffold consisting of an empty organ. What's an empty organ? It is an organ that has been decellularized: all the original cells have been removed. That is, it provides structure, but no biological function beyond that.

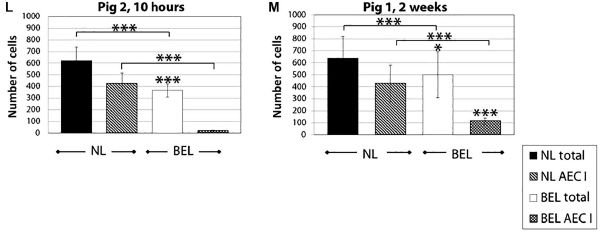

Here is the general plan for the new work... A lung was removed from a dead pig. The lung was decellularized; that gives the scaffold. A lung was then removed from an animal destined to be the recipient. Cells from that lung were used to re-seed the scaffold. After a month of development in the lab, the new, bioengineered lung (BEL) was transplanted back into the animal. The animal also has, of course, a normal lung (NL). Several aspects of lung development were measured at various times; the NL in the same animal serves as a reference for each BEL.

In this work, the cells are from the animal that received the new organ. In a sense, the animal received a transplant from itself. However, there are key steps outside the animal, and immunological compatibility is not to be taken for granted.

Each of the two graphs above is for one animal, sacrificed at the time shown at the top. For one animal, that was at 10 hours (after transplantation of the new lung); obviously, little has happened. It is sort of a zero point. The other graph is for an animal sacrificed at two weeks.

Each graph has data for the NL (left) and the BEL (right). There are two kinds of cell counts. One is for total cells; the other is for alveolar epithelial cells type I (AEC1), a type of lung cell. From the 10-hour graph... You can see that the BEL is smaller, and has only a small number of the AEC1 cells. Compare with the two-week pig... The BEL is stable, maybe even a little larger. Importantly, it has considerably more of the specialized AEC1 cells.

This is part of Figure 5 from the article.

That's an example of evidence that the lungs were developing properly upon transplantation to the recipient animal. It's not exactly overwhelming evidence, but it is good as far as it goes.

Of course, the article contains much more evidence. There are two more pigs, one each analyzed at one and two months. There are many pictures showing tissue structure. There is evidence of vascularization (formation of blood vessels), an important issue with lab-grown materials. And there is evidence that a proper lung microbiome is being established. No big problems were seen (though there were some small problems).

You may have noticed that the figure shown above contains parts L and M of Figure 5. The full article contains eight figures, five of which have parts up through J or more. A lot of evidence, indeed.

Did the transplanted lung provide respiratory benefit to the recipient? No. In fact, it wasn't even "hooked up"; the pulmonary artery, between heart and lung, was not connected for the new lung.

Bottom line... The approach of using a decellularized organ as a scaffold for making a new lung in the lab has passed some early tests in a pig model.

News stories:

* Researchers successfully transplant bioengineered lung. (Medical Xpress, August 1, 2018.)

* Expert reaction to study attempting to create better bioengineered lungs in pigs. (Science Media Centre, August 1, 2018.) One expert's comments.

The article: Production and transplantation of bioengineered lung into a large-animal model. (J E Nichols et al, Science Translational Medicine 10:eaao3926, August 1, 2018.)

Musings has discussed the possible use of pig organs transplanted to humans. That's not what this is about. The role of the pig here is as a model. If we can get this to work with the pig, then we can try to get it to work to make human organs: a human-derived scaffold, seeded with human cells.

* * * * *

Also see:

* Can human lungs that are too damaged to be transplanted be fixed? (August 22, 2020).

* Human heart tissue grown in spinach (September 5, 2017). Another example of using a cell-free scaffold for growing organs.

* Lamb-in-a-bag (July 14, 2017). Lung development.

My page Biotechnology in the News (BITN) for Cloning and stem cells includes an extensive list of related Musings posts, including those on the broader topic of replacement body parts.

August 15, 2018

1. Underwater landslides are a poorly understood but important phenomenon. One of the mysteries is why they sometimes occur at places where the slope is quite small. A new article suggests that a particular combination of materials may be responsible: a layer of diatoms (algae) topped by clay.

* News story: Diatom ooze: the weak link in submarine landslides? (D Petley, Landslide Blog (American Geophysical Union), February 13, 2018.) Links to the article.

* More about diatoms: Communication in diatoms (February 6, 2022).

* More about what causes landslides... How hot is a landslide? (April 16, 2019).

2. How many genes do humans have? The latest count is 21,306 (for protein-coding genes). That's about 1000 more than commonly accepted values. The following news story discusses the history and problems of gene counts; it links to the new article (currently a preprint, freely available at bioRxiv): New human gene tally reignites debate -- Some fifteen years after the human genome was sequenced, researchers still can't agree on how many genes it contains. (C Willyard, Nature News, June 19, 2018. In print, with a different title: Nature 558:354, June 21, 2018.) This is for perspective on the issue of gene count. There is no attempt to judge or analyze any particular estimate.

August 14, 2018

Fall 2017 brought devastating hurricanes to the Caribbean. The lizards that survived the hurricanes were stronger than was typical of the original population. They were better able to hold on to tree branches during the winds. That's the message of a new article.

Here is an example of what the scientists found...

The graphs show the size of the toepads on the front limbs of the lizards (y-axis) vs the size of the animal (x-axis). The two graphs are for the animals at two different locations in the Caribbean island nation of Turks and Caicos. The animals are Anolis scriptus, a common small lizard.

The timing of the measurements is defined by two major hurricanes (Irma and Maria), close together in the fall of 2017. The open circles and dashed lines are for the data on animals captured before the hurricanes. The solid circles and solid lines are for the data on animals captured after the hurricanes. In both graphs, you can see that the animals captured after the hurricanes have larger toepads. The gray regions show the 95% confidence intervals for the lines fitted to the data points.

Approximate timeline, in weeks: "before" measurements (0); hurricanes (1, 3); "after" measurements (6).

This is the lower left frame of Figure 2 from the article. The full figure includes three other frames, with other such measurements. Each one shows a clear after-vs-before effect.

The observations seem simple enough. Animals that survived the hurricanes have larger toepads.

What does it mean? The observations are interesting, but we should be cautious about interpreting them.

It's easy enough to imagine that larger toepads allowed some lizards to hang on to trees better, thus increasing their chances of survival. But is there any evidence that supports that interpretation? Are there alternative explanations?

First, we should understand that this was not a carefully controlled experiment. The authors had been in the Islands making measurements on the lizards as part of another project. They left as the storms approached. Later, they realized that the situation presented an opportunity: go back and measure the lizard population again, and see if it has changed. That's the basis of the current work. But there can be questions about how the initial and final populations are related. The authors note some of these in their discussion.

For now, for the sake of discussion, let's assume that the two sets of measurements are samples from the proper related populations. It would seem that the hurricanes resulted in a selection for lizards that are "stronger" -- specifically, better able to hold on to the branches.

Does this mean that next year's lizards will be like the "after" sample above? No; there is nothing in the observations that says the differences noted above are due to genetics. It would certainly be interesting to continue the observations, and see what happens over the coming generations.

There is another question... In discussing the work, we suggested that larger toepads mean the lizards can hang on better during a wind storm, and thus survive the winds better. It is known that toepad area relates to clinging ability. However, it was not known whether clinging would be the natural response of the lizards to high winds. In fact, some had thought that the main survival strategy would be to scurry to a protected area (which is what the scientists did).

That question led the authors to do some testing, with some artificial wind (from a leaf blower). That work showed how the lizards respond to wind. They found that the lizards tried to hang on -- and that bigger toepads helped them do so. It also led to some understanding of one of the results that at first didn't seem to make sense. The experimental work is just hinted at in the main article.

It's an interesting article. Serendipity. A natural disaster just happened to occur while some work was in progress. The scientists were able to take advantage of the situation, and make additional measurements, showing how the disaster affected the lizards. That led to some experimental work. Overall, we know more about how lizards respond to hurricane-force winds, but many questions remain.

News stories:

* Leggy lizards don't survive the storm. (Phys.org, July 25, 2018.)

* After Last Year's Hurricanes, Caribbean Lizards Are Better at Holding on for Dear Life. (E Yong, Atlantic, July 25, 2018.) Now archived.

* Behind the paper: Hurricane-induced selection on the morphology of an island lizard. (C Donihue, Nature Research Ecology & Evolution Community (blog), July 25, 2018.) From the lead author of the article.

Video: Natural selection in a hurricane - The lizards that won't let go. (3 minutes; narrated.) A promotional video, from the journal (linked here via YouTube). Perhaps useful, and even a little amusing.

The article: Hurricane-induced selection on the morphology of an island lizard. (C M Donihue et al, Nature 560:88, August 2, 2018.)

A post about hurricane effects: Recovery from natural disaster: can the poor benefit? (July 22, 2011).

More about winds and animals: Wind-borne mosquitoes repopulate the Sahel semi-desert after the dry season (October 14, 2019).

More about lizard toes: A story of dirty toes: Why invading geckos are confined to a single building on Giraglia Island (November 12, 2016).

More about lizards: How to clamp down to keep the partner from straying (December 15, 2020).

August 12, 2018

Recent years have seen huge decreases in the cost of DNA sequencing, driven largely by the introduction of novel technologies. However, the technology for synthesizing DNA has not improved much. Some groups have made heroic efforts with the old technology and made some small chromosomes. However, many scientists feel that the field of DNA synthesis is ripe for a major transformation -- fundamentally new technology.

What about using DNA polymerase? Ordinary DNA polymerases only copy DNA. What we want here is new ("de novo") DNA: DNA with the sequence determined by the person placing the order (not by a pre-existing DNA template).

A recent article offers a novel -- and interesting -- approach to making DNA. It establishes the principle. Whether the method can fulfill the promise of being a revolutionary development is for the future.

Here is the idea. (It is summarized in the figure below, but I think it may be good to go through the key steps before introducing the figure.)

The key player is an enzyme, called terminal deoxynucleotidyl transferase, or TdT (or terminal dNTP transferase). Its natural enzymatic activity is to add one nucleotide to the end of a DNA chain -- a single strand of DNA. How does it know which nucleotide to add? It doesn't. This enzyme adds a nucleotide at random to the end of the chain. TdT is a DNA polymerase, but -- unusually -- it is template-independent. That is problem #1: with TdT alone, we cannot control which nucleotide is added.

How do the authors solve this problem (#1)? They make four batches of the TdT enzyme, each bound to one of the four nucleotide triphosphate precursors. For example, they make TdT-dATP, where the TdT enzyme has the nucleotide triphosphate for A (dATP) bound to it. This particular batch of the enzyme can add only A. Problem #1 solved.

That leads to problem #2... The enzyme as described above should add A, then another A, and so on. We don't want that; we want it to add just one A -- and then we decide which nucleotide to add next by adding another batch of the enzyme.

How do the authors solve problem #2? They stop the enzyme after one addition. How? We said that the enzyme is bound to the nucleotide triphosphate, such as TdT-dATP. The trick is that it is bound "permanently" (covalently). After the enzyme adds its nucleotide, it is stuck. There is now a separate step to release the enzyme. Solving problem #2 was the key breakthrough of the new work.

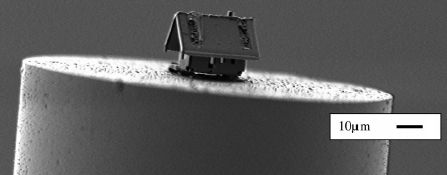

The following figure is a cartoon of the strategy. Add one nucleotide at a time, using a form of the enzyme covalently bound to the correct nucleotide triphosphate precursor. Then release the used enzyme, and go on to the next nucleotide.


Start at the top, with the primer (length 5, in this case). As with all DNA polymerases, TdT adds nucleotides only onto a pre-existing chain.

At the right side is a green "horseshoe"; that is the enzyme TdT. Inside it is one nucleotide triphosphate (dNTP), which is "tethered" to the enzyme. The base itself is shown in red. At the bottom, the enzyme with its nucleotide binds to the DNA, and the nucleotide is transferred to the end of the DNA chain. The DNA chain is now length 6, with the last base in red. At the left, the enzyme is released. Repeat the cycle. This is Figure 1a from the article.

That's the idea. Does it work? The scientists report some small tests. They add as many as ten new nucleotides onto a DNA chain. The accuracy is good, but not good enough yet for actual use.
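Why does per-step accuracy matter so much? A minimal sketch, assuming each addition step succeeds independently with the same probability p (a simplification; real errors include both missed and extra additions): the fraction of chains that receive every intended nucleotide is p^n after n steps. The p values below are hypothetical, not results from the article.

```python
def full_length_fraction(step_efficiency: float, n_steps: int) -> float:
    """Fraction of chains that received every intended nucleotide,
    assuming each step succeeds independently with the same probability."""
    return step_efficiency ** n_steps

# Hypothetical per-step efficiencies, for illustration only.
for p in (0.95, 0.99, 0.999):
    for n in (10, 200):
        print(f"p={p}, n={n}: {full_length_fraction(p, n):.3f}")
```

Errors compound: a per-step efficiency that looks fine for a 10-step test leaves few correct chains after a couple of hundred steps.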

The authors argue that the method has the potential to be faster and cheaper than the current methods for making DNA.

An interesting feature of the proposed method is that the enzyme is consumed. It indeed carries out what would normally seem to be a catalyzed reaction, adding one nucleotide to the chain. But because of the special bound form of the enzyme, the enzyme is then destroyed. The enzyme has now become a consumable reagent. Is that practical or economical? The authors argue that enzymes are now so inexpensive that it is.

If you are concerned about the tedium of the cycle, adding one reagent or another at each step... This is how DNA is made now. It's just that different reagents are used. The user enters the desired sequence into the computer that controls the synthesizing machine. That same strategy would be used here, just with different reagents.
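The cartoon cycle (Figure 1a) and the sequence-driven controller loop can be sketched as a toy state machine. Everything here is hypothetical: the class and function names are invented, each step is assumed to succeed perfectly, and none of the real chemistry (how the tether is attached or cut) is modeled. The point is only the control logic: one conjugate per base, a capped end that blocks a second addition, and a release step before the next cycle.

```python
from __future__ import annotations

from dataclasses import dataclass

VALID_BASES = set("ACGT")

@dataclass
class Conjugate:
    """Toy stand-in for one TdT molecule with a single dNTP tethered to it."""
    base: str
    spent: bool = False

@dataclass
class Chain:
    seq: str
    capped: bool = False  # True while a spent conjugate is still attached

def extend(chain: Chain, conj: Conjugate) -> None:
    """Transfer the tethered nucleotide; the enzyme stays stuck to the 3' end."""
    if chain.capped or conj.spent:
        raise RuntimeError("3' end is capped or conjugate already spent")
    chain.seq += conj.base
    conj.spent = True
    chain.capped = True  # no second addition until the release step

def release(chain: Chain) -> None:
    """Separate release step: cut the tether and free the 3' end."""
    chain.capped = False

def synthesize(primer: str, target: str) -> tuple[str, int]:
    """Add target one base per cycle; return the product and conjugates consumed."""
    chain = Chain(primer)
    consumed = 0
    for base in target:
        if base not in VALID_BASES:
            raise ValueError(f"unknown base {base!r}")
        extend(chain, Conjugate(base))  # choose the batch, e.g. TdT-dATP for "A"
        release(chain)
        consumed += 1  # each conjugate is used once, then discarded
    return chain.seq, consumed

# Hypothetical length-5 primer, as in the cartoon, plus a 5-base addition.
product, used = synthesize("GATTC", "ACGTA")
print(product, used)
```

The count of consumed conjugates equals the number of bases added, which is the sense in which the enzyme becomes a reagent rather than a catalyst.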

It's an interesting approach. Further work will tell us whether it is useful.

News stories:

* New DNA synthesis technique promises rapid, high-fidelity DNA printing. (Phys.org, June 18, 2018.)

* Faster, Cheaper, Better: A New Way to Synthesize DNA. (J Chao, Lawrence Berkeley National Laboratory, June 18, 2018.) From one of the institutions involved.

The article: De novo DNA synthesis using polymerase-nucleotide conjugates. (S Palluk et al, Nature Biotechnology 36:645, July 2018.)

Why is there an enzyme like TdT -- an enzyme that makes DNA without sequence specificity -- in nature? It's part of the vertebrate immune system, where it helps to generate diversity.

* * * * *

A post involving extensive de novo DNA synthesis: What is the minimal set of genes needed to make a bacterial cell? (July 9, 2016). The largest DNA chains made by de novo synthesis are about 200 nucleotides. With effort, they can be assembled into larger chains. The largest products made from that approach are about 1.5 million base pairs, in work making synthetic yeast chromosomes. The DNA discussed in this 2016 post is a little less than that. These projects are pushing the limits of current DNA synthesis technology. However, the smallest of the human chromosomes is more than 20-fold larger.

A post about the cost of DNA sequencing: DNA sequencing: the future? (November 7, 2017).

August 10, 2018

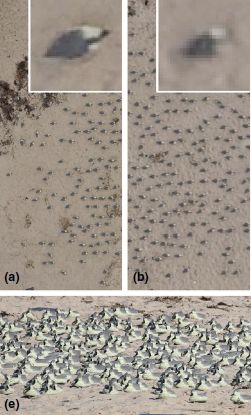

As the numbering in the title suggests, this is a follow-up to two previous posts, which introduced Wolbachia [links at the end]. They explained the idea behind using Wolbachia, and gave some data that was encouraging. We now have the largest trial yet of Wolbachia-infected mosquitoes in the field; it, too, is encouraging.

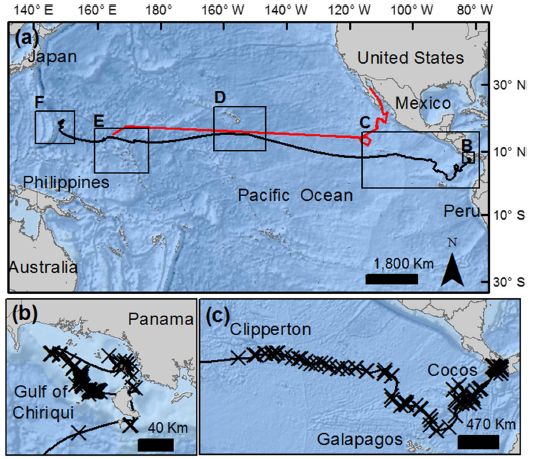

Wolbachia bacteria infect insects, and are transmitted to the offspring. Interestingly, infection with Wolbachia often limits the growth of pathogens in the insects. Wolbachia infection of mosquitoes is not common in nature, but can be established. The earlier work showed that the infection is maintained -- and that the infected mosquitoes have a reduced capacity to maintain the pathogens tested. In particular, one post showed lab data suggesting that Wolbachia reduced Zika virus.

In the current test, Wolbachia-infected mosquitoes were released in a medium-sized city, where dengue -- a virus related to Zika -- has been prevalent. There was a major reduction in the incidence of locally-acquired dengue over a four-year study period.

At this writing we have only a preliminary version of the article, which has been submitted for peer review. More about this later, but we just want to note that the article is not yet in final form -- and might change.

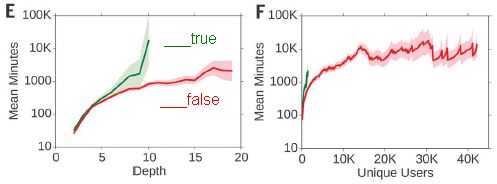

The following figure shows the main results of interest.


Look at the top frame. The black bars show the number of locally-acquired cases of dengue (y-axis scale at the left), over time (x-axis).

The green bars at the right show the implementation of the Wolbachia-infected mosquitoes, which are supposed to reduce virus transmission. The implementation was done in four stages, starting in late 2014; the height of the green bar shows the area covered at various times (y-axis scale at the right). You can see that there were bursts of dengue cases up through early 2014. Since the program was started, there have been only four cases. The first three of those are marked as not being relevant. The fourth case (early 2017) is discussed in the article; its origin is not clear. In any case, even if you counted all of those cases since 2014, there is a substantial reduction.

The bottom frame is a "control". It is laid out in the same fashion, but this frame shows "imported" cases of dengue during the same time: cases in the area that are likely due to the person acquiring it elsewhere. You can see that imported dengue continues more or less as usual during the test time. The reduction in locally acquired dengue is not due to a general downturn in dengue incidence. (Caution... The y-axis scales are different in the two frames. For the green areas, it is the same information, but at a different scale on the graph.) This is Figure 4 from the article.

That's it. The largest field test yet of Wolbachia-infected mosquitoes, and there is a major reduction in locally acquired dengue, over the four-year study.

It's an encouraging result.

It's important to note that each specific area was treated only during one phase. That the first area treated remained dengue-free over four years reflects that the Wolbachia are being maintained in the mosquito population.

Much of the article is about how the scientists worked in the community to build acceptance of the program.

News story: Dengue fever outbreak halted by release of special mosquitoes. (S Boseley, Guardian, August 1, 2018.) Good overview, though some details are a bit hyped.

The article, which is freely available: Scaled deployment of Wolbachia to protect the community from Aedes transmitted arboviruses [version 1; referees: awaiting peer review]. (S L O'Neill et al, Gates Open Research 2:36, version 1: August 2, 2018.)

Some comments on the journal and how it handles articles...

This is a new journal, from the Gates Foundation, which was a major funding source for the work. Gates requires that the work they fund be published open access; their new journal is a vehicle.

The journal makes the articles public at all stages of the process of consideration. In this case, the current version is what was submitted; it has not yet been peer-reviewed. That information is included in the title of the article -- on the journal web site, and in my listing here. You may, of course, find a later version at the journal site. (I think that they maintain all versions, so that a reader can check how things changed, if that seems to be an issue.)

On August 10, one referee report was posted at the web site. (It is public; you can read it.) The article title was modified to reflect the development, but the article itself was not changed. I note this largely to give an idea how the web site works for a journal such as this.

There is some interest in making preliminary versions of articles public, clearly labeled. This may be of particular importance in the public health field, where time may be an issue in responding. Since the status of the article is clear, those who might want to make use of any information in it are aware that they should double-check any key points of interest. (Preprint servers, such as arXiv and bioRxiv, are used in various ways; among other things, they often host early versions of articles.)

* * * * *

Background posts on Wolbachia in mosquitoes:

* Can Wolbachia reduce transmission of mosquito-borne diseases? 1. Introduction and Zika virus (June 14, 2016).

* Can Wolbachia reduce transmission of mosquito-borne diseases? 2. Malaria (June 17, 2016).

Previous post on dengue: Antibiotics and viruses: An example of harm (May 6, 2018).

Another approach: What if the mosquitoes carried immunity to the dengue virus? (March 8, 2020).

More on dengue is on my page Biotechnology in the News (BITN) -- Other topics under Dengue virus (and miscellaneous flaviviruses). It includes a list of related Musings posts.

August 8, 2018

Two new genomes. Genome articles are both fascinating and frustrating. The work in them is almost entirely computer analysis; reading about that analysis is not much fun. The resulting genome is potentially a gold mine. Genome articles typically offer comments about why the genome seems interesting, though any such comments should be taken as preliminary. The news stories give the idea.

1. News story: A genetic bed of roses: scientists sequence the complete genome of the rose. (M Andrei, ZME Science, April 30, 2018.) Links to the article, which is freely available.

Also see: A step toward roses without thorns (August 28, 2024).

2. News story: Cracking the genetic code of koalas. (Science Daily, July 2, 2018.) Links to the article, which is freely available.

August 7, 2018

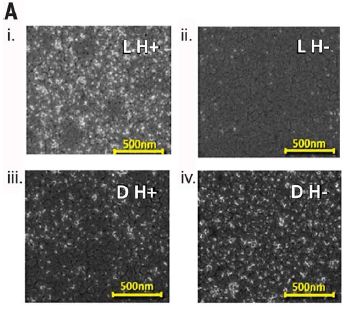

The following figure shows samples of a peptide (short chain of amino acids) adsorbed onto a magnetic surface, as reported in a recent article.


There are two variables here. One is the handedness of the stereocenters in the peptide. They are either all L or all D. The other variable is the direction of the applied magnetic field. H+ and H- refer to opposite orientations of the magnetic field.

The top two frames (i, ii) are for the L isomer. You can see that it adsorbs better when the field is H+ (left, i). In contrast, the D isomer (lower: iii, iv) adsorbs better when the field is H- (iv). This is Figure 1A from the article.

That is, the two enantiomers (mirror-image isomers) adsorb differently on the surface, depending on the direction of the magnetic field. Just in case you get lost somewhere along the line here, which is likely, this is the main point. The figures above show that this is true. There shouldn't be any debate about that point.

If the above point is true, then it should be possible to use the method to separate enantiomers. In fact, the authors go on to show such a separation.

That could be useful. If this really works, it could be an inexpensive way to separate enantiomers. It's simple. And it may be fairly general, not requiring customization to individual cases.

How does this work? It has to do with electron spin -- which is probably a clue that we won't have a clear explanation. The two enantiomers have electron clouds that are mirror images of each other. The surface, too, has an electron cloud. The applied magnetic field polarizes the electron cloud in the magnetic surface. For one isomer, the effect is that the electrons of the approaching molecule are spin-aligned anti-parallel to those in the surface; this makes for a more favorable interaction. For the other isomer, the electron-spin alignment is parallel (to that of the surface), which is less favorable. That's the basis of the separation.

The separation is kinetic. That is, it affects the rate at which the two enantiomers approach the surface. It does not affect the final binding strength. Using the method requires finding the optimum time that maximizes the separation.
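The need to find an optimum time can be made concrete with a simple model, which is an assumption and not the authors' analysis. Suppose each enantiomer adsorbs with first-order kinetics toward the same final coverage, with rate constants k_fast > k_slow. The difference in coverage, exp(-k_slow t) - exp(-k_fast t), is zero at the start and again at long times, and is largest at t* = ln(k_fast/k_slow) / (k_fast - k_slow). The rate constants below are hypothetical.

```python
import math

def coverage(k: float, t: float) -> float:
    """Fractional coverage after time t for first-order adsorption with rate k
    (assumed model: both enantiomers approach the same final coverage of 1)."""
    return 1.0 - math.exp(-k * t)

def separation(k_fast: float, k_slow: float, t: float) -> float:
    """Excess coverage of the faster-adsorbing enantiomer at time t."""
    return coverage(k_fast, t) - coverage(k_slow, t)

def optimal_time(k_fast: float, k_slow: float) -> float:
    # Setting d/dt [exp(-k_slow t) - exp(-k_fast t)] = 0 gives
    # k_fast exp(-k_fast t) = k_slow exp(-k_slow t), so:
    return math.log(k_fast / k_slow) / (k_fast - k_slow)

k_fast, k_slow = 2.0, 1.0  # hypothetical rate constants, arbitrary units of 1/time
t_star = optimal_time(k_fast, k_slow)

# Brute-force check of the closed form on a fine time grid.
grid_best = max((i * 0.001 for i in range(1, 10000)),
                key=lambda t: separation(k_fast, k_slow, t))
print(t_star, grid_best, separation(k_fast, k_slow, t_star))
```

Stopping too early leaves little adsorbed material; waiting too long lets the slower enantiomer catch up, so the useful window depends on the ratio of the two rates.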

The figure (above) shows that the separation worked better for one isomer than the other. The authors note this, and do not have a simple explanation.

In the authors' words (from the abstract)... "Here we show experimentally that the interaction of chiral molecules with a perpendicularly magnetized substrate is enantiospecific. Thus, one enantiomer adsorbs preferentially when the magnetic dipole is pointing up, whereas the other adsorbs faster for the opposite alignment of the magnetization. The interaction is not controlled by the magnetic field per se, but rather by the electron spin orientations, and opens prospects for a distinct approach to enantiomeric separations."

Understand it or not, the method has a name: chiral-induced spin selectivity. That leads to a nice acronym, which you can figure out -- and check with the title of this post.

It's not a completely new idea, but the work here seems more promising than any previous work. Since it has potential to be useful, as well as theoretically intriguing, it is likely that this will be pursued.

News stories:

* Magnetic fields could fish out enantiomers -- Spin-state effect could lead to new way to run chiral separations on racemic mixtures. (S Lemonick, C&EN, May 11, 2018.) The figure at the top of this story may help. Note that the bottom electrons shown for the two isomers have opposite spins. For the one on the left, it is anti-parallel to the spins on the surface; that promotes binding. (The figure is similar to Figure 4B of the article.)

* Chiral Separations With Magnets. No, For Real. (D Lowe, In the Pipeline (blog at Science Translational Medicine), May 17, 2018.)

The article: Separation of enantiomers by their enantiospecific interaction with achiral magnetic substrates. (K Banerjee-Ghosh et al, Science 360:1331, June 22, 2018.)

Posts that mention issues of stereoisomerism include:

* Carbon-silicon bonds: the first from biology (January 27, 2017).

* Doing X-ray "crystallography" without crystals (September 18, 2016).

* The answer is cereblon (March 16, 2010). A classic example of the importance of stereoisomerism in drug development, though that aspect is not discussed in that post. One stereoisomer is a useful drug; another is dangerous.

A recent post on magnetic fields: Brain imaging, with minimal restraint (June 2, 2018).

Also see... A new record: spinning speed (October 12, 2018).

This post is noted on my page Internet Resources for Organic and Biochemistry under Stereochemistry (Chirality).

August 5, 2018

Let's start with some simple lab experiments. They explore how an animal responds to various colors of light. Then we will try to interpret the results in the context of the natural environment of the animal.

The animal here is the marine ragworm, Platynereis dumerilii. Specifically, the scientists study the planktonic larvae. The animal has six small eyes, plus additional photoreceptors in the brain.

The following graphs show the vertical swimming speed of the animals as a function of the wavelength(s) of light. The speed is shown (y-axis) in millimeters per second. However, for the most part it is sufficient just to note whether the response is positive or negative: upward or downward swimming in response to the light.


The graph at the left shows the response of the animals to various wavelengths of light.

There are two types of symbols. They are for larvae of different ages. For example, 41 hpf means 41 hours post fertilization. Qualitatively, the results are similar for the larvae of different ages. There are differences in detail, which need not concern us here.


The first bars, at the left, are for darkness. There is no vertical movement. The next few bars show a downward (negative) swimming response. These are for short wavelengths, in the ultraviolet (UV). The next bars show an upward (positive) swimming response. These are for wavelengths corresponding to blue (and a little beyond). Longer wavelengths lead to little swimming response. This is Figure 3E from the article.

Thus we see that the vertical swimming response of the animals depends on the color of the light. UV leads to downward movement; blue to upward movement.

What if we provided two types of light, ones that promote different responses? That's the basis of the next experiment...


Look at the open symbols. Here, light of 380 and 480 nm was used. The 380 nm (UV) light promotes downward swimming, whereas the 480 nm (blue) light promotes upward swimming.

The x-axis shows the relative amounts of the two kinds of light. It is confusingly labeled as "ratio (%)". It shows the percentage of the light that is 380 nm (UV). That percentage is based on the number of photons.


If the light is mostly UV (to the left), the downward response dominates. If the light is mostly blue (to the right), the upward response dominates. (Open symbols only, for the moment!) Somewhere along the way, the response is zero, and the larvae do not swim vertically at all. That is, the two signals -- one to swim upward and one to swim downward -- cancel. That occurs at about 50% on this scale.

There is a second data set, which is something of a control. It's shown by the shaded bars. In this case, the light is a mixture of 360 and 660 nm light. The latter provides no response (see the first graph). The result is that there is a downward response over most of the range of light mixtures. At the very right, that response is reduced, probably because the UV light is less intense there. This is Figure 3G from the article.

How does that get us to a depth gauge? It turns out that different wavelengths of light penetrate into water differently. Thus the ratio of UV to blue light varies with depth. The authors don't actually demonstrate the depth gauge, but it follows from the responses shown above. The animals will swim upward or downward in the ocean until they reach the depth where the two signals cancel. That's the prediction.
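A toy version of the predicted depth gauge, under loudly stated assumptions: each wavelength fades exponentially with depth (Beer-Lambert-style attenuation), UV is assumed to fade faster than blue, and the larvae are assumed to respond only to the UV fraction of the light, swimming down when it is above the balance point and up when it is below. The surface fluxes and attenuation coefficients are invented for illustration; only the roughly 50% balance point comes from the lab data described above. The model ignores absolute light intensity, which surely also matters.

```python
import math

# All parameter values are hypothetical illustrations, not measurements.
U0, B0 = 2.0, 1.0            # surface photon flux, UV (~380 nm) and blue (~480 nm), arbitrary units
K_UV, K_BLUE = 0.20, 0.05    # assumed attenuation coefficients (1/m); UV assumed to fade faster
BALANCE = 0.5                # UV fraction at which up and down responses cancel (about 50% in the lab)

def uv_fraction(depth_m: float) -> float:
    """Fraction of photons that are UV at a given depth, in the exponential model."""
    uv = U0 * math.exp(-K_UV * depth_m)
    blue = B0 * math.exp(-K_BLUE * depth_m)
    return uv / (uv + blue)

def balance_depth() -> float:
    """Depth where uv_fraction equals BALANCE (closed form for this model)."""
    target_ratio = BALANCE / (1 - BALANCE)  # required UV:blue photon ratio
    return (math.log(U0 / B0) - math.log(target_ratio)) / (K_UV - K_BLUE)

def swim(depth_m: float, steps: int = 2000, step_m: float = 0.01) -> float:
    """Toy larva: swim down when UV dominates, up when blue dominates."""
    for _ in range(steps):
        depth_m += step_m if uv_fraction(depth_m) > BALANCE else -step_m
        depth_m = max(depth_m, 0.0)  # cannot rise above the surface
    return depth_m

print(f"balance depth: {balance_depth():.2f} m")
print(f"start at 0 m  -> {swim(0.0):.2f} m")
print(f"start at 20 m -> {swim(20.0):.2f} m")
```

In this sketch, larvae starting above or below the balance depth both end up hovering around it, which is the behavior the authors predict; whether that holds in the ocean is, as noted, not demonstrated.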

The article contains much more about the biology of these worms. It discusses the two types of photoreceptors, one in the eyes and one in the brain, and how the brain integrates the two signals. But that is beyond our scope here. Even the behavioral responses to light are more complex than discussed here. What we have done in this post is to focus on one aspect of how the worms respond to light.

News story: 'The eyes have it' - Photoreceptors in marine plankton form a depth gauge to aid survival. (Phys.org, June 27, 2018.)

The article, which is freely available: Ciliary and rhabdomeric photoreceptor-cell circuits form a spectral depth gauge in marine zooplankton. (C Verasztó et al, eLife 7:e36440, May 29, 2018.) There is a short "eLife digest", intended as an overview. In the pdf file, it is on page 2, embedded within the Introduction.

Another study on these larvae: Melatonin and circadian rhythms -- in ocean plankton (November 24, 2014). Includes the UV-avoidance response. The article of this post is referred to in the current article.

Also see a section of my page Internet resources: Biology - Miscellaneous on Medicine: color vision and color blindness.

August 3, 2018

Imagine the following experiment... A wheat farmer and a rice farmer meet at Starbucks. As they proceed to their table, there is a chair in the way. Which of them is more likely to move the chair out of the way?

A recent article addresses the matter. The authors didn't do exactly the experiment I described above, but what they did should lead you to a clear prediction.

You might also make a good prediction if you recall an earlier Musings post [link at the end] on the nature of wheat- and rice-farming.

Here are results from one experiment in the new article...


The experiment here was not a competition, as presented in my opening. However, it is based on a designed experiment. The authors arranged that visitors at various Starbucks locations would face a situation in which moving the chair out of the way would seem to be advantageous (though not strictly required).

The experiment was done in five cities. In two of the cities, about 15% of the people moved the chair out of the way. In the other three cities, fewer than half that many moved the chair. As you can see from the labeling, the cities with higher numbers of chair movers (yellow bars) are in the wheat-growing region of China. The cities with lower numbers (green bars) are in the rice-growing region. The people being observed and recorded here were ordinary customers, who had no knowledge of the "experiment". This is Figure 5 from the article.

Taken alone, the story here might seem rather odd. However, it is part of a larger story, one that Musings has noted before [link at the end]. Wheat- and rice-farming are very different kinds of activities. Different regions of China have been doing one or the other for millennia. Researchers have found that the cultures in those areas are also different. That is, there is a correlation between major grain crop and some cultural features.

In this case, the authors predicted that people in wheat-farming regions, who tend to be more individualistic (rather than collectivistic or cooperative), would be more likely to move the chair. The argument is that people in individualistic cultures are more likely to try to control the environment -- and that means moving the chair to suit their needs. In contrast, those with rice-farming traditions, who tend to adapt themselves to what they find, would more often squeeze through, rather than move the chair.

The individuals who were observed are not necessarily themselves farmers. In fact, the study sites, in Starbucks cafes in big cities, would seem biased toward middle class urban residents. The analysis is about regional characteristics.

As a small validation test... The authors did the same test in the United States and Japan. The percentage of chair movers was about 20% in the US, the more individualistic culture. It was about 8% in Japan, the more collectivist culture.

You don't need to buy all that. But let the authors make their case. Ultimately, it will take much evidence to sort out which of the interpretations are most broadly useful. Surely, it is plausible that long-standing cultural practices, developed in the context of farming, affect other aspects of life -- including modern life at Starbucks.

As usual, this post presents one experiment from a larger article. The purpose is as much to raise the questions and show some examples of what is being done as to reach conclusions. It's science in progress. Be cautious about reaching conclusions, especially from the post alone.

News stories:

* In China, traits related to traditional rice or wheat farming affect modern behavior. (EurekAlert!, April 25, 2018.)

* Behavioral differences between Northern v. Southern Chinese linked to wheat v. rice farming, study shows. (University of Chicago, April 25, 2018.) From the lead institution.

The article, which is freely available: Moving chairs in Starbucks: Observational studies find rice-wheat cultural differences in daily life in China. (T Talhelm et al, Science Advances 4:eaap8469, April 25, 2018.)

Background post: Can growing rice help keep you from becoming WEIRD? (July 22, 2014). The article discussed here is from the same research team; that article is reference 1 of the current article. I encourage you to read this earlier post for background and perspective for the current post.

More rice... A perennial rice (March 4, 2023).

More wheat... Disease transmission by sneezing -- in wheat (July 29, 2019).

Previous posts mentioning Starbucks: none.

August 1, 2018

1. Plastics are useful, but we now also understand that they become hazardous waste after their useful life. What if plastics were designed, from the start, with recyclability in mind? Not just one cycle, but with the ability to be used and recycled over and over, without loss of quality. A recent article explores the approach. The results so far seem modest, but the idea is worth noting.

* News story: New Plastic Can Be Recycled Infinitely. (P Patel, Anthropocene, May 3, 2018.)

* The article: A synthetic polymer system with repeatable chemical recyclability. (Jian-Bo Zhu et al, Science 360:398, April 27, 2018.)

* A background post: History of plastic -- by the numbers (October 23, 2017).

2. How many moons hath Jupiter? 79 is the current count. News story: A dozen new moons of Jupiter discovered, including one "oddball". (Carnegie Institution, July 16, 2018.) Includes a nice video of the Jupiter moon system; be sure you see how Valetudo fits into the picture. (Movie: 1 minute; music, but no useful sound.) The new work has apparently not yet been published. Interestingly, the new discoveries came out accidentally during a search for Planet 9.

* Background post: A ninth planet for the Solar System? (February 2, 2016).

* More: Briefly noted... Now, 92 for Jupiter (February 22, 2023).

July 31, 2018

On August 24, 2014 (nearly four years ago), we had a significant local earthquake: magnitude 6, centered near the "wine country" town of Napa, a few miles north of San Francisco Bay.

A new article explores some of the background to that quake, making use of the extensive instrumentation that monitors our quake-prone area.

The following figure reveals a "smoking gun"...


The top frame shows strain that was recorded by GPS monitors in the area. Focus on the blue curve, which is for a region of 100 km² around the epicenter of the quake. (The red curve is the same idea, but over a larger area.)

It's a strikingly periodic curve. It peaks in about August of each year over the entire time period studied. The bottom frame shows that same data expressed another way. Here it is expressed as the pressure on the fault. The black line shows the continual build up of pressure due to the usual plate movements. The additional pressure due to the varying seasonal strain (shown in the top frame) is small, but still clear. This is Figure 4 from the article.

The Napa quake occurred at the very end of the time shown in those figures. That's the time when the pressure was highest, with the seasonal strain adding onto the accumulated fault strain.

Does that mean that the seasonal strain caused this quake? No, that would be beyond what the data can show. Anyway, strain was building up, and if the quake hadn't occurred at that time, it seems likely it would have occurred soon. But it might at least suggest that the specific timing of the quake was affected by the seasonally varying strain. That in itself would be interesting. Further, the periodic fluctuation of the seasonal strain would seem to be continually flexing the Earth's crust; surely that is not good for it.
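The argument about timing can be illustrated with a toy model, not the authors' analysis. Stress on a fault grows steadily, a small annual cosine (peaking around August) is added on top, and the fault is assumed to fail the first time the total reaches a fixed threshold. The rate, amplitude, and thresholds are hypothetical. In this model the seasonal term cannot shift the failure time by more than amplitude / rate, but within that window it pulls failures toward the seasonal high. How strong that pull is depends on the ratio of seasonal amplitude to yearly tectonic build-up, which is an assumption here.

```python
import math

TECTONIC_RATE = 0.1  # hypothetical steady stress build-up per year (arbitrary units)
PEAK_PHASE = 0.6     # assumed seasonal peak, as a fraction of the year (~August)

def stress(t: float, amplitude: float) -> float:
    """Steady tectonic loading plus an annual cosine peaking at PEAK_PHASE."""
    return TECTONIC_RATE * t + amplitude * math.cos(2 * math.pi * (t - PEAK_PHASE))

def first_crossing(threshold: float, amplitude: float, dt: float = 0.002) -> float:
    """First time (years) at which stress reaches the threshold."""
    # Stress never exceeds TECTONIC_RATE * t + amplitude, so the threshold
    # cannot be reached before this starting time.
    t = (threshold - amplitude) / TECTONIC_RATE
    while stress(t, amplitude) < threshold:
        t += dt
    return t

def offset_from_peak(t: float) -> float:
    """Signed time (years) from the nearest seasonal peak, in [-0.5, 0.5)."""
    return (t - PEAK_PHASE + 0.5) % 1.0 - 0.5

def fraction_near_peak(amplitude: float, window: float = 0.125) -> float:
    """Share of simulated failures within +/- window years of a seasonal peak."""
    thresholds = [5 + 0.0371 * i for i in range(50)]  # hypothetical failure thresholds
    times = [first_crossing(s, amplitude) for s in thresholds]
    return sum(abs(offset_from_peak(t)) <= window for t in times) / len(times)

print("no seasonal term:  ", fraction_near_peak(0.0))
print("with seasonal term:", fraction_near_peak(1.0))
```

With no seasonal term, failures land at all times of year; with a seasonal term that is large compared with one year of tectonic build-up, nearly all of them land just before the seasonal peak. A much smaller seasonal term would give a much weaker effect.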

What causes the seasonally varying strain? The scientists favor the possibility that it might be due to seasonally fluctuating groundwater levels. In any case, they refer to the effect as "non-tectonic" strain.

The authors examine the database of earthquakes in the region over several years. Is there a seasonal pattern? The analysis does not support such a claim. That is, there is no big trend that quakes in the area occur significantly more often in August (or in the summer). (However, other work has shown such an effect, so this should be considered an open question. A negative result for such an analysis merely shows that the statistical evidence does not support such a claim in general; it does not disprove that it is relevant to some specific cases. The authors note that the Napa area has many active faults; that may make it less likely that a specific effect, acting on one fault, will appear as statistically significant in the overall quake record.)
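For readers curious what a test for a "seasonal pattern" in a list of quake dates might look like, here is a minimal sketch using the Rayleigh test, a standard circular-statistics test for whether events cluster around one time of year. This is a swapped-in technique chosen for illustration; the article's own statistical method may differ, and the function name and example inputs are hypothetical. Consistent with the caveat above, a large p-value means only that no clustering was detected, not that seasonal effects are absent.

```python
import math

def rayleigh_seasonality(days_of_year, days_in_year=365.25):
    """Rayleigh test for whether event dates cluster around one time of year.

    Each date (day of year) is mapped to an angle on the annual circle.
    Returns (r, z, p): r is the mean resultant length (near 0 = spread
    evenly, 1 = all events on the same date), z = n * r**2, and p is the
    simple large-sample approximation p ~ exp(-z).
    """
    n = len(days_of_year)
    if n == 0:
        raise ValueError("need at least one event")
    angles = [2 * math.pi * d / days_in_year for d in days_of_year]
    mean_cos = sum(math.cos(a) for a in angles) / n
    mean_sin = sum(math.sin(a) for a in angles) / n
    r = math.hypot(mean_cos, mean_sin)
    z = n * r * r
    return r, z, math.exp(-z)

# Invented inputs for illustration only (not the Napa earthquake catalog):
spread_out = [k * 365.25 / 100 for k in range(100)]  # evenly spaced through the year
all_august = [228.0] * 50                            # every event on one August day

print(rayleigh_seasonality(spread_out)[0] < 1e-9)     # True: no clustering
print(round(rayleigh_seasonality(all_august)[0], 6))  # 1.0: complete clustering
```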

Once again we have some tantalizing evidence about things that may be affecting the occurrence of earthquakes. And once again, the story is incomplete.

News story: South Napa Earthquake linked to summer groundwater dip. (L Lester, GeoSpace (AGU -- the American Geophysical Union), June 12, 2018.)

The article: Seasonal Nontectonic Loading Inferred From cGPS as a Potential Trigger for the M6.0 South Napa Earthquake. (M L Kraner et al, Journal of Geophysical Research: Solid Earth, 123:5300, June 2018.) Caution... pdf file is 31 MB, with some very hi-res maps.

Among quake posts...

* Another million earthquakes for California (June 30, 2019).

* Earthquakes induced by human activity: oil drilling in Los Angeles (February 12, 2019).

* Fracking and earthquakes: It's injection near the basement that matters (April 22, 2018).

* Detecting earthquakes using the optical fiber cabling that is already installed underground (February 28, 2018).

* Does the moon affect earthquakes? (October 21, 2016).

More about our groundwater: Groundwater depletion in the nearby valley may be why California's mountains are rising (June 20, 2014).

July 29, 2018

Here are some results for a new type of battery presented in a recent article...


The black curve (rising toward the upper right) shows the charging of the battery. The main number of interest is the capacity, as shown on the x-axis. In this case, it's a little over 90. (Units? That's 90 mAh/g -- milliamp-hours per gram. The subscript on the g identifies the battery anode material.)

The other three curves (the ones that decline) show discharge cycles at three temperatures (T).

The orange (top) curve is for the warmest T. You can see that the entire charged capacity is recovered at that T, which is -40 °C. At the lowest T tested, -70 °C (purplish curve), about 2/3 of the battery capacity is recovered. (Actual numbers, from the article: 69/99 = 70%.) The battery was charged at room T (25 °C) for all tests. This is Figure 4a from the article.
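The percentages above are simple ratios of capacities. This small sketch reproduces the 69/99 arithmetic and, as an aside on the units, converts a specific capacity from mAh/g to coulombs per gram using the standard relation 1 mAh = 0.001 A × 3600 s = 3.6 C. The function names are hypothetical; only the 69, 99, and roughly 90 mAh/g figures come from the text.

```python
MAH_TO_COULOMBS = 3.6  # 1 mAh = 0.001 A x 3600 s = 3.6 C

def capacity_retention(recovered_mah_per_g, reference_mah_per_g):
    """Fraction of a reference capacity that is recovered on discharge."""
    return recovered_mah_per_g / reference_mah_per_g

def mah_per_g_to_coulombs_per_g(capacity_mah_per_g):
    """Convert a specific capacity from mAh/g to coulombs per gram."""
    return capacity_mah_per_g * MAH_TO_COULOMBS

print(f"{capacity_retention(69, 99):.0%}")         # 70%
print(round(mah_per_g_to_coulombs_per_g(90), 1))   # 324.0 (about 90 mAh/g)
```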

Those are remarkable results. Most ordinary batteries are pretty much dead by -40 °C.

To develop a low-T battery, the scientists made two major changes. One was to use a liquid electrolyte with a low freezing point (FP). It's ethyl acetate, a readily available chemical with a FP of -84 °C. It's still quite polar, an important requirement for conducting electricity within the device.

The second improvement was the development of organic electrode materials that worked well at the low T.

The battery at this point offers unprecedented low-T performance. It contains inexpensive materials, and is environmentally benign. However, its capacity (charge per battery mass) is low.

The authors do not claim that they have achieved a practical low-T battery, only that they have made good steps toward that goal. Work continues.

News story: New lithium-ion battery operates at -70 C, a record low. (T Puiu, ZME Science, February 27, 2018.)

The article: Organic Batteries Operated at -70°C. (X Dong et al, Joule 2:902, May 16, 2018.)

Among posts on battery development...

* Why can't lithium metal batteries be recharged? (October 1, 2019).

* Manganese(I) -- and better batteries? (March 21, 2018).

* Making lithium-ion batteries more elastic (October 10, 2017).

* What happens when a lithium ion battery overheats? (February 19, 2016).

There is more about energy on my page Internet Resources for Organic and Biochemistry under Energy resources. It includes a list of some related Musings posts.

July 27, 2018

Bees that live in the city do better than those on the farm.

That's the finding from a new article. Here is an example of the results...


Bee colonies were maintained in three environments, as labeled on the figure. The number of worker bees (y-axis) was counted over time (x-axis).

The animals here are bumblebees, Bombus terrestris. The clear result is that the "agricultural" bee colonies (solid line; bottom curve) did considerably worse than the ones in populated areas. Several other parameters that reflect bee health are reported; the general observation is the same for all measures. This is Figure 2b from the article.

That's interesting.

How did the scientists do this? The basic plan is that they established colonies at the various locations starting with individual queens, all from one central source. (That was apparently an urban source. Does that matter?)

Why is this happening? The authors don't know, but they do address the question.

What about pesticides? That's an issue much in the news for bees these days. Perhaps the work compares a high-pesticide agricultural environment to a low-pesticide urban environment. The authors discuss this at some length, noting some reasons why that's not at all clear given their specifics. But they present no data on the question.

Another possibility is that modern agricultural lands, with controlled crops, are not reliable sources of food to the bees throughout the year.

The article provides an interesting experimental system. It's simple, and offers a window into bee health. The basic plan should allow for considerable experimental variation. For example, the scientists could set up colonies that are urban, but are accompanied by a fair amount of soil from the agricultural area.

News stories:

* Bumblebees found to do better in urban settings than in agricultural areas. (B Yirka, Phys.org, June 27, 2018.)

* Bumblebees thrive in towns more than countryside. (N Davis, Guardian, June 26, 2018.)

The article, which is freely available: Lower bumblebee colony reproductive success in agricultural compared with urban environments. (A E Samuelson et al, Proceedings of the Royal Society B 285:20180807, June 27, 2018.)

Previous bee post: Zero? Do bees understand? (July 20, 2018).

Bees and pesticides: Largest field trials yet... Neonicotinoid pesticides may harm bees -- except in Germany; role of fungicide (August 20, 2017).

... or herbicides: Glyphosate and the gut microbiome of bees (October 16, 2018).

More city bees... Bees -- around you (June 11, 2009).

More bees... Why did many bees in the United States stop buzzing mid-day on August 21, 2017 (January 2, 2019).

More about city living: Are urban dwellers smarter than rural dwellers? (August 2, 2016).

July 25, 2018

Determining the relationships between various primates can be difficult and sometimes contentious. A new article reports that the Lorax is most closely related to Erythrocebus patas. News story. (C Barras, Nature News, July 23, 2018.) It links to the article -- and includes a dissent. (If you have access... The 3-page article is quite good. Perhaps having an English professor among the authors of a scientific article helps.)

July 24, 2018

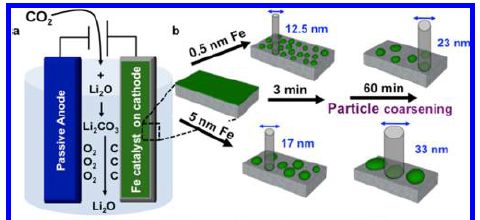

Increasing CO2 in the atmosphere is leading to an increased global temperature (T). That CO2 comes largely from burning fossil fuels. To limit the T increase, we need to reduce the amount of CO2 in the air. We can do that by burning less fossil fuel and/or by removing CO2 once produced. We can reduce fossil fuel use by replacing such fuels with those that don't produce CO2 -- or by just using less fuel.

A recent article suggests that we can achieve major reductions in CO2 emissions by aggressively improving the efficiency of fuel usage.

The following figure provides an example of what they have in mind. It is perhaps both instructive and amusing.


In the big middle part of the figure there is a list of numerous common devices around us. The energy usage of each is shown: the blue circle shows power consumption when in use, and the reddish circle shows power consumption in stand-by mode. (The full figure in the article actually shows a few more devices -- 18 total. I think I have included the major ones here.)

The two big circles at the right show the totals (for the entire set of devices shown in the article). At the left is a smart phone. The authors argue that it can replace all those devices in the middle, with much lower energy usage, as shown by the tiny blue and red circles at the left. During use, its power consumption is about 1/100 that of the devices in the middle; during stand-by, about 1/30.

Look at the blue circle for the TV set. It has a darker blue wedge in it, which shows the portion of the total that this device represents. That is, the TV set accounts for nearly half of the power consumption shown in the full figure. Most of those wedges are individually rather small.

Energy? Power? Power is the rate of using energy. Consider that TV set: about 200 watts. Use it for 5 hours, and you have used 1000 watt-hours of energy. That's 1 kilowatt-hour (kWh). Electricity is typically billed by the kilowatt-hour. (Other forms of energy are billed differently, but in each case, the energy usage could be re-calculated as kWh.) For qualitative discussion, it doesn't matter much whether we talk about energy or power here. But in calculation, it is important to keep them straight. It's the amount of energy used that matters. A heater will have a high power rating. Turn it off, and its energy usage is zero.

This is part of Figure 2 from the article. As noted, the full figure contains a few more devices in the middle section.
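The power-versus-energy distinction comes down to one multiplication: energy = power × time. Here is a tiny sketch of that arithmetic. The TV numbers are the ones used above; the standby load and the heater are hypothetical examples added for illustration, and the function name is likewise hypothetical.

```python
def energy_kwh(power_watts, hours):
    """Energy used (kWh) = power (W) x time (h) / 1000."""
    return power_watts * hours / 1000

print(energy_kwh(200, 5))       # 1.0  -> the TV example: 200 W for 5 hours
print(energy_kwh(5, 24 * 365))  # 43.8 -> hypothetical 5 W standby load, left on for a year
print(energy_kwh(1500, 0))      # 0.0  -> hypothetical 1500 W heater that is switched off
```

The heater line makes the point in the text: a high power rating says nothing about energy use until you know how long the device is on.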

The idea is that a single low-energy device is replacing older higher-energy devices. This trend is already under way as a result of technology development; it is not (necessarily) driven by energy considerations.

There is much to quibble about with that figure. But that would miss the point. The replacements suggested above may not be entirely fair, and smart phones do not dominate the energy scene. However, smart phones can improve the overall energy scene.

The authors do a thorough analysis of energy usage, over various sectors of the economy and geographical regions. They conclude that overall energy usage could be substantially reduced by 2050 by moving toward more energy efficiency. Their conclusion holds even in the face of increasing population and greater overall development.

Increasing energy efficiency reduces fuel use. As a corollary, that makes it easier for low-CO2 fuels, such as renewables, to become a larger fraction of the total.

It's rather rosy! In fact, their bottom line is one of the very best reported for reducing atmospheric CO2. Of course, it is all modeling.

What do we make of this? Reducing the global T increase involves a combination of scientific understanding, technological development, and political decisions. It's not for Musings to take political positions (though we do express opinions sometimes). We present individual articles, each of which makes one contribution to the overall story. Is this a good story? Qualitatively, it would seem to be. The idea that smart phones increase our energy efficiency is reasonable, whether you buy all the details or not. That smart phone of course is just a token. The full analysis addresses the entire energy economy. Most of us probably won't want to go very far in the details of the analysis. Experts will do so, looking at the specifics as well as the general structure of the modeling.

Perhaps the point is that the new article shows how technology developments can improve our energy efficiency, maybe enough to make a substantial contribution to reducing atmospheric CO2 levels. That's good. However, improvements in energy efficiency won't happen automatically, and we should not assume they will happen as the authors say. With attention, such improvements could become an important part of the CO2-reduction story. The article should provide an incentive for directing effort at improving energy efficiency.

News stories:

* World can limit global warming to 1.5C by 'improving energy efficiency'. (Carbon Brief, June 4, 2018.) Includes more of the specifics.

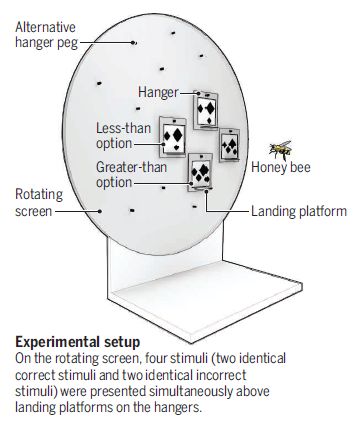

* Transforming how we move around, heat our homes and use devices could limit warming to 1.5C. (Tyndall Centre for Climate Change Research, University of East Anglia, 2018.) From one of the institutions involved in the work. At the end of this story, there is a link to an "open access" copy of the article, presumably from the authors.